An Analysis of "A Stitch in Time Saves Nine: Small VLM as a Precise Guidance for Accelerating Large VLMs"

The challenge of improving the efficiency of vision-language models (VLMs) amid their growing computational demands is the central problem addressed in the paper "A Stitch in Time Saves Nine: Small VLM as a Precise Guidance for Accelerating Large VLMs." The work proposes using smaller VLMs to guide visual token pruning in larger models, substantially improving computational efficiency without a significant loss in performance.

Key Contributions and Insights

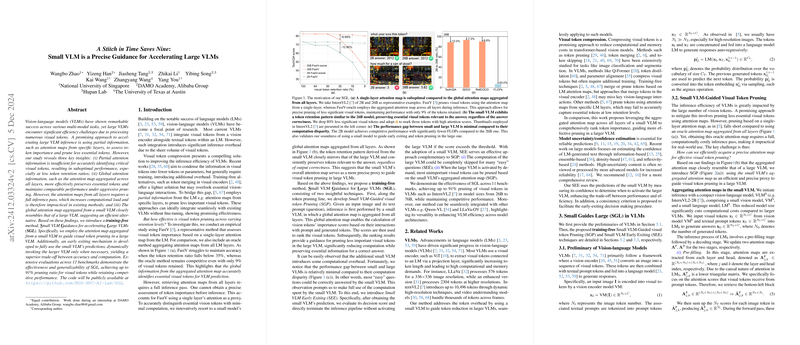

The paper observes that while VLMs have achieved substantial success across multi-modal tasks, large VLMs are burdened by the cost of processing vast numbers of visual tokens. An intuitive mitigation is to prune visual tokens selectively, using attention maps to estimate each token's importance. The authors identify three key insights:

- Partial Attention Limitations: Pruning tokens based on partial attention maps, drawn from only a subset of the model's layers, fails to retain performance, especially when the token retention ratio is low.

- Merit of Global Attention: Aggregating attention maps across all layers into a global attention map better preserves critical visual tokens, allowing aggressive pruning while maintaining performance.

- Small VLM's Efficacy: Global attention maps derived from smaller VLMs closely mirror those of larger VLMs, so a small VLM can supply the guidance needed for efficient token pruning.
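To make the contrast between partial and global attention concrete, here is a minimal sketch in plain Python. It assumes head-averaged attention maps indexed as `attn[layer][query][key]` and scores each visual token by the attention it receives from text-token queries; the function names, this scoring rule, and the toy maps are illustrative assumptions, not the paper's exact procedure. The "partial" score sums over a single shallow layer, while the "global" score sums over every layer.

```python
from typing import Iterable, List, Sequence

# attn[layer][query][key]: head-averaged attention weights (assumed layout).
AttentionMaps = List[List[List[float]]]


def visual_token_scores(attn: AttentionMaps, layers: Iterable[int],
                        text_queries: Sequence[int], visual_keys: Sequence[int]) -> List[float]:
    """Sum the attention each visual token receives from text queries over `layers`."""
    scores = [0.0] * len(visual_keys)
    for layer in layers:
        for q in text_queries:
            for i, k in enumerate(visual_keys):
                scores[i] += attn[layer][q][k]
    return scores


def top_k_tokens(scores: Sequence[float], keep: int) -> List[int]:
    """Positions (within visual_keys) of the `keep` highest scores, in original order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])


def toy_layer(visual_weights: Sequence[float], seq_len: int = 5) -> List[List[float]]:
    """Toy map (no causal mask): every query row puts `visual_weights` on the first keys."""
    rest = (1.0 - sum(visual_weights)) / (seq_len - len(visual_weights))
    row = list(visual_weights) + [rest] * (seq_len - len(visual_weights))
    return [list(row) for _ in range(seq_len)]


# Tokens 0-2 are visual, 3-4 are text; layer 0 favors token 0, later layers favor token 2.
attn = [toy_layer([0.6, 0.1, 0.1]), toy_layer([0.1, 0.1, 0.6]), toy_layer([0.1, 0.1, 0.6])]
visual, text = [0, 1, 2], [3, 4]

partial = top_k_tokens(visual_token_scores(attn, [0], text, visual), keep=1)
global_ = top_k_tokens(visual_token_scores(attn, range(len(attn)), text, visual), keep=1)
print(partial, global_)  # [0] [2]
```

With one visual token kept, the single-layer score selects token 0 while the all-layer score selects token 2, illustrating how a partial view can rank tokens differently from the aggregate over the whole model.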

The authors build on these insights with Small VLM Guidance (SGL), a training-free method in which the large VLM prunes visual tokens using global attention maps aggregated from a small VLM. SGL is paired with a Small VLM Early Exiting (SEE) mechanism that invokes the large VLM only when doing so is likely to improve the result.
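The guidance step can be sketched as follows, under two stated assumptions: the small VLM has already produced one aggregated importance score per visual token, and both models tokenize the image into the same number of visual tokens in the same order, so indices transfer directly. The names `guided_keep_indices`, `prune_large_vlm_tokens`, and `keep_ratio` are hypothetical, and where inside the large VLM the pruning is applied is not shown.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def guided_keep_indices(small_vlm_scores: Sequence[float], keep_ratio: float) -> List[int]:
    """Rank visual tokens by the small VLM's aggregated attention and keep the top fraction."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    keep = max(1, round(keep_ratio * len(small_vlm_scores)))
    ranked = sorted(range(len(small_vlm_scores)),
                    key=lambda i: small_vlm_scores[i], reverse=True)
    return sorted(ranked[:keep])  # restore original (spatial) order


def prune_large_vlm_tokens(large_visual_tokens: Sequence[T], keep_idx: Sequence[int]) -> List[T]:
    """Keep only the selected visual tokens; assumes both models' token grids are index-aligned."""
    return [large_visual_tokens[i] for i in keep_idx]


# Made-up small-VLM scores for six visual tokens; strings stand in for large-VLM embeddings.
scores = [0.05, 0.40, 0.10, 0.30, 0.02, 0.13]
idx = guided_keep_indices(scores, keep_ratio=0.5)
print(idx)                                                       # [1, 3, 5]
print(prune_large_vlm_tokens([f"v{i}" for i in range(6)], idx))  # ['v1', 'v3', 'v5']
```

Kept indices are re-sorted into their original order so the surviving tokens keep their relative spatial positions; if the two models used different visual token grids, an extra index-mapping step would be needed.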

Numerical Results and Performance

Across evaluations on 11 benchmarks, SGL prunes up to 91% of visual tokens while maintaining competitive performance. Because the large VLM processes far fewer visual tokens, this translates into substantial computational savings, supporting the paper's central claim.
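A back-of-the-envelope calculation shows why removing most visual tokens matters. The sketch uses illustrative numbers that are assumptions, not figures from the paper: 576 visual tokens (the count produced by LLaVA-1.5's vision encoder), 64 text tokens, and LLaMA-7B-like dimensions (hidden size 4096, FFN size 11008). The per-layer cost uses a common rough estimate of 4nd² + 2n²d + 2ndm multiply-accumulates for n tokens, which ignores details such as gated FFNs and KV caching.

```python
def kept_visual_tokens(num_visual: int, prune_ratio: float) -> int:
    """Number of visual tokens left after pruning `prune_ratio` of them."""
    return num_visual - round(num_visual * prune_ratio)


def layer_macs(n: int, d: int, m: int) -> int:
    """Rough per-layer multiply-accumulates for a sequence of n tokens:
    4nd^2 (Q, K, V, O projections) + 2n^2d (scores and weighted sum) + 2ndm (two FFN matrices)."""
    return 4 * n * d * d + 2 * n * n * d + 2 * n * d * m


# Illustrative assumptions, not values taken from the paper.
NUM_VISUAL, NUM_TEXT, HIDDEN, FFN = 576, 64, 4096, 11008

kept = kept_visual_tokens(NUM_VISUAL, 0.91)
ratio = layer_macs(kept + NUM_TEXT, HIDDEN, FFN) / layer_macs(NUM_VISUAL + NUM_TEXT, HIDDEN, FFN)
print(kept)            # 52
print(f"{ratio:.2f}")  # 0.18
```

With 91% of the 576 visual tokens pruned, 52 remain, and each layer that runs on the shortened sequence costs roughly 18% of its original estimate. Layers that run before pruning still see the full sequence, so end-to-end savings depend on where pruning is applied.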

Furthermore, the results suggest that the performance gap between small and large VLMs narrows considerably once computational cost is taken into account, which underscores the value of SEE. By comparing a confidence score derived from the small VLM's prediction against a decision threshold, SGL can exit early with the small VLM's answer, achieving a better trade-off between computation and accuracy.
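The early-exit decision can be sketched as a threshold test on the small VLM's confidence. The paper's exact confidence metric is not reproduced here; as a stand-in, this sketch assumes the geometric-mean probability of the small VLM's answer tokens (computed from their log-probabilities), and the function names and the 0.9 threshold are hypothetical. The `run_large_vlm` callable stands for the large-VLM pass, which in SGL would use the small VLM's attention guidance for pruning.

```python
import math
from typing import Callable, Sequence, Tuple


def sequence_confidence(token_logprobs: Sequence[float]) -> float:
    """Hypothetical confidence metric: geometric-mean probability of the answer tokens."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def answer_with_early_exit(
    small_answer: str,
    small_logprobs: Sequence[float],
    run_large_vlm: Callable[[], str],
    threshold: float = 0.9,  # hypothetical value; would be tuned per deployment
) -> Tuple[str, bool]:
    """Return (answer, exited_early); call the large VLM only when the small VLM is unsure."""
    if sequence_confidence(small_logprobs) >= threshold:
        return small_answer, True  # early exit: the large VLM never runs
    return run_large_vlm(), False


def large() -> str:
    return "large-VLM answer"  # stand-in for the guided, pruned large-VLM pass


print(answer_with_early_exit("cat", [math.log(0.97), math.log(0.95)], large))  # ('cat', True)
print(answer_with_early_exit("cat", [math.log(0.60), math.log(0.80)], large))  # ('large-VLM answer', False)
```

Raising the threshold sends more inputs to the large VLM (more computation, and typically higher accuracy); lowering it lets the small VLM answer more often.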

Theoretical and Practical Implications

The implications of this research are both theoretical and practical. Theoretically, the finding that smaller VLMs can accurately guide the processing of their larger counterparts suggests that similar hierarchical, small-guides-large designs may apply more broadly across AI. Practically, SGL reduces the computational footprint of large VLMs without any retraining, making deployment more scalable and sustainable, particularly in resource-constrained environments.

Future Developments

Looking ahead, integrating SGL into unified models that handle both generation and understanding tasks remains an open avenue. Exploring finer-grained attention mechanisms or further optimizing the aggregation strategy could yield additional efficiency gains. Validating SGL in dynamic settings such as real-time applications, where computational limits are even more stringent, would further establish its versatility and practical utility.

In conclusion, this paper makes a substantial contribution to balancing performance and efficiency in VLMs, paving the way for more computationally efficient AI systems that retain the nuanced understanding complex multi-modal tasks require.