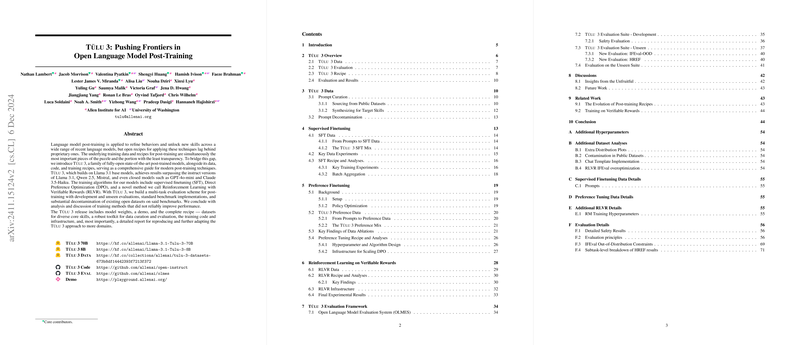

Overview of "Tülu 3: Pushing Frontiers in Open LLM Post-Training"

The paper introduces Tülu 3, a family of open, state-of-the-art post-trained LLMs built on Llama 3.1 base models, with the aim of narrowing the gap between open models and their closed proprietary counterparts. Central to the work is a complete release of the data, code, and training recipes behind its post-training pipeline. The authors address the lack of transparency and open resources in post-training by making their models and training regimens public, and they report that the resulting models outperform not only comparable open models but also closed models such as GPT-4o-mini and Claude 3.5-Haiku.

Core Innovations and Techniques

Tülu 3's recipe applies several training stages in sequence, going beyond conventional instruction finetuning:

- Supervised Finetuning (SFT): Training on a curated mixture of prompts and completions, aimed at strengthening core skills such as reasoning, math, coding, and instruction following without degrading performance in other areas.

- Direct Preference Optimization (DPO): Preference tuning on a mix of on-policy and off-policy preference data, improved by scaling up that data and by producing it with a synthetic preference-data generation pipeline.

- Reinforcement Learning with Verifiable Rewards (RLVR): A new training stage that uses standard RL machinery but rewards the model only when its output can be checked as correct, for example a math answer that matches the ground truth or a response that satisfies a precisely stated instruction constraint.
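The preference and reward objectives in the list above can be made concrete with a short plain-Python sketch. `dpo_loss` implements the standard DPO objective (Rafailov et al., 2023) for a single preference pair, taking precomputed summed log-probabilities, with an optional length-normalized variant; `beta = 0.1` is an illustrative default. `math_reward` and `max_words_reward` are hypothetical verifiers in the spirit of RLVR: the last-number extraction rule, the word-limit constraint, and the `correct_reward` value are assumptions chosen for illustration, not the paper's implementation.

```python
import math
import re
from typing import Optional


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
    chosen_len: Optional[int] = None,
    rejected_len: Optional[int] = None,
) -> float:
    """DPO loss for a single preference pair.

    Each *_logp is the summed log-probability of a completion under the
    trainable policy or the frozen reference model. If both lengths are
    given (and nonzero), each log-ratio is divided by its token count,
    giving a length-normalized variant.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    if chosen_len and rejected_len:
        chosen_ratio /= chosen_len
        rejected_ratio /= rejected_len
    # -log(sigmoid(x)) == softplus(-x); the loss shrinks as the policy
    # favors the chosen completion more strongly than the reference does.
    return softplus(-beta * (chosen_ratio - rejected_ratio))


# Hypothetical answer extractor: take the last number in the completion.
_NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def math_reward(completion: str, gold_answer: str, correct_reward: float = 1.0) -> float:
    """Verifiable reward: pay out only if the extracted answer matches."""
    numbers = _NUMBER.findall(completion)
    if not numbers:
        return 0.0
    try:
        correct = math.isclose(float(numbers[-1]), float(gold_answer))
    except ValueError:  # gold answer is not numeric
        return 0.0
    return correct_reward if correct else 0.0


def max_words_reward(completion: str, max_words: int, correct_reward: float = 1.0) -> float:
    """Verifiable reward for a constraint like 'answer in at most N words'."""
    return correct_reward if len(completion.split()) <= max_words else 0.0


if __name__ == "__main__":
    # The policy raises the chosen completion's log-prob relative to the reference.
    print(dpo_loss(-10.0, -12.0, -11.0, -11.0))  # below log(2) ~ 0.693
    print(math_reward("3 + 39 = 42, so the answer is 42.", "42"))  # 1.0
    print(max_words_reward("Yes, it works.", max_words=5))  # 1.0
```

In an RLVR setup, a function like `math_reward` would take the place of a learned reward model inside an otherwise ordinary RL training loop, which this sketch leaves out. Because the reward is binary, the model gains nothing from answers that merely look plausible.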

Alongside these training stages, the paper contributes a multi-task evaluation framework that separates development benchmarks (used to guide training decisions) from unseen benchmarks (held out to test generalization), which supports transparent and reproducible evaluation.

Results and Implications

The empirical evaluations show Tülu 3 outperforming leading open models of comparable size, such as Llama 3.1 Instruct and Qwen 2.5 Instruct, with gains across benchmarks covering reasoning, math, and instruction following. Because the models are open and thoroughly documented, the work also raises the bar for transparency and accessibility in model development.

Broader Impact and Future Directions

Tülu 3's open-source release is likely to have broad implications for LLM research. By providing a clear, reproducible recipe for training high-performance open-weight models, the paper lays groundwork for future post-training research and lets the community build on its findings without the constraints that closed-source models impose.

The detailed data decontamination process and out-of-distribution instruction-following benchmarks such as IFEval-OOD underline the need for careful evaluation: decontamination keeps benchmark items out of the training data so that scores are not inflated, and IFEval-OOD checks whether instruction-following skills generalize beyond the constraints seen during training. The authors acknowledge that multilingual capabilities, long-context understanding, and more complex skill domains were beyond the scope of the paper's primary contributions; future research can extend Tülu 3's methods to these areas.
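One common way to implement data decontamination is n-gram overlap matching between training examples and evaluation prompts. The sketch below illustrates that general technique; it is not presented as the paper's exact procedure, and the whitespace tokenization, n = 8, the 0.5 overlap threshold, and all function names are assumptions for illustration.

```python
from typing import Iterable, List, Set, Tuple

NGram = Tuple[str, ...]


def ngrams(text: str, n: int) -> List[NGram]:
    """Lowercased whitespace-token n-grams (empty if text has fewer than n tokens)."""
    tokens = text.lower().split()
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def build_eval_index(eval_texts: Iterable[str], n: int) -> Set[NGram]:
    """Collect every n-gram that appears in any evaluation prompt."""
    index: Set[NGram] = set()
    for text in eval_texts:
        index.update(ngrams(text, n))
    return index


def is_contaminated(train_text: str, index: Set[NGram], n: int, threshold: float) -> bool:
    """Flag a training example if enough of its n-grams occur in the eval set."""
    grams = ngrams(train_text, n)
    if not grams:
        return False
    overlap = sum(1 for g in grams if g in index) / len(grams)
    return overlap >= threshold


def decontaminate(
    train_texts: List[str],
    eval_texts: Iterable[str],
    n: int = 8,
    threshold: float = 0.5,
) -> List[str]:
    """Return only the training examples that do not overlap the eval set."""
    index = build_eval_index(eval_texts, n)
    return [t for t in train_texts if not is_contaminated(t, index, n, threshold)]


if __name__ == "__main__":
    eval_prompts = ["What is the capital of France and why is it famous today?"]
    train = [
        "what is the capital of france and why is it famous today?",
        "Write a haiku about autumn leaves falling on a quiet pond.",
    ]
    print(decontaminate(train, eval_prompts))  # keeps only the haiku prompt
```

Examples with fewer than n tokens produce no n-grams and are never flagged, so a real pipeline would likely need a separate check (for instance exact matching) for very short prompts.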

In summary, Tülu 3 is a significant step toward democratizing access to advanced language models, with value both for research on post-training and for practical applications of AI.