Disentangling Memory and Reasoning Ability in LLMs

The paper "Disentangling Memory and Reasoning Ability in LLMs" proposes a way to improve the interpretability and performance of LLMs by explicitly separating memory recall from reasoning. The work is motivated by a limitation of existing LLMs: they handle complex tasks without clearly distinguishing knowledge retrieval from reasoning steps, which can contribute to problems such as hallucinations and knowledge erosion in high-stakes applications.

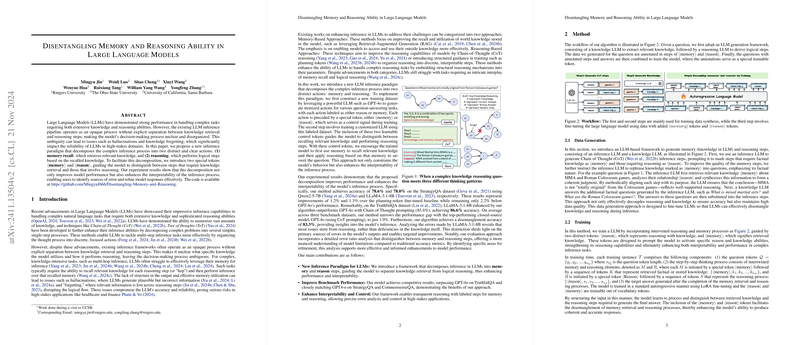

Proposed Inference Paradigm

The authors propose a new inference paradigm in which the traditionally opaque inference process is decomposed into two explicit actions: memory recall and reasoning. Two special tokens, <memory> and <reason>, mark this decomposition and steer the model to separate the knowledge-retrieval phase from the reasoning phase. LLMs are trained on datasets structured to include these tokens, so that the models learn to activate stored knowledge and reasoning capabilities as distinct steps.
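To make the token-based decomposition concrete, the following minimal sketch splits a tagged model output into labelled steps. It assumes each step begins with a <memory> or <reason> marker and runs until the next marker; that layout, the function name `split_steps`, and the sample text are illustrative assumptions, not the paper's actual data format or code.

```python
import re
from typing import List, Tuple

# Assumption: every step starts with a marker token and extends to the next
# marker (or the end of the text). The paper's real format may differ.
STEP_PATTERN = re.compile(
    r"<(memory|reason)>(.*?)(?=<(?:memory|reason)>|\Z)", re.DOTALL
)


def split_steps(text: str) -> List[Tuple[str, str]]:
    """Return (kind, content) pairs, where kind is 'memory' or 'reason'."""
    return [(kind, body.strip()) for kind, body in STEP_PATTERN.findall(text)]


# Hypothetical model output, written by hand for illustration only.
sample = (
    "<memory> Hamsters are prey animals. "
    "<reason> Prey animals provide food for predators. "
    "<reason> So the answer is yes."
)

for kind, content in split_steps(sample):
    print(f"[{kind}] {content}")
```

Separating the steps this way lets a reader inspect which statements the model presents as recalled knowledge and which as inference, which is the kind of interpretability the paradigm aims for.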

Key Results

Experimental results indicate that this structured inference process improves both the performance of LLMs and the interpretability of the inference mechanism. On the StrategyQA dataset, the LLaMA-3.1-8B model reached 78% accuracy, a notable improvement over baseline methods. On the TruthfulQA dataset, the approach outperformed the state-of-the-art GPT-4o, achieving an accuracy of 86.6%.

Implications and Significance

The paper's findings have implications for the development of more reliable and interpretable AI systems, particularly in domains where transparent decision-making is critical, such as healthcare and finance. By decoupling memory and reasoning, the paper offers a methodologically sound approach to addressing the hallucinations and logical inconsistencies that often affect LLM outputs. This separation could also enable more user-controllable AI applications, in which end users direct the model to focus on reasoning or recall as needed.

Future Directions

Further research could explore dynamic memory updating mechanisms and adaptive reasoning steps to optimize real-time inference. Given the promising results, future work could extend this paradigm to multimodal tasks, potentially expanding its utility beyond textual data to integrated datasets involving both visual and textual information.

Conclusion

In summary, the paper introduces a structural rethinking of LLM inference that improves performance and gives clearer insight into how the model reasons. Challenges remain, such as potential computational overhead and the complexity of token configuration, but the proposed method is a meaningful step toward more dependable and transparent AI systems. The work invites further research on techniques that improve both accuracy and transparency.