Disentangling Knowledge and Reasoning in LLMs

The paper "General Reasoning Requires Learning to Reason from the Get-go" examines why current LLMs fall short of true artificial general intelligence (AGI). The authors argue that a key limitation is how existing LLMs are pretrained: by next-token prediction on passively collected internet data. Because this objective entangles knowledge with reasoning, it limits how well models generalize to novel scenarios. The paper presents compelling evidence and arguments for disentangling reasoning from knowledge in order to obtain more robust and adaptable reasoning capabilities.

Key Contributions

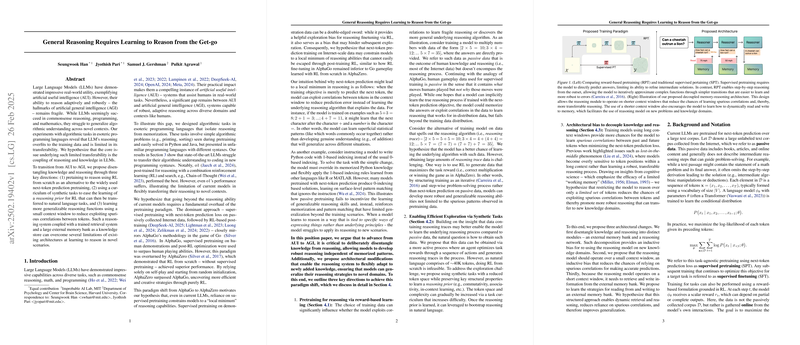

- Evaluation of Current LLMs' Reasoning Capabilities: Through an extensive evaluation on algorithmic tasks written in esoteric programming languages, the authors expose deficiencies in the reasoning and generalization abilities of current state-of-the-art models. Because the syntax of these languages is unfamiliar, the tasks separate reasoning from rote memorization and limit the advantage of prior knowledge. Existing models, including advanced ones such as o1, struggle with these tasks, which shows how poorly they transfer learned reasoning skills to new contexts.

- Proposal for Reinforcement Learning (RL) Pretraining: The authors argue that the current paradigm of supervised pretraining followed by RL fine-tuning hinders models from learning more general reasoning strategies. They liken this to the difference between AlphaGo, which was first trained on human expert games, and AlphaZero, which eschewed human demonstration-based pretraining entirely and still achieved superior performance. Accordingly, they propose integrating RL from the very start of pretraining so that the model does not get stuck in a local minimum of reasoning capability.

- Use of Synthetic Tasks for Reasoning Pretraining: Because natural language presents an enormous exploration space, the paper advocates pretraining reasoning on synthetic tasks with much smaller token spaces. The authors hypothesize that tasks encapsulating universal reasoning primitives could scaffold the learning of the more complex reasoning that natural language tasks require.

- Architectural Enhancements to Disentangle Memory and Reasoning: The authors propose architectural changes that separate knowledge storage from reasoning. Their suggested model pairs an external semantic memory bank with a reasoning network that operates over a limited context window. This separation aims to reduce reliance on spurious correlations and to foster more generalizable reasoning. The design draws on cognitive science, particularly the concept of chunking, which helps humans handle complex information despite limited working memory.
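
To make the esoteric-language evaluation concrete, the following is a minimal sketch of how a model's answer could be scored automatically by executing it. It assumes Brainfuck purely as a familiar example of an esoteric language, and `run_bf` and `score_program` are hypothetical helpers written for illustration; this is not the paper's evaluation harness.

```python
from collections import defaultdict


def run_bf(program: str, stdin: str = "", max_steps: int = 100_000) -> str:
    """Interpret a Brainfuck program and return everything it prints."""
    # Pre-compute matching bracket positions so loop jumps are O(1).
    jump: dict[int, int] = {}
    stack: list[int] = []
    for i, ch in enumerate(program):
        if ch == "[":
            stack.append(i)
        elif ch == "]":
            if not stack:
                raise ValueError(f"unmatched ']' at position {i}")
            j = stack.pop()
            jump[i], jump[j] = j, i
    if stack:
        raise ValueError(f"unmatched '[' at position {stack[-1]}")

    tape = defaultdict(int)  # unbounded tape of byte-sized cells, all starting at 0
    ptr = pc = steps = 0
    chars = iter(stdin)
    out: list[str] = []
    while pc < len(program):
        steps += 1
        if steps > max_steps:  # guard against non-terminating candidate programs
            raise TimeoutError("step budget exceeded")
        ch = program[pc]
        if ch == ">":
            ptr += 1
        elif ch == "<":
            ptr -= 1
        elif ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ".":
            out.append(chr(tape[ptr]))
        elif ch == ",":
            tape[ptr] = ord(next(chars, "\0")) % 256  # end of input reads as 0
        elif ch == "[" and tape[ptr] == 0:
            pc = jump[pc]  # skip the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jump[pc]  # jump back to the start of the loop
        pc += 1
    return "".join(out)


def score_program(program: str, stdin: str, expected: str) -> float:
    """Hypothetical exact-match metric: 1.0 if the program prints `expected`."""
    try:
        return 1.0 if run_bf(program, stdin) == expected else 0.0
    except (ValueError, TimeoutError):
        return 0.0  # malformed or non-terminating programs get no credit
```

For example, the echo program `,[.,]` run on input `abc` prints `abc`, so `score_program(",[.,]", "abc", "abc")` returns `1.0`. Scoring by execution rather than by string comparison of programs means any correct solution earns credit, however it is written.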

Implications and Future Directions

The proposals in this paper have significant implications for AI research, particularly for the pursuit of artificial general intelligence. By proposing ways to incorporate reasoning from the start of a model's training, the authors open avenues for overcoming limitations currently observed in LLMs. Their use of synthetic tasks to develop a reasoning prior within a limited token space offers a new way to prepare models for larger, more complex natural language contexts.
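
As a rough illustration of what a synthetic reasoning task with a small token space might look like, the sketch below generates list-manipulation problems over a tiny vocabulary and exposes a binary, automatically checkable reward, the kind of signal that RL from the start of training would need. The task family (`reverse`, `sort`, `dedup`), the vocabulary, and the helper names are hypothetical choices made for illustration, not tasks taken from the paper.

```python
import random
from dataclasses import dataclass

# Deliberately tiny vocabulary: five symbols plus three operation names.
SYMBOLS = ["a", "b", "c", "d", "e"]
OPS = ["reverse", "sort", "dedup"]


@dataclass(frozen=True)
class Task:
    op: str
    seq: tuple[str, ...]

    def prompt(self) -> str:
        return f"{self.op} : {' '.join(self.seq)}"

    def answer(self) -> str:
        """Ground-truth output, computed programmatically rather than labeled by hand."""
        if self.op == "reverse":
            out = list(reversed(self.seq))
        elif self.op == "sort":
            out = sorted(self.seq)
        elif self.op == "dedup":
            out = list(dict.fromkeys(self.seq))  # keep first occurrences, in order
        else:
            raise ValueError(f"unknown op: {self.op}")
        return " ".join(out)


def sample_task(rng: random.Random, max_len: int = 6) -> Task:
    """Draw a random task; fresh instances are essentially free to generate."""
    seq = tuple(rng.choice(SYMBOLS) for _ in range(rng.randint(1, max_len)))
    return Task(op=rng.choice(OPS), seq=seq)


def reward(task: Task, completion: str) -> float:
    """Binary, automatically verifiable reward usable as an RL signal."""
    return 1.0 if completion.split() == task.answer().split() else 0.0
```

A policy would receive `task.prompt()` as input and be rewarded with `reward(task, completion)`. Because ground truth is computed rather than collected, the same loop works whether the policy starts from scratch, as the paper advocates, or from a supervised checkpoint.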

Furthermore, decoupling knowledge from reasoning supports a modular approach to model design, in which reasoning mechanisms could be standardized and reused across domains with different knowledge bases. Such a separation could also make it easier to update a model or integrate new information without extensive retraining, giving AI systems a more human-like adaptability.
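
The modularity argument can be illustrated with a toy sketch. Here, purely as an assumption for illustration, the "semantic memory" is a list of (subject, relation, object) facts, retrieval returns at most `window` facts about one entity at a time (standing in for the limited context window), and the "reasoner" is a fixed procedure that follows `is_a` links. `MemoryBank`, `retrieve`, and `is_a` are hypothetical names; the paper envisions learned components, not hand-written rules.

```python
from dataclasses import dataclass, field

Fact = tuple[str, str, str]  # (subject, relation, object)


@dataclass
class MemoryBank:
    """Hypothetical external semantic memory: stores facts, returns a few on demand."""
    facts: list[Fact] = field(default_factory=list)

    def retrieve(self, subject: str, k: int) -> list[Fact]:
        # Only facts about `subject`, capped at k, mimic a limited context window.
        return [f for f in self.facts if f[0] == subject][:k]


def is_a(memory: MemoryBank, entity: str, category: str,
         window: int = 4, max_hops: int = 10) -> bool:
    """Domain-agnostic reasoner: follows 'is_a' links using only retrieved facts."""
    if entity == category:
        return True
    frontier, seen = [entity], {entity}
    for _hop in range(max_hops):
        next_frontier = []
        for node in frontier:
            for _subj, rel, obj in memory.retrieve(node, window):
                if rel != "is_a" or obj in seen:
                    continue  # ignore other relations and avoid revisiting cycles
                if obj == category:
                    return True
                seen.add(obj)
                next_frontier.append(obj)
        if not next_frontier:
            return False
        frontier = next_frontier
    return False
```

The same `is_a` function answers questions over an animal knowledge base (`sparrow is_a bird`, `bird is_a animal`) and a vehicle knowledge base (`sedan is_a car`, `car is_a vehicle`); only the `MemoryBank` instance changes, which mirrors the idea that knowledge can be updated without retraining the reasoner.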

Each of the proposed paradigm shifts warrants further exploration. Future work should validate these hypotheses empirically, particularly at larger scale, in more realistic settings, and across varied domains, to assess how much disentangling reasoning from knowledge actually contributes to more general AI systems.