Metamorphic Evaluation of ChatGPT as a Recommender System

At the intersection of LLMs, particularly the Generative Pre-trained Transformer (GPT), and recommender systems, a notable challenge arises: how to evaluate these systems effectively. The paper "Metamorphic Evaluation of ChatGPT as a Recommender System" by Khirbat et al. addresses this issue by introducing a metamorphic testing framework adapted specifically for LLM-based recommender systems. The paper highlights the problems particular to evaluating LLMs, namely their black-box nature and probabilistic outputs, which are common issues that manifest in distinct ways when LLMs are applied to recommendation.

Metamorphic Testing Framework

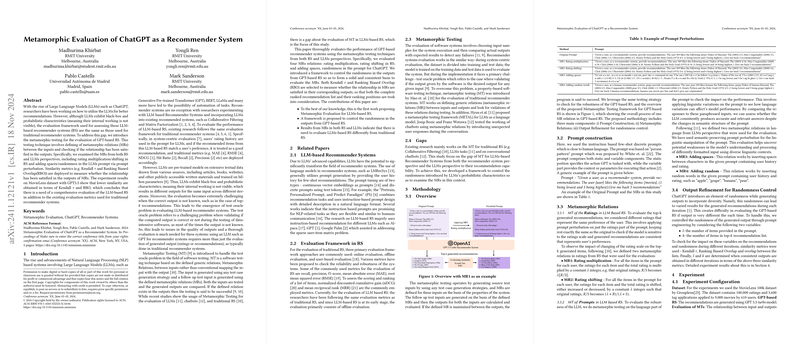

Metamorphic testing (MT) is a strategy traditionally used to address the test oracle problem, in which it is difficult to determine the correct output for a given input. The problem is especially prevalent in data-intensive applications such as LLM-based systems. By leveraging metamorphic relations (MRs), the authors craft a testing framework that evaluates the outputs generated by GPT-based systems against predefined relationships between the outputs of related inputs, rather than against exact expected outcomes.

The paper primarily devises two sets of MRs:

- MRs from the Recommender Systems Perspective: These alter the numerical ratings through multiplication and shifting transformations. Such variations test the sensitivity and robustness of the system to changes in user input values that preserve relative user preferences.

- MRs from the LLMs Perspective: These involve linguistic alterations to the prompts, such as adding spaces or random words, designed to scrutinize the system's consistency under input variations that do not semantically alter the original prompt.
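The two families of transformations can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names, the dictionary representation of ratings, and the filler-word pool are all hypothetical, and the paper's exact transformation parameters are not reproduced here.

```python
import random


def preference_order(ratings):
    """Return items sorted from most to least preferred (ties broken by name)."""
    return sorted(ratings, key=lambda item: (-ratings[item], item))


def scale_ratings(ratings, factor):
    """Multiplication MR: scale every rating by a positive factor."""
    if factor <= 0:
        raise ValueError("factor must be positive to preserve preference order")
    return {item: r * factor for item, r in ratings.items()}


def shift_ratings(ratings, offset):
    """Shifting MR: add the same constant to every rating."""
    return {item: r + offset for item, r in ratings.items()}


def add_spaces(prompt, n, seed=0):
    """Linguistic MR: append n extra spaces after randomly chosen words."""
    rng = random.Random(seed)
    words = prompt.split(" ")
    for _ in range(n):
        i = rng.randrange(len(words))
        words[i] += " "
    return " ".join(words)


def add_random_words(prompt, word_pool, n, seed=0):
    """Linguistic MR: insert n words from a (hypothetical) pool at random positions."""
    rng = random.Random(seed)
    tokens = prompt.split()
    for _ in range(n):
        tokens.insert(rng.randrange(len(tokens) + 1), rng.choice(word_pool))
    return " ".join(tokens)


if __name__ == "__main__":
    ratings = {"Heat": 4.0, "Alien": 5.0, "Big": 2.5}  # made-up example data
    # Both numeric transformations keep the user's relative preferences intact.
    print(preference_order(scale_ratings(ratings, 2)) == preference_order(ratings))
    print(preference_order(shift_ratings(ratings, 1)) == preference_order(ratings))
```

Each transformed input would then be sent to the recommender alongside the original, and the two output lists compared; the relation under test is that the recommendations should stay (approximately) the same.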

Methodology and Evaluation Criteria

The methodology uses the MovieLens dataset and the GPT-3.5 Turbo model to generate recommendations, and evaluates them using Kendall's Tau and Rank-Biased Overlap (RBO). Together these metrics provide a dual analysis: Kendall's Tau assesses agreement in the order of the generated lists, while RBO assigns higher importance to the top of the list, which is more critical in recommendation contexts.
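To make the two metrics concrete, here is a minimal pure-Python sketch. It rests on several assumptions that the summary above does not settle: Kendall's Tau is computed only over items that appear in both lists and without tie handling, and RBO is computed in its truncated (non-extrapolated) form with a persistence parameter `p` chosen here as 0.9. The function names are illustrative, and the paper may use different variants or library implementations.

```python
from itertools import combinations


def kendall_tau(list_a, list_b):
    """Kendall's Tau over the items shared by two duplicate-free ranked lists."""
    in_b = set(list_b)
    shared = [x for x in list_a if x in in_b]
    if len(shared) < 2:
        raise ValueError("need at least two shared items")
    pos_b = {item: i for i, item in enumerate(list_b)}
    concordant = discordant = 0
    # Every pair (x, y) from `shared` has x ranked above y in list_a.
    for x, y in combinations(shared, 2):
        if pos_b[x] < pos_b[y]:
            concordant += 1
        else:
            discordant += 1
    return (concordant - discordant) / (concordant + discordant)


def rbo_truncated(list_a, list_b, p=0.9):
    """Truncated Rank-Biased Overlap down to the depth of the shorter list.

    Agreement at depth d is weighted by p**(d - 1), so the top of the list
    dominates. For identical lists of length k this form yields 1 - p**k
    rather than 1, because the infinite tail is not extrapolated.
    """
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    depth = min(len(list_a), len(list_b))
    seen_a, seen_b = set(), set()
    total = 0.0
    for d in range(1, depth + 1):
        seen_a.add(list_a[d - 1])
        seen_b.add(list_b[d - 1])
        total += p ** (d - 1) * len(seen_a & seen_b) / d
    return (1 - p) * total


if __name__ == "__main__":
    original = ["a", "b", "c"]
    follow_up = ["a", "c", "b"]  # same top item, lower items swapped
    print(kendall_tau(original, follow_up))
    print(rbo_truncated(original, follow_up))
```

In a metamorphic test, `original` would be the list recommended for the source prompt and `follow_up` the list recommended for the transformed prompt. A swap near the bottom of the list lowers Kendall's Tau while costing relatively little RBO, which is why the two metrics can disagree.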

The results show clear differences in how the system handles the two types of MRs. For example, when numerical transformations are applied, Kendall's Tau degrades, indicating substantial changes in the order of recommended items, yet the RBO results suggest some stability among the top-ranked items. This contrast between the two metrics is less pronounced under linguistic alterations, which points to greater variability and reduced robustness to variations in prompt structure.

Theoretical and Practical Implications

The most compelling aspect of this paper is its demonstration that LLM-based recommender systems need specialized evaluation methodologies, distinct from those employed for traditional systems. The findings suggest that evaluation frameworks must account for the non-deterministic nature of LLMs. Doing so not only helps identify weaknesses and variance in LLM-derived recommendations but also guides future improvements in the design and deployment of such systems.

From a theoretical standpoint, this research contributes to the diversification of evaluation techniques for LLM applications, emphasizing the interplay between software testing paradigms and AI advancements. Practically, it gives developers a new lens through which to optimize LLM-based recommender systems, with the aim of greater reliability and user satisfaction.

Future Directions

As LLMs continue to advance and are integrated into more application domains, the insights from this paper underscore the importance of continued research into testing methodologies that can accommodate growing system complexity and diverse applications. Future work could explore broader classes of MRs or integrate chain-of-thought prompting to refine GPT's recommendation capabilities. The challenge remains to extend these methodologies to the full spectrum of LLM functionality beyond recommendation tasks. This ongoing research helps chart a path toward more transparent, understandable, and robust AI systems as they become increasingly embedded in decision-making processes across industries.