An Expert Analysis of "How Can Recommender Systems Benefit from Large Language Models: A Survey"

This survey by Lin et al. from Shanghai Jiao Tong University and Noah's Ark Lab, Huawei, offers a comprehensive examination of how large language models (LLMs) can be incorporated into recommender systems (RS) and what advantages they bring. Through an extensive literature review, the paper identifies the main ways LLMs can augment each stage of the RS pipeline and the core challenges that arise during this integration.

LLMs in Recommender Systems: Where and How

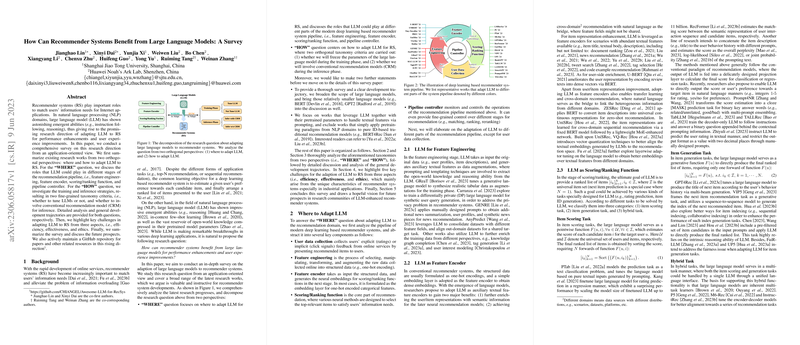

The authors first address where LLMs can be adapted, dividing the recommender system pipeline into five stages: feature engineering, feature encoding, the scoring/ranking function, user interaction, and pipeline control. LLMs offer distinct potential benefits at each stage:

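- Feature Engineering and Encoding: For feature engineering, LLMs can generate rich auxiliary textual features, such as expanded item descriptions or inferred user interests, that enrich user and item profiles. As feature encoders, they convert textual side information into dense semantic representations. Both roles help counter the data sparsity and limited domain knowledge of traditional models.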

- Scoring/Ranking: LLMs can serve directly as the scoring or ranking function, either estimating how likely a user is to prefer a candidate item or generating a ranked list of items. Their logical and commonsense reasoning abilities support more effective personalization.

- User Interaction: LLMs enable dynamic, conversational interaction that adapts to user input in real time, offering a more natural interface.

- Pipeline Control: LLMs can act as a controller that orchestrates the recommendation process, using their reasoning and decision-making capabilities to decide, for instance, which components or tools to invoke and in what order.
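To make the scoring/ranking role concrete, here is a minimal Python sketch of one common pattern: phrase a user-item pair as a yes/no question, then turn the LLM's logits for the "Yes" and "No" answer tokens into a preference probability. This is an illustration under stated assumptions, not the survey's method: the prompt template and the names `build_scoring_prompt`, `preference_score`, and `rank_candidates` are hypothetical, and the sketch assumes the model exposes next-token logits for the two answer tokens. The LLM call itself is omitted, and the logits in the usage section are placeholders.

```python
import math


def build_scoring_prompt(history: list[str], candidate: str) -> str:
    """Turn a user's recent items and one candidate into a yes/no question.

    Hypothetical template for illustration; not a prompt taken from the survey.
    """
    items = "\n".join(f"- {title}" for title in history)
    return (
        "The user recently interacted with these items:\n"
        f"{items}\n"
        f'Will the user like "{candidate}"? Answer Yes or No.\n'
        "Answer:"
    )


def preference_score(yes_logit: float, no_logit: float) -> float:
    """Softmax over the "Yes" and "No" logits, returning P(Yes).

    Mathematically equal to sigmoid(yes_logit - no_logit); the branch avoids
    overflow in math.exp for large logit gaps.
    """
    diff = yes_logit - no_logit
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    e = math.exp(diff)
    return e / (1.0 + e)


def rank_candidates(scores: dict[str, float]) -> list[str]:
    """Return candidate names sorted by descending preference score."""
    return sorted(scores, key=lambda name: scores[name], reverse=True)


if __name__ == "__main__":
    prompt = build_scoring_prompt(["The Matrix", "Blade Runner"], "Ghost in the Shell")
    print(prompt)
    # Placeholder logits; in practice they would come from an LLM forward pass on each prompt.
    scores = {
        "Ghost in the Shell": preference_score(2.0, -1.0),
        "Notting Hill": preference_score(-0.5, 1.5),
    }
    print(rank_candidates(scores))  # ['Ghost in the Shell', 'Notting Hill']
```

Restricting the softmax to the two answer tokens keeps the score in [0, 1] regardless of how much probability mass the model places on other tokens, which makes it usable as a pointwise ranking score.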

Orthogonally to these stages, the survey classifies how LLMs are adapted along two dimensions: whether the LLM is fine-tuned during training, and whether a conventional recommendation model (CRM) is involved at inference time. Crossing these two dimensions yields four quadrants, which helps researchers see how a given approach fits into existing systems and what trade-offs it makes.
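The two "how" dimensions form a simple 2×2 grid. The Python sketch below encodes it as a small data structure; the class and function names are hypothetical, the quadrant labels are descriptive rather than the survey's own numbering, and the example system in the usage section is invented for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class AdaptationSetup:
    """One point on the survey's two 'how' dimensions."""

    tune_llm: bool        # Is the LLM fine-tuned during training?
    infer_with_crm: bool  # Is a conventional recommendation model (CRM) used at inference?


def quadrant_label(setup: AdaptationSetup) -> str:
    """Describe which of the four quadrants a setup falls into."""
    training = "tune LLM" if setup.tune_llm else "not tune LLM"
    inference = "infer with CRM" if setup.infer_with_crm else "infer without CRM"
    return f"{training}; {inference}"


# Enumerate all four combinations of the two binary dimensions.
ALL_QUADRANTS = [
    quadrant_label(AdaptationSetup(tune, crm))
    for tune, crm in product([True, False], repeat=2)
]

if __name__ == "__main__":
    # Hypothetical system: a frozen LLM writes item descriptions that a CTR model consumes.
    frozen_llm_feeding_ctr_model = AdaptationSetup(tune_llm=False, infer_with_crm=True)
    print(quadrant_label(frozen_llm_feeding_ctr_model))  # not tune LLM; infer with CRM
    print(len(ALL_QUADRANTS))  # 4
```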

Implications and Challenges

Integrating LLMs with recommender systems promises substantial improvements in both personalized user experience and overall recommendation quality. By drawing on the open-world knowledge and reasoning abilities of LLMs, recommender systems can better address existing challenges such as information overload and limited domain adaptation, although, as discussed below, often at a cost in efficiency.

However, the paper also outlines several challenges faced when adapting LLMs to RS contexts:

- Efficiency: Training and deploying LLMs carries significant computational overhead, and inference latency is a particular concern for online recommendation. Strategies such as parameter-efficient fine-tuning and precomputing LLM outputs offline are needed to keep integration practical.

- Effectiveness: Although LLMs offer strong representation capabilities, two open questions remain: modeling long user behavior sequences within a limited context window, and integrating the ID features (user and item identifiers) on which conventional recommendation models rely.

- Ethics and Fairness: Adopting LLMs raises ethical issues, including biases inherited from training corpora and a lack of transparency in how recommendations are made, which calls for robust fairness measures and explainability methods.
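To see why parameter-efficient fine-tuning (PEFT) eases the efficiency problem, consider LoRA (low-rank adaptation), used here as an assumed example of a PEFT method. LoRA freezes a pretrained weight matrix W of shape d × k and learns an update BA, where B is d × r and A is r × k, with r much smaller than d and k. The sketch below counts trainable parameters for a single matrix; the 4096 × 4096 size and rank 8 are assumed example values, not numbers from the survey.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when every entry of a d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update W + B @ A (B: d x r, A: r x k)."""
    if r <= 0 or r > min(d, k):
        raise ValueError("rank r must satisfy 0 < r <= min(d, k)")
    return r * (d + k)


if __name__ == "__main__":
    d = k = 4096  # example projection size (assumption)
    r = 8         # example LoRA rank (assumption)
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(full, lora, full // lora)  # 16777216 65536 256
```

Because the LoRA parameter count grows with d + k rather than with d × k, the relative savings increase as the weight matrices get larger.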

Conclusion and Future Directions

The paper successfully identifies the convergence of LLMs and RS as a promising research area. Its methodological insights and detailed analysis provide clear pathways for future work, encouraging exploration of custom LLM architectures tailored to specific recommendation contexts and the development of benchmarks that systematically measure integration outcomes.

In conclusion, the work by Lin et al. offers a valuable reference point for researchers and practitioners aiming to harness LLMs within recommender systems, and points toward systems whose capabilities go beyond those of current recommenders. Future work in this area should balance the strengths of LLMs against their computational cost while ensuring ethical use, laying the foundation for more adaptive, intelligent, and user-centric recommendation applications.