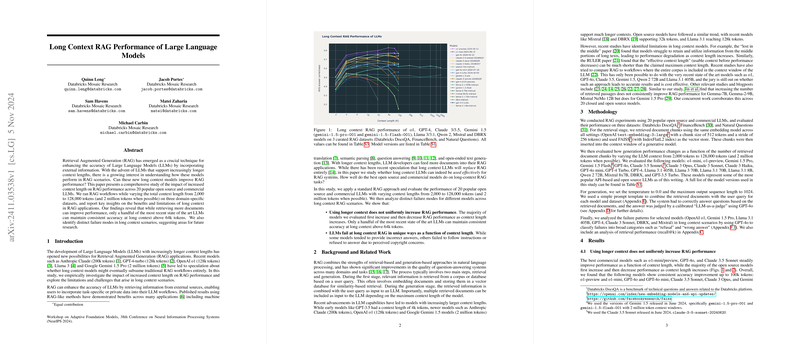

The paper investigates how increasing context length affects Retrieval Augmented Generation (RAG) performance in LLMs. It systematically evaluates 20 LLMs, both proprietary and open source, across context sizes from 2,000 to 128,000 tokens, with select experiments extending to 2 million tokens. Three datasets are used for benchmarking: a technical Q&A set (Databricks DocsQA), a financial information benchmark (FinanceBench), and an open-domain question-answering set (Natural Questions).

Methodology and Experimental Setup

- A standard RAG pipeline is used: retrieval relies on a fixed embedding model (OpenAI text-embedding-3-large) combined with a FAISS index. Retrieved document chunks (fixed at 512 tokens with an overlapping stride) are concatenated with the user query to generate responses.

- Inference is performed under deterministic conditions (temperature set to 0.0) with a fixed output length (1024 tokens) using a unified prompt template which instructs the model to answer based solely on the provided context.

- The system performance is assessed not only in terms of answer accuracy but also through a detailed failure analysis. Multiple failure modes are categorized (e.g., refusal, wrong answer, repeated content, failure to follow instructions) using an LLM-based judgment process calibrated to human labelers.
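
The chunking and context-assembly steps described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: it treats whitespace-separated words as tokens, assumes a stride of 256 (the source gives only the 512-token chunk size), and uses a hypothetical prompt template and the hypothetical helper names `chunk_tokens` and `build_prompt`.

```python
from typing import List


def chunk_tokens(tokens: List[str], size: int = 512, stride: int = 256) -> List[List[str]]:
    """Split tokens into windows of `size` tokens, starting a new window every `stride` tokens.

    The stride value is an assumption; with stride < size, consecutive chunks overlap.
    """
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), stride):
        chunks.append(tokens[start:start + size])
        # Stop once the current window has reached the end of the document.
        if start + size >= len(tokens):
            break
    return chunks


def build_prompt(query: str, ranked_chunks: List[List[str]], budget: int) -> str:
    """Concatenate the highest-ranked chunks until the token budget would be exceeded.

    `ranked_chunks` is assumed to be sorted by retrieval score, best first.
    The template text is hypothetical, not the one used in the paper.
    """
    selected: List[str] = []
    used = 0
    for chunk in ranked_chunks:
        if used + len(chunk) > budget:
            break
        selected.append(" ".join(chunk))
        used += len(chunk)
    context = "\n\n".join(selected)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

Varying `budget` (for example from 2,000 up to 128,000) is one way to reproduce the kind of context-length sweep the paper describes, with the retriever and prompt held fixed.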

Key Findings

- Non-Monotonic Performance Gains: Certain state-of-the-art commercial models (specific variants of OpenAI’s o1 series, GPT-4o, and Claude 3.5 Sonnet) improve monotonically in answer correctness as context grows (up to 100k tokens), whereas most open source models improve at first and then degrade. Many open source systems reach peak accuracy between approximately 16k and 32k tokens.

- Distinct Failure Modes: The analysis reveals that failure modes vary significantly with context length and across models. For instance:

  - Some models, like Claude 3 Sonnet, increasingly refuse to answer queries due to issues tied to copyright safeguards.

  - Others, such as Gemini 1.5 Pro, maintain stable performance even at extreme context sizes (up to 2 million tokens) but become more prone to task failures under strict safety filters.

  - Open source systems, for example Llama 3.1 405B and Mixtral-8x7B, show degradation via repeated or random content generation and, in some cases, deviate from the instruction by summarizing rather than directly answering the query.

- Effectiveness of Long Context Integration: The paper demonstrates that increased context length can indeed provide additional relevant information, as verified through recall metrics (recall@k), but this does not translate uniformly into end-to-end RAG performance improvements. The practical benefits of longer contexts are largely model-dependent, and only a limited subset of models leverage very long contexts without incurring accuracy penalties.

- Cost Considerations: An analysis of input token costs reveals that leveraging long contexts is considerably more expensive than traditional retrieval methods. For example, per-query costs can vary dramatically across models (e.g., GPT-4o versus o1-preview) and escalate significantly when scaling up to multi-million token contexts. This economic aspect underlines a trade-off between simplified system architecture (directly ingesting entire datasets) and computational efficiency.
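
Two of the quantities mentioned above, recall@k and per-query input cost, can be made concrete with a short sketch. The definitions below are common conventions and may differ in detail from the paper's; the function names are hypothetical, and the price argument is a placeholder to be filled in from a provider's current pricing, not a figure from the source.

```python
from typing import Sequence, Set


def recall_at_k(retrieved_ids: Sequence[str], relevant_ids: Set[str], k: int) -> float:
    """Fraction of the relevant chunks that appear among the top-k retrieved chunks."""
    if not relevant_ids:
        return 0.0
    top_k = set(retrieved_ids[:k])
    return len(top_k & relevant_ids) / len(relevant_ids)


def input_cost_per_query(context_tokens: int, price_per_million_tokens: float) -> float:
    """Input-token cost of one query, given a (placeholder) price per million input tokens."""
    return context_tokens / 1_000_000 * price_per_million_tokens
```

Because input cost scales linearly with context size under this simple model, moving from a 128,000-token context to a 2 million token context multiplies the per-query input cost by roughly 15.6, whatever the unit price. Higher recall@k at larger k shows that the relevant material is present in the context, but, as noted above, it does not guarantee that the model uses it correctly.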

Implications for Future Research

- The heterogeneous performance across LLMs suggests that long context capability remains an active area of investigation, particularly for open source models, which lag behind commercial counterparts in stable long context performance.

- The distinct failure modes identified—ranging from safety filtering-induced task failures to divergent behaviors in following prompt instructions—indicate the need for tailored training and alignment strategies that specifically address long context challenges.

- The paper also raises the possibility that, for corpora small enough to fit within the extended context limits of some modern LLMs, traditional retrieval steps could be bypassed entirely by ingesting the whole dataset. However, this would require balancing computational cost against potential degradation in task-specific performance.

Overall, the paper provides a comprehensive empirical evaluation that quantifies the trade-offs involved in extending context lengths for RAG systems, highlighting both the promise and the limitations of long context LLMs for various application domains.