Inference Scaling for Long-Context Retrieval Augmented Generation

The paper "Inference Scaling for Long-Context Retrieval Augmented Generation" studies how to spend additional test-time computation to improve retrieval augmented generation (RAG) with long-context large language models (LLMs). By investigating inference scaling strategies, the authors aim to improve how LLMs handle knowledge-intensive tasks that require processing large amounts of retrieved context.

Core Contributions

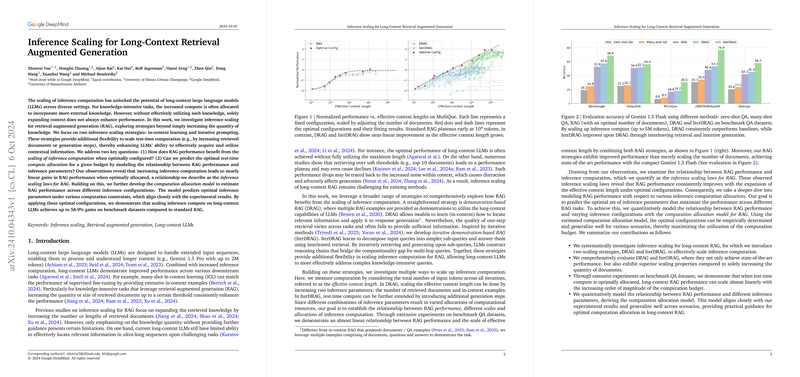

The paper focuses on two inference scaling strategies: demonstration-based RAG (DRAG) and iterative demonstration-based RAG (IterDRAG). Both let test-time computation be scaled flexibly. DRAG scales it by increasing the number of retrieved documents and in-context examples. IterDRAG can additionally introduce multiple generation steps. The paper analyzes how these strategies affect performance across several benchmark datasets and models the relationship between RAG performance and the configuration of inference parameters.
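To make these test-time knobs concrete, here is one way a DRAG/IterDRAG-style loop could track effective context length. In this sketch, effective context length means the total number of input tokens summed over every LLM call needed to answer a query. This is a minimal illustration under stated assumptions, not the authors' implementation. The `Retriever` and `LLM` callables, the `build_prompt` layout, the `Follow up:` convention, and the whitespace token count are all hypothetical simplifications. The toy stubs exist only so the code runs.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces (not from the paper): a retriever maps (query, k) to k
# document strings, and an LLM maps a prompt string to a completion string.
Retriever = Callable[[str, int], List[str]]
LLM = Callable[[str], str]


def count_tokens(text: str) -> int:
    # Crude whitespace "tokenizer"; a real system would use the model's tokenizer.
    return len(text.split())


def build_prompt(shots: List[str], docs: List[str], question: str,
                 trace: List[str]) -> str:
    # Assumed layout: in-context examples, then retrieved documents, then the
    # query and any follow-up sub-queries generated so far.
    parts = [f"Example:\n{s}" for s in shots]
    parts += [f"Document: {d}" for d in docs]
    parts.append(f"Question: {question}")
    parts += trace
    return "\n\n".join(parts)


def iter_drag(question: str, retrieve: Retriever, llm: LLM, shots: List[str],
              k: int, max_steps: int) -> Tuple[str, int]:
    """Return (answer, effective_context_length).

    max_steps == 1 reduces to a single DRAG call; larger values let the model
    emit "Follow up:" sub-queries that trigger extra retrieval.
    """
    if max_steps < 1:
        raise ValueError("max_steps must be at least 1")
    docs = retrieve(question, k)
    trace: List[str] = []
    effective_len = 0  # total input tokens summed over every LLM call
    for step in range(max_steps):
        is_last = step == max_steps - 1
        prompt = build_prompt(shots, docs, question, trace)
        if is_last:
            prompt += "\nSo the final answer is:"  # force an answer on the last step
        effective_len += count_tokens(prompt)
        output = llm(prompt).strip()
        if not is_last and output.startswith("Follow up:"):
            sub_query = output[len("Follow up:"):].strip()
            docs = docs + retrieve(sub_query, k)  # more context for the sub-query
            trace.append(output)
            continue
        return output, effective_len
    raise AssertionError("unreachable: the last step always returns")


# Toy stand-ins so the sketch runs end to end.
def toy_retrieve(query: str, k: int) -> List[str]:
    return [f"passage {i} about {query}" for i in range(k)]


def toy_llm(prompt: str) -> str:
    # Ask one follow-up question, then answer.
    if "Follow up:" in prompt or "final answer" in prompt:
        return "Paris"
    return "Follow up: Which city is the capital of France?"


if __name__ == "__main__":
    shots = ["Q: Capital of Italy? A: Rome"]
    for steps in (1, 3):
        answer, eff = iter_drag("Where is the Louvre?", toy_retrieve, toy_llm,
                                shots, k=2, max_steps=steps)
        print(steps, answer, eff)
```

With `max_steps=1` the loop makes exactly one call, which corresponds to DRAG. Raising `max_steps` lets the model spend extra calls on sub-queries, and each extra call adds to the effective context length.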

Key contributions include:

- Demonstration-Based RAG: DRAG exploits the long-context capabilities of LLMs by placing both a large number of retrieved documents and in-context examples in the input. The model can then use this expanded context to generate an answer in a single inference step.

- Iterative Demonstration-Based RAG: IterDRAG decomposes complex queries into simpler sub-queries and iteratively retrieves additional context for them, which strengthens the LLM's knowledge retrieval and reasoning. Because it spreads the work over multiple inference steps, it targets compositional (multi-hop) reasoning tasks that a single DRAG step may not handle well.

- Inference Scaling Laws: The authors propose inference scaling laws for RAG. These show that, when inference parameters are configured optimally, RAG performance improves nearly linearly with the order of magnitude of the effective context length. This relationship makes it possible to predict how much performance additional test-time computation will buy and to scale computational resources accordingly.

- Computation Allocation Model: The paper introduces a computation allocation model that estimates the optimal inference parameters (such as the number of documents, in-context examples, and iterations) for a given computation budget. It guides how test-time resources should be allocated to maximize RAG performance.
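The computation allocation idea can be illustrated with a simplified stand-in. First, fit a model that predicts performance from the logarithms of the inference parameters. Then pick the highest-scoring configuration whose estimated cost fits the budget. This is not the paper's exact formulation. The log-linear form, the `cost` function with its per-document and per-shot token counts, the parameter grids, and the synthetic observations are all hypothetical assumptions chosen for illustration.

```python
import itertools
import math
from typing import Dict, List, Sequence, Tuple

Config = Tuple[int, int, int]  # (k retrieved docs, m in-context shots, n iterations)

# Hypothetical grids and "true" coefficients used only to make synthetic data.
GRID_K = (1, 2, 4, 8, 16, 32)
GRID_M = (0, 1, 2, 4)
GRID_N = (1, 2, 3)
TRUE_W = [0.2, 0.05, 0.02, 0.03]


def features(cfg: Config) -> List[float]:
    k, m, n = cfg
    # Intercept plus log of each knob; log1p keeps m = 0 (zero-shot) defined.
    return [1.0, math.log(k), math.log1p(m), math.log(n)]


def predict(w: Sequence[float], cfg: Config) -> float:
    return sum(wi * fi for wi, fi in zip(w, features(cfg)))


def cost(cfg: Config, doc_tokens: int = 1000, shot_tokens: int = 2000) -> int:
    # Hypothetical cost model: each of the n calls reads k docs and m shots.
    k, m, n = cfg
    return n * (k * doc_tokens + m * shot_tokens)


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    # Gauss-Jordan elimination with partial pivoting for a small square system.
    size = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(size):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][size] / aug[i][i] for i in range(size)]


def fit(observations: Dict[Config, float]) -> List[float]:
    # Ordinary least squares via the normal equations (X^T X) w = X^T y.
    xs = [features(c) for c in observations]
    ys = [observations[c] for c in observations]
    d = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(d)] for i in range(d)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(d)]
    return solve(xtx, xty)


def allocate(w: Sequence[float], budget: int, ks=GRID_K, ms=GRID_M,
             ns=GRID_N) -> Config:
    # Choose the feasible configuration with the highest predicted score.
    feasible = [c for c in itertools.product(ks, ms, ns) if cost(c) <= budget]
    if not feasible:
        raise ValueError("no configuration fits within the budget")
    return max(feasible, key=lambda c: predict(w, c))


def synthetic_observations() -> Dict[Config, float]:
    # Noise-free synthetic scores, NOT measurements from the paper.
    return {c: predict(TRUE_W, c)
            for c in itertools.product(GRID_K, GRID_M, GRID_N)}


if __name__ == "__main__":
    w = fit(synthetic_observations())
    for budget in (8_000, 32_000, 128_000):
        best = allocate(w, budget)
        print(budget, best, round(predict(w, best), 3))
```

The synthetic scores are generated from a known log-linear formula, so the fit recovers its coefficients exactly. With real measurements, one would instead fit on a subset of configurations and check the predictions on held-out budgets.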

Experimental Outcomes

Empirical results show that DRAG and IterDRAG substantially outperform baselines such as zero-shot and many-shot question answering, particularly as the effective context length grows. IterDRAG performs best at the largest effective context lengths. Overall, scaling inference computation achieves gains of up to 58.9% on benchmark datasets compared to standard RAG when the computation budget is expanded to five million tokens.

Implications and Future Directions

The findings have both theoretical and practical implications. The inference scaling laws offer a framework for understanding and predicting LLM behavior on long-context RAG tasks. The computation allocation model gives actionable guidance on how to spend test-time computation to achieve better results. Future work may explore more sophisticated retrieval methods, better filtering of retrieved documents by relevance, and the intrinsic limitations of long-context LLMs' modeling capabilities. Dynamic retrieval approaches could also further improve inference efficiency and accuracy.

This paper advances the state of the art in retrieval augmented generation by offering coherent strategies and a systematic framework for scaling inference in long-context LLM applications. Through a systematic exploration of inference trade-offs, it sets the stage for more efficient and effective AI systems in knowledge-intensive domains.