Overview of "TokenSelect: Efficient Long-Context Inference and Length Extrapolation for LLMs via Dynamic Token-Level KV Cache Selection"

The paper "TokenSelect: Efficient Long-Context Inference and Length Extrapolation for LLMs via Dynamic Token-Level KV Cache Selection" presents an approach to long-context inference in large language models (LLMs). It targets two obstacles: performance degradation on sequences longer than those seen during training, and the high computational cost of attention, which grows quadratically with sequence length.

Key Contributions

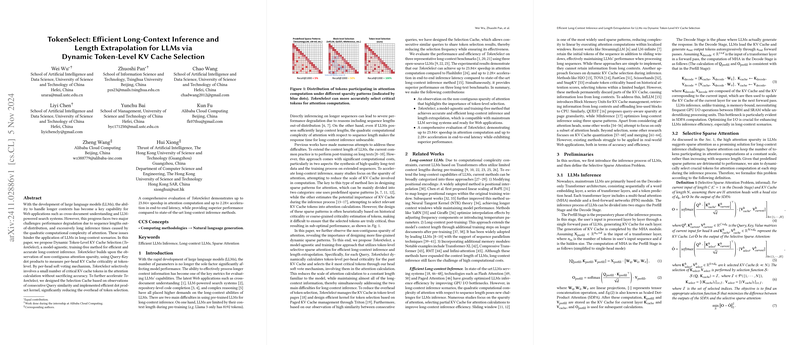

The authors introduce TokenSelect, a method that improves the efficiency and accuracy of long-context inference without requiring additional training or model-specific adaptations. TokenSelect selects Key-Value (KV) cache entries at the level of individual tokens, evaluating token importance dynamically for each query, in contrast to block-level selection or fixed sparse attention patterns.

The paper's main contributions are:

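- Dynamic Token-Level Selection: TokenSelect scores the importance of each cached token using Query-Key dot products computed separately for each attention head, and uses these per-head scores to select the tokens that enter the attention computation, so that critical long-context information is retained.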

- Selection Cache: Consecutive queries tend to be similar, so this component caches selection results and reuses them across similar queries, saving the cost of repeated selection.

- Efficient Implementation: Building on the Paged Attention concept, the authors implement an efficient kernel for TokenSelect that mitigates the I/O bottlenecks that often limit inference speed.
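
The first two contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the names `select_tokens`, `SelectionCache`, and `threshold` are hypothetical, each head independently keeping its top-k tokens is a simplifying assumption, and measuring query similarity as cosine similarity over the concatenated per-head queries is likewise an assumption. The paged kernel is not reproduced here.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    denom = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / denom if denom else 0.0


def select_tokens(query_heads, key_heads, k):
    """For each head, return the (sorted) indices of the k cached tokens
    whose keys have the largest dot product with that head's query.

    Assumption: heads select independently; this is a simplification.
    """
    selected = []
    for q, keys in zip(query_heads, key_heads):
        scores = [dot(q, key) for key in keys]
        top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
        selected.append(sorted(top))
    return selected


class SelectionCache:
    """Reuse the previous selection while the query stays similar.

    `threshold` is a hypothetical cosine-similarity cutoff.
    """

    def __init__(self, threshold=0.9):
        self.threshold = threshold
        self.last_query = None
        self.last_selection = None
        self.hits = 0

    def lookup(self, query_heads, key_heads, k):
        flat = [x for q in query_heads for x in q]
        if self.last_query is not None and cosine(flat, self.last_query) >= self.threshold:
            self.hits += 1  # similar query: skip re-selection
            return self.last_selection
        # Dissimilar (or first) query: recompute and remember the selection.
        self.last_query = flat
        self.last_selection = select_tokens(query_heads, key_heads, k)
        return self.last_selection


# Toy KV cache: 2 heads, 4 cached tokens, key dimension 2.
keys = [
    [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]],  # head 0
    [[0.0, 1.0], [1.0, 0.0], [0.1, 0.9], [0.0, -1.0]],  # head 1
]
cache = SelectionCache(threshold=0.9)
first = cache.lookup([[1.0, 0.0], [0.0, 1.0]], keys, k=2)     # computed
second = cache.lookup([[0.98, 0.05], [0.02, 1.0]], keys, k=2)  # reused
third = cache.lookup([[0.0, 1.0], [1.0, 0.0]], keys, k=2)      # recomputed
print(first, second is first, third, cache.hits)
```

In this sketch the second query is nearly parallel to the first, so the cached selection is returned without rescoring any keys, while the third query differs enough to trigger a fresh selection. A real decoder would also need to account for tokens appended to the KV cache between steps, which this toy example leaves out.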

Experimental Results and Implications

The authors evaluate TokenSelect on the InfiniteBench, RULER, and LongBench benchmarks using several mainstream LLMs, including Qwen2-7B-Instruct, Llama-3-8B-Instruct, and Yi-1.5-6B-Chat. The results demonstrate that TokenSelect:

- Achieves up to a 23.84× speedup in attention computation relative to the FlashInfer library.

- Reduces end-to-end latency by up to 2.28× compared to state-of-the-art long-context inference methods while maintaining or improving accuracy.

- Delivers these gains without lengthy post-training, preserving the model's original capabilities even when extrapolating to longer contexts.

These improvements illustrate TokenSelect's potential for improving inference efficiency in large-scale web applications, where prompt response times and the ability to handle extended sequences are critical.

Future Prospects

TokenSelect's framework for token-level dynamic selection opens several avenues for future research:

- Exploring further integration with memory-efficient architectures could inform new design paradigms in Transformer models.

- Adapting the Selective Sparse Attention framework to other domains that require efficient processing of extensive data, such as continuous monitoring or streaming applications, could broaden its applicability.

- Investigating the scalability of TokenSelect in the context of model distillation or transfer learning could provide insights into improving efficiency across varied deployment contexts.

In conclusion, by combining dynamic token-level selection with an efficient implementation, TokenSelect improves the ability of LLMs to handle long contexts, with implications for natural language processing in computationally constrained environments.