NaCl: A General and Effective KV Cache Eviction Framework for LLMs at Inference Time

Rapid advances in large language models (LLMs) have enabled a host of promising applications, yet the memory these models consume, especially during inference, poses significant challenges. The paper "NaCl: A General and Effective KV Cache Eviction Framework for LLMs at Inference Time" presents an approach to reducing the memory consumed by the key-value (KV) cache, addressing both theoretical and practical aspects of long-context modeling.

Introduction and Problem Setting

Deploying LLMs requires substantial computational resources, largely because of the memory overhead of the KV cache. For models such as the LLaMA and GPT series, the KV cache grows linearly with context length, which makes long contexts impractical on fixed hardware. For instance, a 7-billion-parameter model serving a batch of four 32k-token sequences needs roughly 64GB of KV cache at 16-bit precision, outstripping the capacity of many modern GPUs.
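The 64GB figure can be checked with quick arithmetic. The sketch below assumes a LLaMA2-7B-like shape (32 layers, 32 attention heads of dimension 128, no grouped-query attention) and 16-bit cache entries; the function name `kv_cache_bytes` is illustrative. Under those assumptions a single 32k-token sequence needs 16 GiB, so 64 GiB corresponds to a batch of four such sequences.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_value=2):
    """Bytes needed to cache one key and one value vector per layer, head, and token."""
    # Factor of 2 accounts for storing both the key and the value.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len * batch_size


GIB = 1024 ** 3

# Assumed LLaMA2-7B-like configuration with 16-bit (2-byte) cache entries.
one_seq = kv_cache_bytes(32, 32, 128, seq_len=32 * 1024, batch_size=1)
four_seq = kv_cache_bytes(32, 32, 128, seq_len=32 * 1024, batch_size=4)

print(one_seq / GIB, four_seq / GIB)  # 16.0 64.0
```

Because the cost is linear in both sequence length and batch size, evicting a fixed fraction of cached tokens reduces the footprint by the same fraction.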

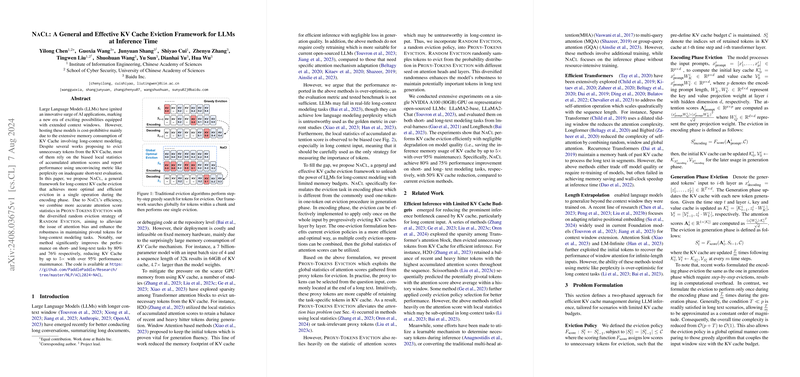

Existing approaches to alleviate KV cache memory pressure, such as H2O and Scissorhands, typically rely on local statistics of accumulated attention scores. However, these methods often lack robustness and fail to generalize across different tasks and text lengths. They predominantly report performance using perplexity on short-text data, which may not translate to real-world long-context scenarios. NaCl offers an alternative by combining more accurate attention statistics with a diversified eviction strategy.

The NaCl Framework

NaCl introduces a general framework for efficient KV cache eviction performed during the encoding phase. The framework targets long-context modeling tasks and combines two complementary strategies: Proxy-Tokens Eviction and Random Eviction.

Proxy-Tokens Eviction

Proxy-Tokens Eviction leverages global statistics of attention scores obtained from a selected set of proxy tokens. These tokens, typically located towards the end of the input, serve as a reliable reference for estimating the importance of other tokens. The method aims to mitigate the attention bias observed in local statistics-based solutions by considering the global context of text sequences.
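As a rough illustration of the idea, the sketch below scores every cached token by the total attention it receives from the last few query positions and keeps the highest-scoring ones. It is a simplified single-head version under stated assumptions: the attention matrix `attn`, the choice of the last `num_proxy` rows as proxy tokens, the summation used as the score, and the function names are all illustrative, and the paper's exact scoring and per-head handling may differ.

```python
def proxy_token_scores(attn, num_proxy):
    """Sum the attention each key position receives from the last `num_proxy` queries.

    attn[q][k] is the attention weight that query position q places on key position k.
    """
    seq_len = len(attn)
    proxy_rows = attn[seq_len - num_proxy:]
    return [sum(row[k] for row in proxy_rows) for k in range(seq_len)]


def keep_by_proxy_scores(attn, num_proxy, budget):
    """Return the sorted indices of the `budget` keys with the highest proxy scores."""
    scores = proxy_token_scores(attn, num_proxy)
    ranked = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    return sorted(ranked[:budget])


# Toy causal attention matrix for a 5-token input (each row sums to 1).
attn = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.6, 0.4, 0.0, 0.0, 0.0],
    [0.5, 0.1, 0.4, 0.0, 0.0],
    [0.1, 0.6, 0.1, 0.2, 0.0],
    [0.2, 0.5, 0.05, 0.05, 0.2],
]

# Use the last two tokens as proxies and keep three of the five cache entries.
kept = keep_by_proxy_scores(attn, num_proxy=2, budget=3)
print(kept)  # [0, 1, 3]
```

Token 2 receives substantial attention from its own position but little from the proxy tokens, so it is evicted here; a score accumulated over every query position would have ranked it higher.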

Random Eviction

To complement Proxy-Tokens Eviction, NaCl incorporates Random Eviction, which samples the tokens to retain from a probability distribution derived from the proxy-token statistics. The added randomness improves robustness by keeping a more diverse set of potentially important tokens, even in long-context scenarios.
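One way the two strategies could be combined is sketched below: part of the cache budget goes to the top-scoring tokens, and the remainder is filled by sampling without replacement from the other tokens, with probability proportional to their scores. The split `random_fraction`, the small weight floor, the example scores, and the function names are assumptions made for illustration, not details taken from the paper.

```python
import random


def sample_without_replacement(candidates, weights, k, rng):
    """Draw up to k distinct candidates, each draw proportional to the remaining weights."""
    candidates, weights = list(candidates), list(weights)
    picked = []
    for _ in range(min(k, len(candidates))):
        i = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        picked.append(candidates.pop(i))
        weights.pop(i)
    return picked


def hybrid_keep(scores, budget, random_fraction=0.5, seed=0):
    """Keep top-scoring tokens for part of the budget and sample the rest at random."""
    rng = random.Random(seed)
    n_random = int(budget * random_fraction)  # assumed split, not from the paper
    n_top = budget - n_random
    ranked = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    top, rest = ranked[:n_top], ranked[n_top:]
    # A tiny floor keeps zero-score tokens samplable and the weights strictly positive.
    weights = [max(scores[k], 0.0) + 1e-12 for k in rest]
    return sorted(top + sample_without_replacement(rest, weights, n_random, rng))


# Hypothetical proxy-token scores for an 8-token input.
scores = [0.30, 1.10, 0.15, 0.25, 0.20, 0.05, 0.40, 0.10]
kept = hybrid_keep(scores, budget=4, random_fraction=0.5, seed=0)
print(kept)
```

With these settings tokens 1 and 6 are always retained because they have the two highest scores, while the other two slots vary with the seed, so a token with a modest score still has a chance of surviving.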

Experimental Results

Experiments were conducted using LLaMA2 models on both short- and long-text tasks to evaluate NaCl's efficacy. The results showcased the following key findings:

- Short-Text Tasks: On benchmarks such as PiQA, COPA, and ARC-E, NaCl achieved an average accuracy close to that of the full cache, showing minimal degradation even with a considerable KV cache reduction. Specifically, NaCl retained 95% of full-cache performance while reducing the KV cache footprint by up to 5×.

- Long-Text Tasks: In tasks like NarrativeQA and TriviaQA, NaCl outperformed traditional methods, demonstrating stable and superior accuracy. Notably, the framework retained crucial context information, thereby enhancing comprehension and generation capabilities for extended sequences. NaCl achieved up to 80% improvement over baseline methods while maintaining a significant reduction in memory usage.

Implications and Future Directions

NaCl's hybrid eviction policy is a significant advance for deploying LLMs efficiently, particularly in memory-constrained environments. By reducing KV cache memory usage, NaCl makes LLMs practical in long-context settings with little loss in performance.

The implications of NaCl extend to various domains, including real-time conversational agents, large-scale document summarization, and repository-level code analysis. The ability to handle long texts with limited memory could spur innovation in these areas, potentially leading to more efficient and accessible AI-driven solutions.

Conclusion

NaCl marks a meaningful step forward in optimizing KV cache management for LLMs at inference time. By integrating Proxy-Tokens Eviction and Random Eviction, the framework balances efficiency and robustness, yielding significant memory savings and stable performance across diverse tasks. Future work could explore adaptive proxy-token selection mechanisms and tighter integration with advanced attention mechanisms to push the boundaries of long-context modeling in LLMs. The available code and the comprehensive evaluation methodology further support the practicality and effectiveness of the approach.