Evaluating LLMs in Cognitive Behavior Therapy with CBT-Bench

The paper "CBT-Bench: Evaluating LLMs on Assisting Cognitive Behavior Therapy" presents a new benchmark for assessing how well large language models (LLMs) can support psychotherapy, specifically Cognitive Behavior Therapy (CBT). CBT-Bench systematically evaluates LLMs on a range of CBT-related tasks, from recalling basic knowledge to generating therapist-style responses. In doing so, it exposes both what current models can do and where they fall short in this high-stakes domain.

Key Contributions

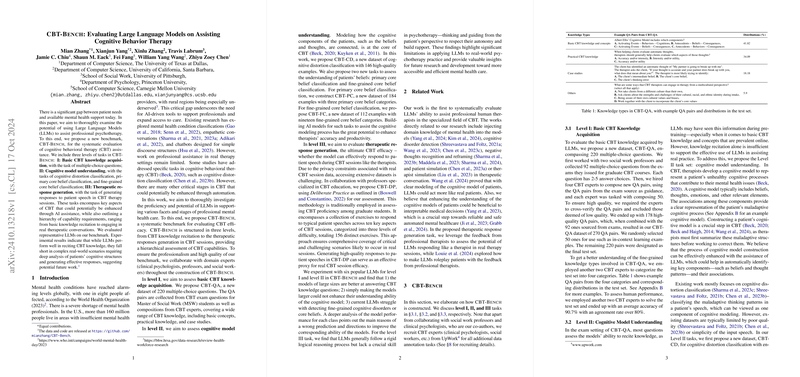

CBT-Bench comprises three hierarchical levels of tasks:

- Level I, Basic CBT Knowledge Acquisition: The authors introduce CBT-QA, a dataset of 220 multiple-choice questions that evaluates foundational CBT knowledge. This level tests a model's ability to recite information ranging from basic concepts to practical knowledge. Larger models such as Llama-3.1-405B perform better, achieving accuracy comparable to that of human experts.

- Level II, Cognitive Model Understanding: This level involves more complex tasks: cognitive distortion classification (CBT-CD), primary core belief classification (CBT-PC), and fine-grained core belief classification (CBT-FC). The analysis shows that although LLMs handle basic knowledge well, they struggle with nuanced tasks such as identifying cognitive distortions and fine-grained core beliefs, which require deep understanding and interpretation of what a patient says.

- Level III, Therapeutic Response Generation: CBT-DP uses deliberate practice exercises to simulate real therapy scenarios, and models are evaluated on their ability to generate responses like those of professional therapists. The results reveal significant gaps between model-generated and human-crafted responses; LLMs often lack the empathy and nuanced reasoning that therapy requires.
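To make the Level I and Level II scoring more concrete, here is a minimal Python sketch of how model predictions could be scored: plain accuracy for CBT-QA-style multiple-choice answers, and micro-averaged F1 for the classification tasks. These tasks are treated here as multi-label problems, on the assumption that a single patient statement may exhibit several distortions or core beliefs. The function names, the toy label strings, and the choice of micro-F1 are illustrative assumptions, not the paper's evaluation code; the metrics the authors actually report may differ.

```python
from typing import Sequence, Set


def mcq_accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of multiple-choice items answered correctly (CBT-QA-style)."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        return 0.0
    # Compare option letters case-insensitively, ignoring surrounding whitespace.
    correct = sum(p.strip().upper() == g.strip().upper() for p, g in zip(predictions, gold))
    return correct / len(gold)


def micro_f1(predicted: Sequence[Set[str]], gold: Sequence[Set[str]]) -> float:
    """Micro-averaged F1 for multi-label classification (assumed metric, for illustration)."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    tp = fp = fn = 0
    for pred_labels, gold_labels in zip(predicted, gold):
        tp += len(pred_labels & gold_labels)  # labels correctly predicted
        fp += len(pred_labels - gold_labels)  # labels predicted but not annotated
        fn += len(gold_labels - pred_labels)  # annotated labels the model missed
    if tp == 0:
        return 0.0  # convention: no correct labels means F1 = 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical toy data; not drawn from CBT-Bench.
    print(mcq_accuracy(["A", "c", "B", "D"], ["A", "C", "D", "D"]))  # 0.75
    preds = [{"catastrophizing", "labeling"}, {"mind reading"}]
    golds = [{"catastrophizing"}, {"mind reading", "personalization"}]
    print(round(micro_f1(preds, golds), 4))  # 0.6667
```

Micro-averaging pools true positives, false positives, and false negatives across all examples, so frequent labels dominate the score. Macro-averaging (computing F1 per label and then averaging) would instead weight rare labels equally, which could matter for a fine-grained task like CBT-FC.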

Experimental Findings

- Smaller LLMs perform similarly to larger ones on tasks that require contextual reasoning, such as understanding a patient's cognitive model, so scaling up helps less here than it does for knowledge recall.

- Despite strong performance on knowledge recitation, LLMs fall short on tasks requiring empathy and flexibility, especially in realistic therapy scenarios.

- Overall, the experiments indicate that current models can serve as supportive tools but are not yet capable of conducting therapy sessions independently.

Implications and Future Directions

Although LLMs show promise on knowledge-based tasks, their usefulness in CBT practice is currently limited by weaknesses in understanding complex human emotions and interactions. The findings suggest several directions for future work:

- Enhancing Model Training: Training on more diverse and contextually rich datasets could improve models' ability to interpret complex cognitive structures and emotional states.

- Hybrid Systems: Combining LLMs with domain-specific algorithms or expert systems might address current limitations by making model outputs more interpretable and decisions more reliable.

- Ethical Considerations: Ethical deployment of AI in mental health settings remains paramount. Such systems should augment human therapists rather than replace them, with stringent oversight and informed-consent protocols in place.

Conclusion

The CBT-Bench framework represents a significant effort to assess LLM capabilities in a highly sensitive field. Although current models are promising in some areas, much work remains before their performance meets the nuanced requirements of real-world therapy. By laying this groundwork, the benchmark gives future research a basis for building more effective and empathetic AI tools that assist psychotherapy.