Universal Visual Grounding for GUI Agents: An Analytical Overview

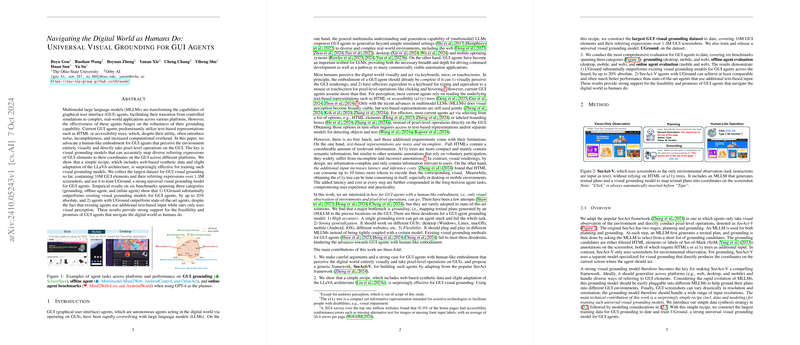

The paper "Navigating the Digital World as Humans Do: Universal Visual Grounding for GUI Agents" addresses a central challenge in building autonomous graphical user interface (GUI) agents: locating the on-screen element an agent intends to act on. These agents are moving from controlled simulations to complex real-world applications. The paper advocates a human-like embodiment in which agents perceive the environment visually and perform pixel-level actions, rather than relying on text-based representations such as HTML or accessibility (a11y) trees.

Motivation and Approach

The current reliance on text-based representations in GUI agents contributes to inefficiencies, including noise, incompleteness, and increased computational overhead. This paper proposes a shift towards agents that operate akin to humans by perceiving GUIs visually and performing actions directly at the pixel level. This approach necessitates robust visual grounding models capable of mapping diverse GUI element expressions to precise coordinate locations.
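To make the grounding task concrete, the following is a minimal sketch of how a predicted click point could be scored against a target element. It assumes a convention common in GUI grounding benchmarks, namely that a prediction counts as correct when the point falls inside the element's bounding box. The names `BoundingBox` and `grounding_accuracy` are illustrative and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in screenshot pixel coordinates


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box of a GUI element, in pixels (hypothetical type)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, point: Point) -> bool:
        x, y = point
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def grounding_accuracy(
    predicted_points: Sequence[Point],
    target_boxes: Sequence[BoundingBox],
) -> float:
    """Fraction of predictions that land inside their target element.

    Assumption: "point inside box" is the correctness criterion.
    """
    if len(predicted_points) != len(target_boxes):
        raise ValueError("predictions and targets must have the same length")
    if not target_boxes:
        return 0.0
    hits = sum(
        box.contains(point)
        for point, box in zip(predicted_points, target_boxes)
    )
    return hits / len(target_boxes)
```

Under this assumption, a grounding model only has to output a single coordinate pair per referring expression, and the agent can execute that coordinate directly as a click.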

To achieve this, the paper proposes a simple yet effective recipe: web-based synthetic data combined with a slight adaptation of the LLaVA architecture to train universal visual grounding models. The data collection effort yields a dataset of 10 million GUI elements across 1.3 million screenshots, which the authors describe as the largest dataset for GUI visual grounding to date. The resulting model, UGround, is a universal visual grounding model that substantially outperforms existing methods.
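As an illustration of what web-based synthetic data can look like, here is a hedged sketch that turns metadata for one rendered web element into a grounding example, pairing a referring expression with a target coordinate. The field names (`aria-label`, `text`, `alt`, `bbox`), the `GroundingExample` type, and the preference order are assumptions made for this example. They are not the paper's actual pipeline.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional, Tuple


@dataclass(frozen=True)
class GroundingExample:
    """One (screenshot, expression, target point) triple (hypothetical)."""
    screenshot_id: str
    expression: str
    target: Tuple[int, int]  # pixel coordinates of the element center


def make_example(
    screenshot_id: str, element: Dict[str, Any]
) -> Optional[GroundingExample]:
    """Build a grounding example from one element's metadata.

    Assumed input: a dict with optional text-like attributes and a
    `bbox` given as (left, top, width, height) in pixels.
    """
    expression = ""
    # Assumed preference order: explicit label, visible text, alt text.
    for key in ("aria-label", "text", "alt"):
        value = (element.get(key) or "").strip()
        if value:
            expression = value
            break
    if not expression:
        return None  # nothing to refer to the element by

    left, top, width, height = element["bbox"]
    if width <= 0 or height <= 0:
        return None  # element is not visibly rendered

    center = (round(left + width / 2), round(top + height / 2))
    return GroundingExample(screenshot_id, expression, center)
```

A usage example under the same assumptions:

```python
example = make_example(
    "shot-0001",
    {"aria-label": "Search", "bbox": (100, 40, 80, 20)},
)
print(example.expression, example.target)
```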

Empirical Results and Contributions

The paper evaluates UGround on six benchmarks spanning three categories: grounding, offline agent, and online agent. UGround outperforms existing visual grounding models for GUI agents by up to 20% absolute. Agents that use UGround also outperform state-of-the-art agents, even though those agents receive additional text-based input while UGround-based agents rely on visual perception alone. These results support the feasibility and promise of GUI agents that navigate the digital world as humans do.

Key contributions of this work include:

- Presenting a compelling rationale for GUI agents with human-like embodiments.

- Demonstrating a surprisingly effective recipe for GUI visual grounding achieved through synthetic data and LLaVA model adaptation.

- Providing the largest GUI visual grounding dataset and introducing UGround, a robust model with broad generalization capabilities.

- Conducting comprehensive evaluations demonstrating the efficacy of vision-only GUI agents in realistic environments.

Implications and Future Directions

UGround's results suggest that pixel-level interaction could become a more common design choice for GUI agents. They point toward agents that no longer depend on cumbersome text-based representations, which would simplify agent pipelines and reduce computational cost.

Future research could refine visual grounding techniques, improve the data efficiency of training, and improve the handling of long-tail elements on mobile and desktop UIs. Handling more nuanced interactions and idiosyncratic iconography will be important for practical deployment. Integrating auditory perception and other sensory modalities could further extend what these agents can do.

In conclusion, the paper lays groundwork for further advances in GUI agent technology by demonstrating the advantages of a human-like, vision-only approach to digital interaction and by providing a strong baseline for visual grounding in GUI-based systems.