Enhancing Vision-Language Compositionality in VLMs

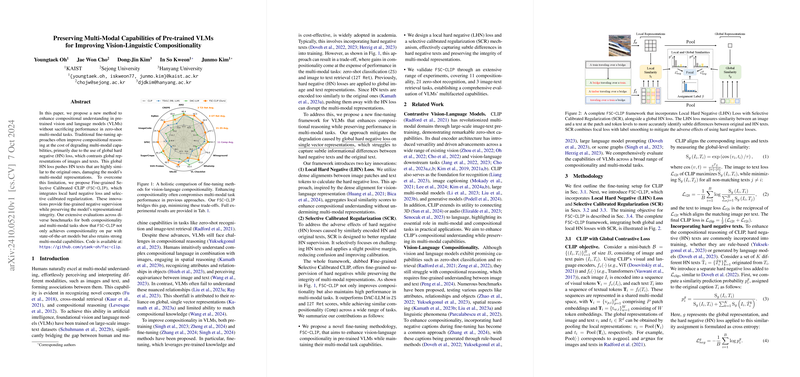

The paper "Preserving Multi-Modal Capabilities of Pre-trained VLMs for Improving Vision-Linguistic Compositionality" proposes Fine-grained Selective Calibrated CLIP (FSC-CLIP), a fine-tuning framework that improves compositional understanding in vision-language models (VLMs) while maintaining robust performance on multi-modal tasks. It targets the trade-off seen in existing methods that rely on a global hard negative (HN) loss, where gains in compositionality come at the expense of other multi-modal capabilities.

Methodology and Innovations

- Local Hard Negative (LHN) Loss: The LHN loss uses dense alignments between image patches and text tokens to capture the nuanced differences between the original text and its hard negative counterparts. This lets VLMs develop a more fine-grained understanding without degrading their multi-modal capabilities.

- Selective Calibrated Regularization (SCR): SCR combines focal loss and label smoothing to regulate HN supervision. It reduces the harm caused when HN texts and original texts are encoded similarly. Focal loss concentrates training on the more challenging associations, while label smoothing allows a slight positive margin for HN texts; together they help keep the model calibrated.
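To make the idea of dense patch-token alignment concrete, the following is a minimal sketch rather than the paper's implementation. The function names, the max-over-patches then mean-over-tokens aggregation, the temperature value, and the toy 2-D embeddings are all assumptions chosen for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def local_similarity(patches, tokens):
    """Image-text score built from patch-token alignments.

    Assumption: each token is matched to its best patch, and the
    per-token scores are averaged. Other aggregations are possible.
    """
    best_per_token = [max(cosine(t, p) for p in patches) for t in tokens]
    return sum(best_per_token) / len(best_per_token)

def hard_negative_loss(sim_original, sims_negative, temperature=0.1):
    """Cross-entropy asking the original caption to outscore its hard negatives."""
    logits = [sim_original / temperature] + [s / temperature for s in sims_negative]
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[0]

# Toy 2-D embeddings (made up): two image patches, captions as token lists.
patches = [[1.0, 0.0], [0.0, 1.0]]
original = [[1.0, 0.0], [0.0, 1.0]]        # every token has a matching patch
hard_negative = [[1.0, 0.0], [-1.0, 0.0]]  # one token swapped for a mismatch

s_pos = local_similarity(patches, original)
s_neg = local_similarity(patches, hard_negative)
loss = hard_negative_loss(s_pos, [s_neg])
```

In this toy case only one token differs between the two captions, yet the token-level score separates them, which is the kind of nuance a single global embedding comparison can blur.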
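One way focal loss and label smoothing could be combined on the hard negative logits is sketched below. This is an illustrative formulation under stated assumptions, not the paper's exact loss: the function name `scr_loss`, the default `gamma` and `smoothing` values, and the even split of smoothed label mass across hard negatives are all hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def scr_loss(logits, gamma=2.0, smoothing=0.05):
    """Focal, label-smoothed cross-entropy over [original, hn_1, ..., hn_K].

    logits[0] scores the original caption; the rest score hard negatives.
    """
    if len(logits) < 2:
        raise ValueError("need the original plus at least one hard negative")
    probs = softmax(logits)
    num_neg = len(logits) - 1
    # Label smoothing: shift a little target mass from the original to the
    # hard negatives, so they are not treated as entirely wrong.
    targets = [1.0 - smoothing] + [smoothing / num_neg] * num_neg
    # Focal term: (1 - p)^gamma shrinks the contribution of confident
    # predictions, so hard cases dominate the loss.
    return -sum(
        y * (1.0 - p) ** gamma * math.log(p)
        for y, p in zip(targets, probs)
    )

easy = [5.0, 0.0]   # original clearly preferred over the hard negative
hard = [0.2, 0.0]   # original and hard negative scored almost the same
easy_ratio = scr_loss(easy, smoothing=0.0) / scr_loss(easy, gamma=0.0, smoothing=0.0)
hard_ratio = scr_loss(hard, smoothing=0.0) / scr_loss(hard, gamma=0.0, smoothing=0.0)
```

With `gamma=0.0` and `smoothing=0.0` the function reduces to ordinary cross-entropy on the original caption. The two ratios show how much of the plain loss survives the focal term: far less for the easy pair than for the hard one.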

Evaluation and Results

The evaluation covers 11 compositionality tasks and 21 zero-shot classification tasks, alongside three image-text retrieval benchmarks. The results show that FSC-CLIP achieves compositionality scores comparable to existing state-of-the-art methods while preserving multi-modal capabilities:

- Compositionality: FSC-CLIP improves on compositional reasoning tasks, reaching scores close to 54.2 and surpassing previous methods such as DAC-LLM and TSVLC in various setups.

- Multi-modal Task Preservation: The preserved zero-shot recognition and retrieval performance attests to the efficacy of the LHN loss and SCR. For example, FSC-CLIP achieves retrieval scores that reflect an understanding of fine-grained compositional details.

- LoRA Integration: Incorporating LoRA further raises scores, improving the trade-off between compositional understanding and multi-modal task performance.

Implications and Future Directions

The methods proposed in FSC-CLIP not only advance compositional understanding in vision-language models but also point to fine-tuning strategies that minimize adverse effects on multi-modal capabilities. By combining a local hard negative loss with calibrated regularization, FSC-CLIP offers a nuanced approach that could potentially benefit model architectures beyond VLMs.

Future work could explore more diverse training datasets, moving beyond short captions to incorporate more complex language structures. Similar frameworks could also be adapted to other multi-modal tasks, such as audio or 3D model integration, thereby broadening the scope of compositionality research within artificial intelligence.

In summary, FSC-CLIP is a significant contribution toward reconciling compositional reasoning with multi-modal task performance, and a step forward for VLM capabilities across a variety of applications.