Enhancing Compositionality in Contrastive Vision-Language Models with CLoVe

Introduction to CLoVe Framework

Vision-Language Models (VLMs) have made notable advances on tasks that require understanding both textual and visual inputs. Models like CLIP are adept at object recognition but struggle with compositional language, that is, interpreting complex concepts by understanding how they are composed from simpler ones (for example, telling "a dog chasing a cat" apart from "a cat chasing a dog"). The paper introduces CLoVe, a framework that substantially improves how existing contrastive VLMs encode compositional language without compromising their performance on standard benchmarks.

Examining the Challenge of Compositionality

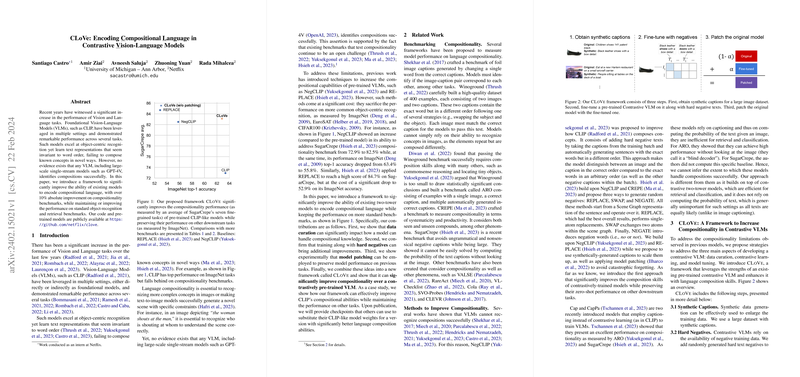

Several benchmarks have shown that even highly capable models such as GPT-4V struggle with compositional nuances. Previous attempts to give VLMs compositional understanding, such as NegCLIP and REPLACE, came at the cost of lower object recognition accuracy. CLoVe tackles this trade-off with a three-part approach (data curation, hard negatives, and model patching) and achieves over 10% absolute improvement on compositionality benchmarks.

CLoVe Framework Detailed

Synthetic Captions

CLoVe enriches the training data with high-quality synthetic captions generated for a large image dataset, balancing data volume against annotation quality. This avoids the main drawback of smaller, human-annotated datasets like COCO: they are high quality but do not cover a wide range of objects and actions.

Hard Negatives

Adding hard negative texts during training sharpens the model's understanding of language composition. Hard negatives are captions that differ only subtly from the true caption, for instance in word order, so the model has to learn how words are arranged and related in context rather than just which words appear. This substantially improves its compositionality.
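The sketch below illustrates the general idea in a NegCLIP-style form; it is not CLoVe's exact recipe. Two parts are assumptions for illustration: the hypothetical helper `swap_words_negative` builds a hard negative by swapping two random words (real pipelines typically swap words of the same part of speech so the negative stays fluent), and the hypothetical `contrastive_loss_with_hard_negatives` appends one hard-negative caption per image to the text candidates of a symmetric CLIP-style contrastive loss. Embeddings are plain NumPy arrays standing in for encoder outputs.

```python
import random

import numpy as np


def swap_words_negative(caption: str, rng: random.Random) -> str:
    """Hypothetical hard-negative generator: swap two distinct word positions.

    Simplification: real pipelines usually swap words of the same part of
    speech (e.g. two nouns) so that the negative remains grammatical.
    """
    words = caption.split()
    if len(words) < 2:
        return caption
    i, j = rng.sample(range(len(words)), 2)
    words[i], words[j] = words[j], words[i]
    return " ".join(words)


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax, shifted by the row max for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def contrastive_loss_with_hard_negatives(
    img: np.ndarray, txt: np.ndarray, neg: np.ndarray, temperature: float = 0.07
) -> float:
    """Symmetric contrastive loss with extra hard-negative captions.

    img, txt, neg: L2-normalized arrays of shape (B, D). Row b of `neg`
    embeds the hard negative of caption b. Hard negatives only enlarge the
    candidate set for image-to-text matching; they are not used as queries.
    """
    b = img.shape[0]
    targets = np.arange(b)
    # Image-to-text: each image scores all B captions plus all B hard negatives.
    candidates = np.concatenate([txt, neg], axis=0)          # (2B, D)
    i2t = -log_softmax(img @ candidates.T / temperature)[targets, targets].mean()
    # Text-to-image: each true caption scores all B images.
    t2i = -log_softmax(txt @ img.T / temperature)[targets, targets].mean()
    return float((i2t + t2i) / 2)
```

For example, `swap_words_negative("dog chases cat", random.Random(0))` returns the same three words in a different order. Because a hard negative shares almost every word with its positive, a model that ignores word order cannot separate the two, so the loss pushes it to encode structure.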

Model Patching

A key component of CLoVe is model patching, which is designed to retain the pre-trained model's original performance on standard benchmarks while keeping the improved compositionality. This step combines the strengths of the fine-tuned model with the general capabilities of the original model, addressing the trade-off observed in previous methods.
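A minimal sketch, assuming patching is done by linear weight interpolation in the style of PAINT (Ilharco et al., 2022): the patched weights are (1 - alpha) * theta_pretrained + alpha * theta_finetuned, applied parameter by parameter. The function name `patch_weights` and the use of plain dicts of NumPy arrays as "state dicts" are illustrative assumptions; in practice alpha would be chosen on held-out data to trade compositionality against standard-benchmark accuracy.

```python
import numpy as np


def patch_weights(
    pretrained: dict[str, np.ndarray],
    finetuned: dict[str, np.ndarray],
    alpha: float,
) -> dict[str, np.ndarray]:
    """Hypothetical helper: linearly interpolate two sets of model weights.

    alpha = 0 returns the pre-trained weights; alpha = 1 returns the
    fine-tuned ones. Both dicts must have the same keys and shapes.
    """
    if pretrained.keys() != finetuned.keys():
        raise ValueError("state dicts must have the same parameter names")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return {
        name: (1.0 - alpha) * pretrained[name] + alpha * finetuned[name]
        for name in pretrained
    }
```

Interpolating weights rather than ensembling predictions keeps inference cost identical to a single model; it relies on both models sharing an architecture and starting from the same pre-trained checkpoint.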

Empirical Validation

The framework was evaluated through a series of ablation studies and comparisons against baseline models. Synthetic captions, hard negatives, and model patching together produced improvements on both compositionality and standard benchmarks. For instance, applying CLoVe to CLIP improved its compositional understanding, as measured by benchmarks such as SugarCrepe, while maintaining its object recognition accuracy on tasks such as ImageNet.
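SugarCrepe-style benchmarks score a model by whether, for each image, it ranks the true caption above a single hard-negative caption. The sketch below computes that accuracy from precomputed embeddings; the function name `pairwise_choice_accuracy` is hypothetical, and the arrays stand in for outputs of a model's image and text encoders.

```python
import numpy as np


def pairwise_choice_accuracy(
    img: np.ndarray, pos: np.ndarray, neg: np.ndarray
) -> float:
    """Fraction of examples where the image is closer to its true caption.

    img, pos, neg: arrays of shape (N, D); row n of `neg` is the hard
    negative for example n. Rows are L2-normalized here so dot products
    are cosine similarities. Ties count as errors.
    """
    def normalize(x: np.ndarray) -> np.ndarray:
        return x / np.linalg.norm(x, axis=1, keepdims=True)

    img, pos, neg = normalize(img), normalize(pos), normalize(neg)
    pos_scores = np.sum(img * pos, axis=1)  # cosine(image, true caption)
    neg_scores = np.sum(img * neg, axis=1)  # cosine(image, hard negative)
    return float(np.mean(pos_scores > neg_scores))
```

Chance level for this binary choice is 50%, which makes absolute gains on such benchmarks easy to interpret.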

Looking Forward

While CLoVe marks a significant step toward better compositionality in VLMs, models that fully comprehend and generate compositional language are still some way off. Future work could refine synthetic caption generation, address potential performance biases across demographic groups, and extend these techniques to single-tower models. The release of code and pre-trained models opens avenues for further research and applications, supporting progress in vision-language modeling.

Concluding Thoughts

In summary, CLoVe represents a substantial advance in encoding compositional language within contrastive VLMs. By overcoming the existing trade-off between compositionality and object recognition accuracy, it sets a new reference point for future work on integrating vision and language understanding in AI models.