Evaluating Long Text Generation Capabilities of LLMs: An Overview of HelloBench

In the evolving landscape of NLP, the capabilities of LLMs have been widely acknowledged. However, the domain of long text generation has not been thoroughly examined. To address this gap, the research paper "HelloBench: Evaluating Long Text Generation Capabilities of LLMs" introduces HelloBench, a benchmark explicitly designed for evaluating the performance of LLMs in generating long text.

Key Contributions and Findings

HelloBench's Design and Structure:

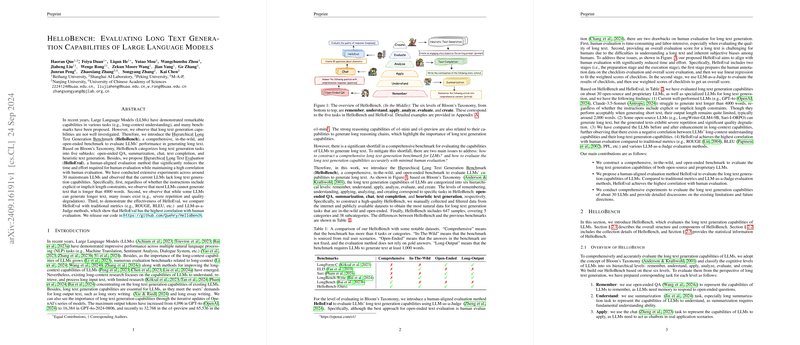

HelloBench is a comprehensive, in-the-wild, and open-ended benchmark that assesses the ability of LLMs to generate long texts across five tasks: open-ended QA, summarization, chat, text completion, and heuristic text generation. These tasks are aligned with Bloom's Taxonomy, which organizes cognitive skills into six hierarchical levels: remembering, understanding, applying, analyzing, evaluating, and creating. Mapping the tasks onto these levels supports a systematic analysis of the text generation proficiency of LLMs.

Human-Aligned Evaluation Method (HelloEval):

The paper also proposes HelloEval, a human-aligned evaluation method that leverages LLM-as-a-Judge to assess long text generation. HelloEval has two stages: preparation and execution. In the preparation stage, human annotators score responses against a set of checklists and also assign an overall score; linear regression is then fitted to these annotations to obtain a weight for each checklist. In the execution stage, an LLM scores the checklists, and the weighted checklist scores are summed to produce the final overall score. This method significantly reduces the time and labor required for human evaluation while maintaining a high correlation with human judgement.
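The two stages can be illustrated with a minimal sketch. It assumes a plain least-squares fit with no intercept term, and every number below is invented for illustration; the function names `fit_checklist_weights` and `overall_score` are hypothetical and are not taken from the paper or its code.

```python
from typing import List, Sequence


def fit_checklist_weights(X: Sequence[Sequence[float]],
                          y: Sequence[float]) -> List[float]:
    """Preparation stage: least-squares weights via the normal equations.

    X holds one row of human checklist scores per annotated response,
    y holds the matching human overall scores.
    """
    n = len(X[0])
    # Augmented system [X^T X | X^T y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(n)]
        + [sum(row[i] * target for row, target in zip(X, y))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("checklist scores are collinear; weights are not unique")
        A[col], A[pivot] = A[pivot], A[col]
        pivot_value = A[col][col]
        A[col] = [v / pivot_value for v in A[col]]
        for r in range(n):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]


def overall_score(weights: Sequence[float],
                  checklist_scores: Sequence[float]) -> float:
    """Execution stage: weighted sum of the judge's checklist scores."""
    return sum(w * s for w, s in zip(weights, checklist_scores))


# Invented human annotations: three checklists, five responses.
human_checklists = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 0.0],
    [1.0, 0.5, 1.0],
]
human_overall = [2.0, 3.0, 5.0, 5.0, 8.5]

weights = fit_checklist_weights(human_checklists, human_overall)

# Invented LLM-as-a-Judge checklist scores for one new response.
judge_scores = [1.0, 0.5, 0.0]
final_score = overall_score(weights, judge_scores)
```

The point of the design is that the expensive human annotation happens once, to fit the weights; afterwards only the checklist scoring, done by the judge model, has to be repeated for each new response.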

Experimental Results:

The authors ran extensive experiments on approximately 30 mainstream LLMs. Key observations include:

- Many current LLMs struggle to generate texts longer than 4,000 words.

- Even when some LLMs manage to generate longer texts, issues such as severe repetition and quality degradation are prevalent.

- GPT-4o-2024-08-06 and Mistral-Large-API performed best at generating long texts, though their scores still leave room for improvement.

- Enhanced LLMs specifically designed for long text generation, like LongWriter-GLM4-9B and Suri-I-ORPO, can generate significantly longer texts, but often at the cost of content quality.

- Under implicit length constraints, current LLMs tend to generate around 1,000 words, even when the instruction calls for longer text.
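Two of the symptoms listed above, short outputs and repetition, are easy to check for on a single generated text. The sketch below is only an illustrative diagnostic: the whitespace tokenization, the 4-gram window, and the function names are assumptions, and this is not the measurement procedure used in the paper.

```python
from collections import Counter


def word_count(text: str) -> int:
    """Number of whitespace-separated words in the text."""
    return len(text.split())


def repeated_ngram_ratio(text: str, n: int = 4) -> float:
    """Fraction of n-grams whose exact word sequence occurs more than once.

    0.0 means no n-gram repeats; 1.0 means every n-gram is a repeat
    of another one somewhere in the text.
    """
    words = text.lower().split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


# Invented example: a looping output versus a non-repeating one.
looping = "the cat sat on the mat " * 3
varied = "a b c d e f g h"
looping_ratio = repeated_ngram_ratio(looping)
varied_ratio = repeated_ngram_ratio(varied)
```

A high ratio on a long output is a hint that the model has fallen into a loop, but it says nothing about quality otherwise, so it complements rather than replaces a checklist-based evaluation.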

Effectiveness of HelloEval:

Compared with traditional metrics (ROUGE, BLEU) and other LLM-as-a-Judge methods, HelloEval achieved the highest correlation with human evaluation. This indicates that its scores align more closely with human judgement, making it a reliable tool for assessing long-text generation quality.
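Agreement of this kind is usually measured by correlating a method's scores with human scores over the same set of responses. The sketch below uses Spearman rank correlation as an assumption, since this overview does not state which coefficient was used; the scores are invented and the function names are hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented scores for five responses.
human_scores = [3.0, 7.5, 5.0, 9.0, 6.0]
method_scores = [2.0, 8.0, 4.5, 9.5, 7.0]
agreement = spearman(human_scores, method_scores)
```

Running the same comparison for each candidate evaluation method against the same human scores yields one coefficient per method, which is the kind of ranking the comparison above reports.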

Practical and Theoretical Implications

From a practical perspective, HelloBench and HelloEval give developers and researchers tools to comprehensively evaluate LLMs' long text generation capabilities. The detailed and systematic evaluation criteria, combined with the human-aligned evaluation framework, provide nuanced insights into model performance, thereby guiding future improvements in LLM development. This could lead to better deployment of LLMs in applications requiring high-quality long text generation, such as content creation, automated report generation, and extended dialogue systems.

Theoretically, the paper highlights the trade-off between the length and quality of generated text, showing that increasing the output length often results in decreased content quality. Further, the observation that enhancements in long-context understanding do not necessarily improve long text generation capabilities opens an avenue for future research. Addressing this discrepancy will be pivotal in advancing LLM architecture and training methodologies.

Future Directions

The paper underscores the necessity for continued research in developing techniques that can extend output length while maintaining, or even enhancing, quality. Moreover, exploring methods beyond alignment training to shift the current preference from short-input-long-output paradigms is crucial. Finally, harmonizing improvements in both long-context comprehension and long-text generation remains a fundamental challenge in realizing the full potential of LLMs in both long input and output scenarios.

Conclusion

The research presented in "HelloBench: Evaluating Long Text Generation Capabilities of LLMs" makes significant contributions to the field by providing a comprehensive benchmark and a robust evaluation framework. With HelloBench and HelloEval, the paper sets a new standard in assessing the long text generation capabilities of LLMs, providing a reliable foundation for future innovations in this domain.