TinyVLA: Towards Fast, Data-Efficient Vision-Language-Action Models for Robotic Manipulation

The paper "TinyVLA: Towards Fast, Data-Efficient Vision-Language-Action Models for Robotic Manipulation" introduces a family of compact vision-language-action (VLA) models that target two weaknesses of existing VLA models: slow inference and poor data efficiency. Current VLA models such as OpenVLA are slow at inference because of their large parameter counts, and they depend on extensive pre-training.

Contributions and Methodology

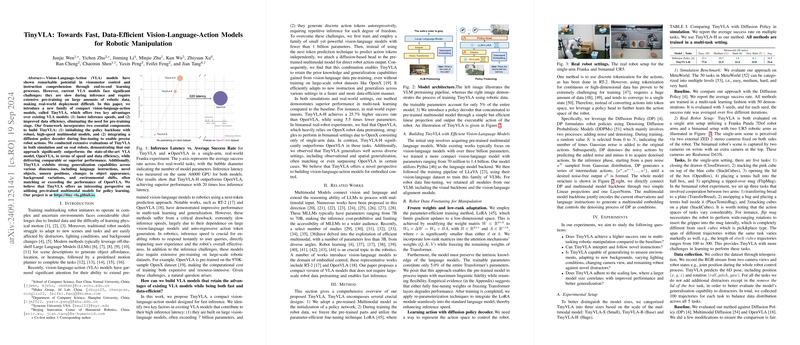

The authors present TinyVLA, a VLA model that offers faster inference and better data efficiency than prior VLA models without sacrificing performance. Its key innovations are:

- Policy Backbone Initialization: The policy backbone is initialized from pre-trained multimodal models that are both compact and fast, so the resulting VLA model stays small while inheriting the multimodal knowledge learned during pre-training.

- Diffusion Policy Decoder: A diffusion-based policy decoder, attached during fine-tuning, produces precise robot actions. It predicts actions directly instead of generating them token by token, which avoids the long autoregressive decoding used by models such as OpenVLA.
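The diffusion decoder idea can be illustrated with a minimal DDPM-style sampling loop in Python/NumPy. This is a sketch, not TinyVLA's implementation: the linear noise schedule, the 50 denoising steps, the 16×7 action chunk, and the `noise_predictor` callable (a hypothetical stand-in for the trained diffusion head) are all assumptions. It shows why no autoregressive token generation is needed: the backbone runs once to produce `condition`, and only the small head is iterated, refining every action dimension of the chunk in parallel at each step.

```python
import numpy as np


def make_ddpm_schedule(num_steps: int = 50, beta_start: float = 1e-4, beta_end: float = 2e-2):
    """Linear beta schedule as in the original DDPM paper (an assumption here)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def sample_actions(noise_predictor, condition, horizon, action_dim, num_steps=50, seed=0):
    """Reverse DDPM loop: start from Gaussian noise and iteratively denoise a whole
    (horizon, action_dim) action chunk, conditioned on the backbone embedding.
    The backbone is NOT re-run inside this loop; only the head is called."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_ddpm_schedule(num_steps)
    x = rng.standard_normal((horizon, action_dim))
    for t in reversed(range(num_steps)):
        eps = noise_predictor(x, t, condition)  # predicted noise, same shape as x
        # Mean of x_{t-1} given x_t and the predicted noise (standard DDPM update).
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        # Add fresh noise on every step except the last one.
        x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape) if t > 0 else mean
    return x


cond = np.zeros(16)  # hypothetical stand-in for the backbone's pooled embedding
dummy_eps = lambda x, t, c: np.zeros_like(x)  # untrained placeholder head
chunk = sample_actions(dummy_eps, cond, horizon=16, action_dim=7)
print(chunk.shape)  # (16, 7)
```

With an untrained placeholder head the output is just noise; the point is the control flow. An autoregressive decoder that emits one token per action dimension would instead need one language-model forward pass per token.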

TinyVLA uses a pre-trained multimodal model as the starting point of its policy network. During training, the backbone's pre-trained weights are frozen and only low-rank adaptation (LoRA) parameters are trained, so just 5% of the model's parameters are updated. Keeping the backbone weights fixed makes training more efficient and helps preserve the generalization ability of the pre-trained model, while a diffusion-based head generates the robot actions.
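The following minimal NumPy sketch shows how LoRA keeps a pre-trained weight matrix frozen while training only a small low-rank update. The layer size, the rank, and the scaling factor `alpha` are illustrative assumptions, not TinyVLA's configuration; the 5% figure reported for TinyVLA is measured over the whole model and depends on which layers receive adapters and at what rank.

```python
import numpy as np


class LoRALinear:
    """Frozen dense layer W plus a trainable low-rank update B @ A (LoRA).
    Effective weight: W + (alpha / rank) * B @ A."""

    def __init__(self, in_dim, out_dim, rank=8, alpha=16.0, seed=0):
        rng = np.random.default_rng(seed)
        # Stand-in for a pre-trained weight; it is never updated.
        self.W = rng.standard_normal((out_dim, in_dim)) / np.sqrt(in_dim)
        # Trainable factors: A is small random, B is zero so the update starts at 0.
        self.A = rng.standard_normal((rank, in_dim)) * 0.01
        self.B = np.zeros((out_dim, rank))
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (batch, in_dim) -> (batch, out_dim)
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        return self.A.size + self.B.size

    def frozen_params(self):
        return self.W.size


layer = LoRALinear(2048, 2048, rank=64)
frac = layer.trainable_params() / (layer.trainable_params() + layer.frozen_params())
print(f"{frac:.1%}")  # 5.9%
```

With these illustrative sizes a single adapted layer trains 1/17 (about 5.9%) of its parameters. Because `B` starts at zero, the adapted layer initially reproduces the frozen layer's output exactly, so fine-tuning begins from the pre-trained behavior.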

Experimental Validation

The authors evaluate TinyVLA extensively, both in simulation and on real robots. Key findings include:

- Simulation Results: On the MetaWorld benchmark (50 tasks), TinyVLA-H, the largest TinyVLA variant, significantly outperformed Diffusion Policy, achieving a 21.5% higher average success rate. The margin was largest on the more complex tasks.

- Real-World Performance: The model was evaluated on both single-arm and bimanual robotic tasks. TinyVLA-H reached a 94.0% average success rate across five single-arm tasks, outperforming OpenVLA by 25.7%. In the bimanual experiments, TinyVLA-H succeeded on tasks where the baselines consistently failed.

- Generalization: TinyVLA demonstrated strong generalization along several dimensions:

  - Instruction Generalization: The model correctly interpreted and followed novel instructions involving unseen objects and tasks.

  - View and Background Generalization: TinyVLA performed tasks accurately under different camera viewpoints and with varied backgrounds.

  - Lighting Conditions and Distractors: The model remained robust to changes in lighting and to the presence of distractor objects, significantly outperforming the baselines.

  - Spatial and Visual Generalization: TinyVLA handled objects placed in new positions and adapted to objects with different appearances.
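The gaps quoted above ("21.5% higher", "by 25.7%") do not say whether they are absolute differences in percentage points or relative gains, and the two readings can differ a lot. The small Python helper below is a sketch of one common way to aggregate success rates: every task weighted equally, optionally grouped by difficulty, with both readings of a gap computed. The task names, difficulty labels, and numbers are hypothetical and made up for illustration; they are not results from the paper.

```python
from collections import defaultdict
from statistics import mean


def average_success(per_task: dict[str, float]) -> float:
    """Mean success rate in percent, every task weighted equally."""
    return mean(per_task.values())


def compare(ours: dict[str, float], baseline: dict[str, float]) -> tuple[float, float]:
    """Return (absolute gap in percentage points, relative gain in percent)."""
    if ours.keys() != baseline.keys():
        raise ValueError("both methods must be evaluated on the same tasks")
    a, b = average_success(ours), average_success(baseline)
    return a - b, (a - b) / b * 100.0


def group_means(per_task: dict[str, float], groups: dict[str, str]) -> dict[str, float]:
    """Average success per difficulty group (e.g. easy / hard)."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for task, rate in per_task.items():
        buckets[groups[task]].append(rate)
    return {g: mean(rates) for g, rates in buckets.items()}


# Made-up numbers for illustration only; these are NOT results from the paper.
ours = {"task_a": 80.0, "task_b": 60.0}
baseline = {"task_a": 50.0, "task_b": 40.0}
groups = {"task_a": "easy", "task_b": "hard"}

gap_pp, rel = compare(ours, baseline)
print(f"{gap_pp:.1f} points, {rel:.1f}% relative")  # 25.0 points, 55.6% relative
print(group_means(ours, groups))  # {'easy': 80.0, 'hard': 60.0}
```

In this toy case the same comparison reads as a 25-point gain or a 55.6% relative gain, which is why it matters which convention a reported number uses.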

Implications and Future Directions

This research has substantial implications for deploying robotic systems in real-world environments. Because TinyVLA combines fast inference with high data efficiency, it is a promising option for practical robotic applications where computational resources are limited and rapid adaptation to new tasks and environments is essential.

Conclusion

TinyVLA represents a significant advance in the design of vision-language-action models for robotic manipulation. Its compact architecture, fast inference, and robust generalization across tasks and environments point to broad applicability. Future work could further optimize the model architecture and extend the approach to more complex settings such as multi-agent systems.