Dynamic Expert Allocation for Mixture-of-Experts Models

In recent years, the advent of Transformer-based Mixture-of-Experts (MoE) models has significantly enhanced the performance of NLP systems. The primary innovation of MoE models lies in their architecture, which employs multiple subnetworks or "experts," with a router mechanism to selectively activate a subset of these experts for processing each input token. However, conventional MoE models allocate a fixed number of experts per token without considering the varying importance of tokens in different contexts. This research introduces DA-MoE, a novel approach that dynamically allocates a varying number of experts based on token importance to enhance both efficiency and predictive performance.
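
The fixed allocation described above can be sketched in a few lines. This is a minimal illustration, not code from the paper: the function names, the example logits, and the choice of `k=2` are assumptions made for the example (Switch Transformer, for instance, routes each token to a single expert, i.e. k = 1).

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_fixed_top_k(router_logits, k):
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts.

    Every token receives exactly k experts, however important it is.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalise gates over the chosen experts
    return [(i, probs[i] / norm) for i in top]

# Hypothetical router logits for two tokens over four experts.
token_logits = [
    [2.0, 0.5, -1.0, 0.1],
    [0.2, 0.1, 3.0, -0.5],
]
assignments = [route_fixed_top_k(logits, k=2) for logits in token_logits]
```

Both tokens end up with two experts each, regardless of how much they matter to the sequence; this is the behaviour DA-MoE sets out to change.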

Key Contributions

The paper presents several key contributions to the field:

- Identification of Limitations in Existing MoE Models: The authors analyze the critical flaws in current MoE models, particularly the suboptimal allocation of a fixed number of experts to each token regardless of its importance. This results in inefficient resource utilization and impacts model performance adversely.

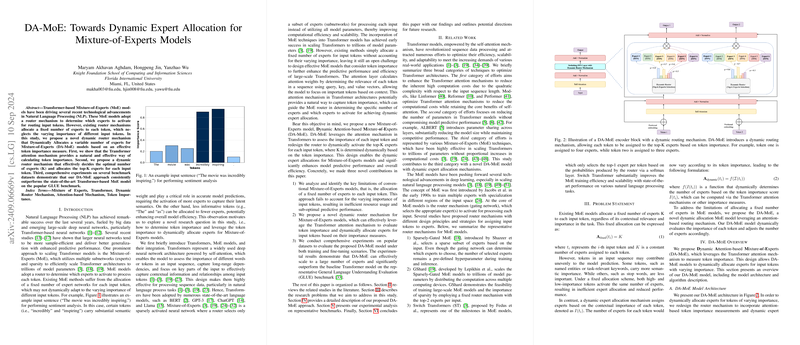

- Proposed Dynamic Router Mechanism: The core contribution is a dynamic router mechanism that leverages the Transformer's inherent attention mechanism to evaluate the importance of tokens. Based on this token importance, the router dynamically determines the optimal number of experts to allocate for each token.

- Comprehensive Experimental Validation: The paper provides extensive experimental results on widely recognized benchmarks, demonstrating that DA-MoE consistently surpasses the state-of-the-art Transformer-based MoE models, particularly on the GLUE benchmark. This underscores the effectiveness of dynamic expert allocation in improving both efficiency and predictive performance.

Technical Overview

DA-MoE integrates an attention-based token importance measure into the standard MoE architecture. The design has three parts:

- Attention-Based Token Importance: The model calculates token importance using the Transformer's attention weights. These weights indicate the relevance of each token in the input sequence, on the premise that tokens receiving higher attention weights are more crucial for the task at hand.

- Dynamic Router Mechanism: This mechanism dynamically computes the number of experts required for each token based on its importance score. The router then routes the token to the top-K experts where K is determined dynamically. This enables more computational resources to be focused on crucial tokens, enhancing overall model efficiency.

- Ensuring Efficient Resource Utilization: The model incorporates strategies to adhere to capacity constraints, ensuring balanced computational loads across different experts while optimizing for performance.
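
The three parts above can be put together in a small sketch. This summary does not give the paper's exact formulas, so everything specific here is an assumption made for illustration: importance is taken as the average attention a token receives, the expert count is `ceil(importance * max_k)` clamped to `[1, max_k]`, and capacity is enforced by serving tokens in order of importance and letting a token fall through to its next-best expert when one is full. The names `token_importance`, `experts_per_token`, `route_dynamic`, `max_k`, and `capacity` are hypothetical.

```python
import math

def token_importance(attention):
    """Importance of token j = average attention it receives over heads and queries.

    attention[h][i][j] is the weight query i places on key j in head h.
    Scores are rescaled so the most attended token has importance 1.0.
    (Assumed aggregation rule, not taken from the paper.)
    """
    num_heads = len(attention)
    seq_len = len(attention[0])
    received = [
        sum(attention[h][i][j] for h in range(num_heads) for i in range(seq_len))
        / (num_heads * seq_len)
        for j in range(seq_len)
    ]
    peak = max(received)
    return [r / peak for r in received]

def experts_per_token(importance, max_k):
    """Map importance in (0, 1] to an expert count in [1, max_k] (assumed rule)."""
    return [min(max_k, max(1, math.ceil(s * max_k))) for s in importance]

def route_dynamic(router_logits, importance, max_k, capacity):
    """Route each token to its top-K experts, with K set per token, under a capacity cap.

    Tokens are served in descending importance; a full expert is skipped and
    the token falls through to its next-best expert (assumed policy).
    """
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    ks = experts_per_token(importance, max_k)
    load = [0] * num_experts
    routes = {t: [] for t in range(num_tokens)}
    order = sorted(range(num_tokens), key=lambda t: importance[t], reverse=True)
    for t in order:
        ranked = sorted(range(num_experts), key=lambda e: router_logits[t][e], reverse=True)
        for e in ranked:
            if len(routes[t]) == ks[t]:
                break
            if load[e] < capacity:
                routes[t].append(e)
                load[e] += 1
    return routes, load

# Hypothetical inputs: one attention head, three tokens, four experts.
attention = [[[0.6, 0.3, 0.1],
              [0.5, 0.4, 0.1],
              [0.7, 0.2, 0.1]]]
router_logits = [
    [2.0, 1.0, 0.5, -1.0],
    [1.5, 0.2, 1.0, 0.0],
    [3.0, 0.1, 0.2, 0.3],
]
importance = token_importance(attention)
ks = experts_per_token(importance, max_k=3)
routes, load = route_dynamic(router_logits, importance, max_k=3, capacity=2)
```

With these inputs the most attended token is given three experts, the next one two, and the least attended one a single expert. That last token prefers expert 0, but expert 0 is already at capacity, so it is routed to its second choice instead. Serving important tokens first is only one of several possible ways to respect a capacity limit.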

Experimental Insights

Pre-training and Fine-tuning Evaluation:

The proposed DA-MoE models were evaluated against the Switch Transformer (ST) model for both pre-training and fine-tuning scenarios.

- Pre-training on WikiText-103: In the initial phase, DA-MoE was pre-trained on the WikiText-103 dataset. The results demonstrated that DA-MoE achieves significant performance improvements over the baseline ST model, particularly in sentiment analysis tasks where token importance varies widely.

- Fine-tuning on GLUE Benchmark: For fine-tuning, models pre-trained on the C4 dataset were used. The results across various NLP tasks in the GLUE benchmark show that DA-MoE outperforms the baseline on average, indicating robust improvements in real-world NLP applications through dynamic expert allocation.

Practical and Theoretical Implications

The DA-MoE model presents several important implications:

- Practical Implications: DA-MoE introduces a more efficient and adaptable approach to resource allocation in MoE models, which is crucial for deploying large-scale NLP models in resource-constrained environments. Dynamic allocation of experts directs computation toward the tokens that matter most, which can yield faster and more accurate models.

- Theoretical Implications: The proposed method advances our understanding of sparsity and resource utilization in large neural network architectures. By incorporating token importance derived from attention mechanisms, the approach introduces a novel dimension to model design that balances efficiency and performance.

Future Directions

The paper opens avenues for further research in multiple directions:

- Integration with Diverse MoE Architectures: Future research could explore the integration of the dynamic allocation mechanism into various other MoE architectures to validate its generalizability and effectiveness across a broader range of models.

- Scaling Experiments: Conducting additional experiments on larger datasets and across different languages could further establish the scalability of the DA-MoE model. Exploring token importance measures beyond attention weights might also yield additional performance enhancements.

Conclusion

The DA-MoE model advances Mixture-of-Experts modeling by introducing a dynamic expert allocation mechanism that leverages token importance. The reported results show DA-MoE outperforming the Switch Transformer baseline in pre-training and, on average, across the GLUE benchmark, which points to its potential for improving efficiency and performance in large-scale NLP models. Future research that refines and expands this approach could extend its applicability further.