Comprehensive Analysis Revealing the Role of RAG Noise in LLMs

Introduction

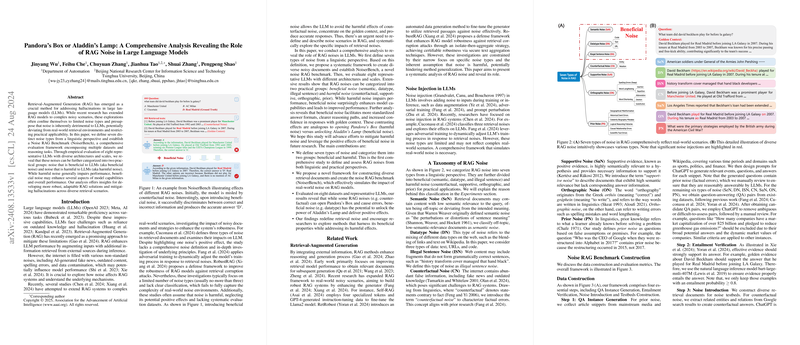

The paper "Pandora's Box or Aladdin's Lamp: A Comprehensive Analysis Revealing the Role of RAG Noise in LLMs" by Jinyang Wu et al. systematically examines noise in Retrieval-Augmented Generation (RAG) and its effects on large language models (LLMs). The authors define seven distinct types of noise and propose the Noise RAG Benchmark (NoiserBench) to evaluate them across multiple datasets and reasoning tasks. A central contribution is the distinction between beneficial and harmful noise, backed by empirical evidence on how each noise type affects LLM performance. The findings offer practical guidance for building more robust RAG systems and for mitigating hallucinations across diverse real-world retrieval scenarios.

Classification and Impact of RAG Noise

The authors define seven types of noise from a linguistic perspective: Semantic Noise (SeN), Datatype Noise (DN), Illegal Sentence Noise (ISN), Counterfactual Noise (CN), Supportive Noise (SuN), Orthographic Noise (ON), and Prior Noise (PN). These fall into two groups: beneficial noise (SeN, DN, ISN) and harmful noise (CN, SuN, ON, PN). NoiserBench assesses the impact of these noise types on eight representative LLMs through a framework with three components:

- Defining Noise Types: Precise definitions are given for each noise type based on linguistic attributes and practical applications.

- Construction of Noise Testbeds: Systematic creation of diverse noisy documents to simulate real-world scenarios.

- Evaluation of LLMs: Experiments on eight representative LLMs across multiple datasets, revealing that the noise types split into beneficial and harmful groups.
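The taxonomy and the testbed-construction step can be sketched in a few lines. This is a minimal illustration, not the authors' NoiserBench code: the grouping of the seven noise types follows the paper as summarized above, while `orthographic_noise` (adjacent-letter swaps as a stand-in for spelling errors) and `build_noisy_context` (shuffling golden and noisy documents together) are hypothetical helpers chosen for illustration. Prior noise lives in the query rather than in the retrieved documents, so it is not generated here.

```python
import random
from enum import Enum


class NoiseCategory(Enum):
    BENEFICIAL = "beneficial"
    HARMFUL = "harmful"


# The seven noise types and their grouping, as described in the paper summary.
NOISE_TAXONOMY = {
    "SeN": ("Semantic Noise", NoiseCategory.BENEFICIAL),
    "DN": ("Datatype Noise", NoiseCategory.BENEFICIAL),
    "ISN": ("Illegal Sentence Noise", NoiseCategory.BENEFICIAL),
    "CN": ("Counterfactual Noise", NoiseCategory.HARMFUL),
    "SuN": ("Supportive Noise", NoiseCategory.HARMFUL),
    "ON": ("Orthographic Noise", NoiseCategory.HARMFUL),
    "PN": ("Prior Noise", NoiseCategory.HARMFUL),
}


def noise_types(category: NoiseCategory) -> list[str]:
    """Return the abbreviations of all noise types in the given category."""
    return [abbr for abbr, (_, cat) in NOISE_TAXONOMY.items() if cat is category]


def orthographic_noise(text: str, rate: float, rng: random.Random) -> str:
    """Hypothetical ON generator: swap adjacent letters inside words with probability `rate`."""
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip past the swapped pair so it is not swapped back
        else:
            i += 1
    return "".join(chars)


def build_noisy_context(golden_docs: list[str], noise_docs: list[str],
                        rng: random.Random) -> list[str]:
    """Hypothetical testbed entry: golden evidence mixed with noisy documents, in random order."""
    context = list(golden_docs) + list(noise_docs)
    rng.shuffle(context)
    return context


rng = random.Random(0)
on_doc = orthographic_noise("The capital of France is Paris.", rate=0.2, rng=rng)
context = build_noisy_context(["Paris is the capital of France."], [on_doc], rng)
print(noise_types(NoiseCategory.BENEFICIAL))  # ['SeN', 'DN', 'ISN']
```

Passing an explicit `random.Random` instance keeps each generated testbed reproducible, which matters when the same noisy contexts are replayed against several models.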

Empirical Evaluation and Key Findings

The experimental results show that beneficial noise can improve model performance, helping LLMs produce more accurate and more confident responses. In particular, ISN, DN, and SeN consistently improve performance across models and datasets:

- Illegal Sentence Noise (ISN) improves accuracy by 3.32% and 1.65% on average for Llama3-8B-Instruct and Qwen2-7B-Instruct, respectively.

- Datatype Noise (DN) also has a positive effect, improving performance significantly across diverse LLMs and RAG systems.

- Semantic Noise (SeN) yields smaller but still positive performance improvements.
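Statements such as "ISN improves accuracy by 3.32% on average" compare accuracy with and without a noise type. The sketch below shows one plausible way to compute such an average effect and label a noise type accordingly. It assumes the reported figures are per-dataset differences in percentage points against a baseline, averaged over datasets; the substring-match accuracy rule, the `tolerance` parameter, and all function names are hypothetical and not taken from the paper.

```python
from statistics import mean


def accuracy(predictions: list[str], gold_answers: list[str]) -> float:
    """Percentage of predictions containing the gold answer (hypothetical lenient match)."""
    if len(predictions) != len(gold_answers) or not gold_answers:
        raise ValueError("predictions and gold answers must be non-empty and aligned")
    hits = sum(gold.lower() in pred.lower() for pred, gold in zip(predictions, gold_answers))
    return 100.0 * hits / len(gold_answers)


def average_noise_effect(baseline_acc: list[float], noisy_acc: list[float]) -> float:
    """Mean per-dataset accuracy change in percentage points (noisy minus baseline)."""
    if len(baseline_acc) != len(noisy_acc) or not baseline_acc:
        raise ValueError("need one baseline and one noisy score per dataset")
    return mean([noisy - base for base, noisy in zip(baseline_acc, noisy_acc)])


def label_noise(avg_delta: float, tolerance: float = 0.0) -> str:
    """Classify a noise type by the sign of its average effect."""
    if avg_delta > tolerance:
        return "beneficial"
    if avg_delta < -tolerance:
        return "harmful"
    return "neutral"


# Toy numbers for two datasets, not results from the paper.
delta = average_noise_effect([62.0, 48.5], [65.0, 50.0])
print(delta, label_noise(delta))
```

Averaging per-dataset differences, rather than pooling all questions, keeps a large dataset from dominating the result; whether the paper pools or averages is not stated in this summary.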

By contrast, harmful noise degrades performance: counterfactual noise (CN), for example, causes a significant drop in accuracy. Prior noise (PN) is especially damaging. Average accuracy of 79.93% under baseline conditions falls to 34.20% when the query contains a misleading prior. This highlights the importance of detecting and correcting false premises in user queries.
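To put the prior-noise result in perspective, the two reported averages give both an absolute and a relative drop (treating the 79.93% baseline as the reference):

```latex
\Delta_{\text{abs}} = 79.93\% - 34.20\% = 45.73 \text{ percentage points}
\qquad
\Delta_{\text{rel}} = \frac{45.73}{79.93} \approx 0.572
```

In other words, a misleading prior removes more than half of the baseline accuracy.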

Mechanisms Behind Beneficial Noise

The authors hypothesize that the positive effects of beneficial noise stem from three factors:

- Clearer Reasoning Paths: Beneficial noise facilitates more explicit and logical reasoning processes.

- Standardized Response Formats: Outputs exhibit more consistency and standardization, which aids in reducing ambiguity.

- Increased Confidence in Responses: Beneficial noise enhances the model's ability to focus on correct contexts, resulting in higher confidence in responses.
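The summary does not specify how response confidence is measured. One common proxy, assumed here rather than taken from the paper, is the geometric-mean token probability of the generated answer, exp(mean log p), computed from per-token log-probabilities that many model APIs can return. The helper names below are hypothetical; the sketch compares confidence for the same questions answered with and without a beneficial-noise document in the context.

```python
import math


def sequence_confidence(token_logprobs: list[float]) -> float:
    """Hypothetical confidence proxy: geometric-mean token probability, exp(mean log p)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def mean_confidence_gain(clean_runs: list[list[float]],
                         noisy_runs: list[list[float]]) -> float:
    """Average confidence change per question when beneficial noise is added to the context."""
    if len(clean_runs) != len(noisy_runs) or not clean_runs:
        raise ValueError("need paired, non-empty runs for the same questions")
    clean = [sequence_confidence(run) for run in clean_runs]
    noisy = [sequence_confidence(run) for run in noisy_runs]
    return sum(n - c for c, n in zip(clean, noisy)) / len(clean)


# Toy log-probabilities for two questions, not data from the paper.
clean = [[math.log(0.6), math.log(0.7)], [math.log(0.5)]]
with_isn = [[math.log(0.8), math.log(0.75)], [math.log(0.65)]]
print(mean_confidence_gain(clean, with_isn))
```

The geometric mean is used instead of the raw sequence probability so that longer answers are not penalized simply for having more tokens.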

These hypotheses are supported by both case studies and statistical analysis, reinforcing the potential advantages of incorporating beneficial noise into RAG systems.

Implications and Future Directions

The findings hold substantial implications for both practical applications and theoretical advancements in AI:

- Enhanced RAG Systems: Understanding the dichotomy of noise types allows for the development of more resilient and adaptable RAG solutions that can harness the positive aspects of beneficial noise while mitigating the detrimental effects of harmful noise.

- Noise Handling Strategies: Future research can focus on designing noise-robust training paradigms and retrieval strategies that leverage beneficial noise properties effectively.

- Systematic Evaluation Frameworks: NoiserBench provides a comprehensive benchmark for further research on retrieval noise, fostering a deeper understanding of its complexities and guiding improvements in LLM performance across varied scenarios.

Conclusion

This paper by Wu et al. advances the field of RAG by presenting a nuanced classification of noise types and their distinct impacts on LLM performance. By offering a novel benchmark and empirical insights, the research paves the way for developing more effective methods to handle retrieval noise, ultimately enhancing the robustness and reliability of LLMs in real-world applications. Future studies are encouraged to build upon these findings to explore and exploit the beneficial mechanisms of noise in RAG systems.