Fine-Tuning LLMs: Comprehensive Insights and Technical Review

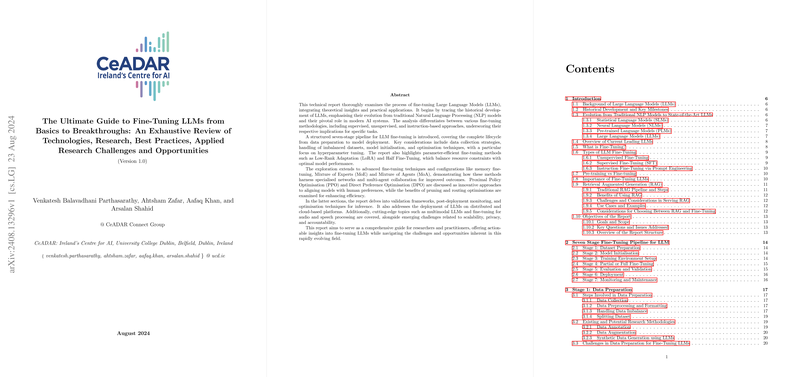

The paper "The Ultimate Guide to Fine-Tuning LLMs from Basics to Breakthroughs" examines fine-tuning methods for LLMs in depth, tracing their evolution from traditional NLP frameworks and describing current practice in adapting these models to domain-specific applications. The authors, affiliated with University College Dublin's CeADAR, work methodically through the process, from data preparation to deployment, and position the paper as a practical guide for AI researchers and engineers.

Core Methodologies and Innovations

The paper lays out a structured seven-stage pipeline for fine-tuning LLMs, beginning with data preparation and ending with deployment and monitoring. The early stages emphasise careful data collection and preprocessing, the foundation on which fine-tuning is built. The authors highlight challenges such as imbalanced datasets and discuss techniques such as SMOTE (Synthetic Minority Over-sampling Technique), which rebalances classes by generating synthetic minority-class samples.
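To make the SMOTE idea concrete, here is a minimal sketch in plain Python. It assumes the minority-class examples have already been converted to numeric feature vectors; the function name `smote`, its parameters, and the toy data are illustrative and do not come from the paper. Each synthetic point is placed at a random position on the line segment between a minority sample and one of its nearest minority neighbours.

```python
import random

def smote(minority, n_new, k=2, seed=0):
    """Create n_new synthetic samples by interpolating between minority points.

    Illustrative sketch only: `minority` is a list of equal-length numeric
    feature vectors, and k is the number of nearest neighbours to choose from.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority points by squared Euclidean distance to base.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic

# Hypothetical toy data: four minority-class points in two dimensions.
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]]
new_points = smote(minority, n_new=6)
```

Because every synthetic point is a convex combination of two real minority points, the new samples stay inside the region the minority class already occupies. In practice a maintained implementation would normally be preferred over a hand-written one.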

Parameter-Efficient Fine-Tuning (PEFT)

The paper gives substantial attention to Parameter-Efficient Fine-Tuning techniques such as Low-Rank Adaptation (LoRA) and its derivatives, including QLoRA. LoRA freezes the pretrained weights and trains only a pair of small low-rank matrices added to selected layers; QLoRA applies the same idea on top of a quantised base model. These techniques matter most in resource-constrained environments, where they substantially reduce memory and compute costs. Because only a small fraction of the parameters is updated, PEFT methods scale to large models without the cost of traditional full-model fine-tuning.
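The low-rank update behind LoRA can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the paper's code or any library's API: the helper names `matvec` and `lora_forward`, the dimensions, and the value of `alpha` are all chosen for illustration. The frozen weight `W` is left untouched, and the trainable update is the product of `B` (initialised to zero) and `A` (small random values), scaled by `alpha / r`.

```python
import random

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lora_forward(x, W, A, B, alpha):
    """Compute W x + (alpha / r) * B (A x), where r is the adapter rank."""
    r = len(A)
    base = matvec(W, x)               # frozen pretrained path
    update = matvec(B, matvec(A, x))  # trainable low-rank path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

rng = random.Random(0)
d_in, d_out, r = 8, 6, 2  # illustrative sizes only

W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable
B = [[0.0] * r for _ in range(d_out)]                               # trainable, starts at zero
x = [rng.gauss(0, 1) for _ in range(d_in)]

y = lora_forward(x, W, A, B, alpha=16)

full_params = d_out * d_in        # parameters updated by full fine-tuning
lora_params = r * (d_in + d_out)  # parameters updated by the adapter
```

Since `B` starts at zero, the adapted layer initially reproduces the frozen layer exactly, so training begins from the pretrained behaviour. The saving grows with layer size: for a 4096 × 4096 weight matrix and rank 8, the adapter trains 8 × (4096 + 4096) = 65,536 parameters instead of 16,777,216, roughly 0.4% of the original.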

Advanced Configurations

The paper also explores more advanced techniques: memory fine-tuning, Mixture of Experts (MoE), and Direct Preference Optimisation (DPO). These address multi-agent collaboration and the alignment of models with human preferences. For alignment, the paper covers reinforcement learning algorithms such as Proximal Policy Optimisation (PPO) alongside DPO, which optimises directly on preference pairs without a separate reinforcement learning loop.
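As an illustration of how Direct Preference Optimisation turns preference pairs into a training signal, the following sketch computes the standard DPO loss for a single pair. It assumes the sequence-level log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses have already been computed under both the policy being trained and a frozen reference model. The function name, argument names, and numeric values are hypothetical.

```python
import math

def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given summed log-probabilities.

    loss = -log(sigmoid(beta * margin)), where margin measures how much more
    the policy favours the chosen response than the reference model does.
    """
    margin = (pol_chosen - ref_chosen) - (pol_rejected - ref_rejected)
    z = -beta * margin
    # Numerically stable softplus(z) = log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

# Hypothetical log-probabilities for one (chosen, rejected) pair.
neutral = dpo_loss(-12.0, -12.0, -12.0, -12.0)   # policy identical to reference
improved = dpo_loss(-10.0, -14.0, -12.0, -12.0)  # policy now favours the chosen response
```

When the policy matches the reference the margin is zero and the loss equals log 2; the loss falls as the policy shifts probability toward the chosen response relative to the reference. The `beta` parameter controls how strongly the policy is kept close to the reference model.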

Deployment and Monitoring

The later sections of the paper address deployment on cloud-based and distributed platforms, with a focus on inference optimisation. They also cover the emergence of multimodal LLMs and their applications beyond text, including speech and audio processing.

Empirical Results and Practical Applications

The paper supports its discussion with comparisons of fine-tuned models on benchmarks such as GLUE and MMLU. It notes improvements in task-specific performance with reduced training data, underscoring the efficiency of the fine-tuning strategies discussed.

Future Directions and Open Challenges

The authors articulate ongoing challenges such as scalability, ethical considerations, and accountability in model deployment. They advocate for further research into hardware-software co-design and integration with emergent AI technologies, positing these as crucial for overcoming current limitations.

Conclusion

This review is a valuable resource for understanding the technical landscape of LLM fine-tuning. It combines theoretical insight with practical methodology and addresses the demands of deploying AI in real-world settings. Future research, the authors suggest, should focus on scalability and on improving model transparency, both of which are critical to the evolution of AI technologies.