Limits of kNN-LMs: An Analysis of Memory and Reasoning

The paper "Great Memory, Shallow Reasoning: Limits of kNN-LMs" by Shangyi Geng, Wenting Zhao, and Alexander M. Rush analyzes how effectively k-nearest neighbor language models (kNN-LMs) improve language models (LMs) through non-parametric retrieval. While these models deliver substantial gains on memory-intensive tasks, they show clear limitations on reasoning-intensive tasks.

Abstract Summary

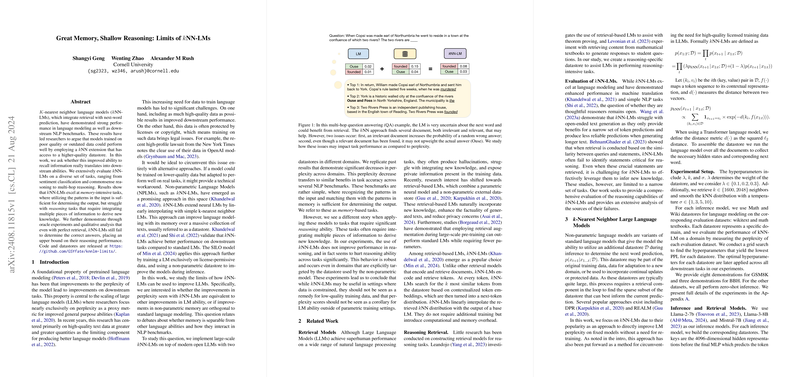

kNN-LMs have attracted attention for their strong language modeling performance, which comes from integrating retrieval into next-word prediction. The authors examine whether the improved information recall of kNN-LMs translates into better performance on downstream tasks. Their evaluation across a diverse set of tasks shows that kNN-LMs excel at memory-intensive tasks but struggle on reasoning tasks, even under ideal (oracle) retrieval. This result places an upper bound on the reasoning performance of kNN-LMs.

Introduction

The authors situate their work within recent progress in language models, much of which has been measured as lower perplexity. Further gains increasingly depend on high-quality training data, whose use often raises legal concerns. kNN-LMs offer a promising alternative: because their memory is non-parametric, a model can be extended at inference time with a higher-quality datastore without retraining. Although such models reduce perplexity, the paper asks whether this reduction corresponds to improved downstream reasoning.

Related Work

The related work surveys retrieval-augmented models and the mixed results of applying kNN-LMs to text generation and simpler NLP tasks. In particular, it highlights prior findings that kNN-LMs fall short on open-ended and long-form text generation, which motivates the paper's focus on reasoning tasks.

k-Nearest Neighbor Language Models

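A kNN-LM builds a datastore of (context embedding, next token) pairs by running the base LM over a text corpus. At inference time, it embeds the current context, retrieves the k stored contexts with the most similar embeddings, and turns their next tokens into a distribution p_kNN, weighting each neighbor by its distance to the query. This distribution is then linearly interpolated with the base LM's output: p(y | x) = λ · p_kNN(y | x) + (1 - λ) · p_LM(y | x). Although this lowers perplexity, the evaluation of reasoning abilities tells a different story.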
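The retrieve-and-interpolate mechanism described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the datastore is a hypothetical in-memory list of (embedding, next token) pairs, search is brute force (practical systems use an approximate nearest-neighbor index such as FAISS), neighbors are weighted by a softmax over negative squared distances, and the `temperature` knob is an added assumption (leave it at 1.0 for the plain formulation). The final step mixes the two distributions as lam * p_kNN + (1 - lam) * p_LM.

```python
import math
from collections import defaultdict


def knn_distribution(query, datastore, k, temperature=1.0):
    """Return p_kNN over next tokens from the k nearest stored contexts.

    `datastore` is a hypothetical list of (context_embedding, next_token) pairs.
    Distances are squared Euclidean; neighbor weights are a softmax over
    -distance / temperature.
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Brute-force search: sort every stored context by distance to the query.
    neighbors = sorted(datastore, key=lambda pair: sq_dist(query, pair[0]))[:k]
    logits = [-sq_dist(query, emb) / temperature for emb, _ in neighbors]

    # Softmax over the negative distances (subtract the max for stability).
    top = max(logits)
    exps = [math.exp(logit - top) for logit in logits]
    total = sum(exps)

    # Neighbors that share a next token pool their weight into one entry.
    probs = defaultdict(float)
    for (_, token), e in zip(neighbors, exps):
        probs[token] += e / total
    return dict(probs)


def knn_lm_distribution(p_lm, p_knn, lam):
    """Interpolate: p(y|x) = lam * p_kNN(y|x) + (1 - lam) * p_LM(y|x)."""
    vocab = set(p_lm) | set(p_knn)
    return {y: lam * p_knn.get(y, 0.0) + (1 - lam) * p_lm.get(y, 0.0) for y in vocab}


# Toy usage with 2-D vectors standing in for the base LM's context representations.
datastore = [
    ([0.0, 1.0], "Paris"),
    ([0.1, 0.9], "Paris"),
    ([1.0, 0.0], "London"),
]
p_knn = knn_distribution([0.0, 1.0], datastore, k=2)
p = knn_lm_distribution({"Paris": 0.4, "London": 0.6}, p_knn, lam=0.25)
```

In this toy run both retrieved neighbors continue with "Paris", so p_kNN puts all of its mass there, and the interpolated distribution gives about 0.55 to "Paris" and 0.45 to "London", flipping the base LM's preference. Because the base LM keeps weight (1 - lam), tokens the datastore never retrieves still receive nonzero probability.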

Key Findings

Memory vs. Reasoning Performance

- Memory-Intensive Tasks: On tasks such as sentiment classification and topic classification, kNN-LMs outperform standalone LMs. This aligns with the view that these tasks rely heavily on matching patterns in the input against stored patterns.

- Reasoning-Intensive Tasks: On tasks that demand multi-hop reasoning and the integration of separate pieces of information, kNN-LMs underperform. Even when the correct pieces are retrieved, the model fails to combine them effectively, suggesting a fundamental gap between memory retrieval and reasoning.

Experimental Setup and Results

The authors built large-scale datastores from Wikipedia and mathematical texts to benchmark the models. Despite substantial perplexity reductions, performance on reasoning tasks (e.g., Natural Questions, HotpotQA) did not improve and sometimes degraded. The paper reports its hyperparameter configurations and retrieval setups, along with quantitative results that document this gap.
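To see why lower perplexity need not mean better reasoning, it helps to recall what perplexity measures: the exponential of the mean negative log-probability assigned to each gold next token. The sketch below computes it for a base LM and for a kNN-LM-style interpolation. All probabilities and the mixing weight `lam` are invented for illustration; they are not results from the paper.

```python
import math


def perplexity(gold_token_probs):
    """Perplexity = exp of the mean negative log-probability of the gold tokens."""
    n = len(gold_token_probs)
    return math.exp(-sum(math.log(p) for p in gold_token_probs) / n)


def interpolate(p_lm, p_knn, lam):
    """kNN-LM probability of one gold token: lam * p_kNN + (1 - lam) * p_LM."""
    return lam * p_knn + (1 - lam) * p_lm


# Hypothetical probabilities of the gold next token at four positions (not paper data).
base_probs = [0.20, 0.05, 0.50, 0.10]
knn_probs = [0.90, 0.60, 0.40, 0.00]  # 0.0: no retrieved neighbor continued with the gold token
mixed_probs = [interpolate(b, k, lam=0.25) for b, k in zip(base_probs, knn_probs)]

print(f"base perplexity:   {perplexity(base_probs):.2f}")
print(f"kNN-LM perplexity: {perplexity(mixed_probs):.2f}")
```

With these made-up numbers, perplexity drops from about 6.69 to about 4.47, mostly because retrieval boosts tokens the datastore has seen in similar contexts. Since perplexity averages token-level likelihoods, it can fall like this without the model ever combining facts into a multi-step answer.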

Oracle Experiment

When evaluated under ideal retrieval conditions, where the correct answer is included among the retrieved nearest neighbors, kNN-LMs still fail to exploit it on reasoning tasks. This points to an intrinsic limitation in their reasoning ability that is independent of retrieval accuracy.

Qualitative Insights

Qualitative analysis shows that kNN-LMs often retrieve tokens that fit the local context but do not answer the question. In multi-hop reasoning, where the evidence for the correct answer spans different texts, kNN-LMs struggle to combine the separate pieces. They are also prone to retrieving high-frequency but irrelevant tokens, indicating sensitivity to contextual rather than semantic similarity.
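The oracle result and the high-frequency failure mode described above share a simple mechanical cause, which a toy calculation can illustrate. This is not the paper's oracle protocol; it is a hypothetical sketch assuming the standard kNN-LM weighting (a softmax over negative distances, with neighbors that share a next token pooling their weight). The question, tokens, distances, base-LM probabilities, and `lam` are all invented.

```python
import math
from collections import defaultdict


def knn_probs(neighbors):
    """p_kNN from retrieved (squared distance, next token) pairs, weighted by exp(-distance)."""
    weights = [math.exp(-dist) for dist, _ in neighbors]
    total = sum(weights)
    probs = defaultdict(float)
    for (_, token), w in zip(neighbors, weights):
        probs[token] += w / total  # neighbors sharing a token pool their weight
    return dict(probs)


# Hypothetical oracle case: the gold answer "Lyon" IS retrieved, and it is the
# single closest neighbor, but three other neighbors continue with a frequent
# function word that fits the context without answering the question.
neighbors = [(1.0, "Lyon"), (1.2, "the"), (1.3, "the"), (1.5, "the")]
p_knn = knn_probs(neighbors)

p_lm = {"Paris": 0.5, "the": 0.4, "Lyon": 0.1}  # hypothetical base-LM distribution
lam = 0.25
vocab = set(p_lm) | set(p_knn)
p = {y: lam * p_knn.get(y, 0.0) + (1 - lam) * p_lm.get(y, 0.0) for y in vocab}

prediction = max(p, key=p.get)
print(prediction)
```

Although "Lyon" is the nearest neighbor, "the" collects about 0.68 of p_kNN by pooling three slightly farther neighbors, so the interpolated model predicts "the" (about 0.47) while "Lyon" gets only about 0.15. Retrieving the right token is not enough when aggregation rewards frequency and nothing in the mechanism composes evidence across passages.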

Implications and Future Research

The implications are clear: while kNN-LMs offer valuable improvements on specific NLP tasks, their reasoning limitations call for approaches that go beyond perplexity reduction. Future research could explore hybrid models that better integrate non-parametric memory with parametric reasoning, or retrieval methods that better judge the relevance of what they retrieve.

Conclusion

The paper presents a critical evaluation of kNN-LMs, highlighting the gap between improved memory retrieval and limited reasoning. It underscores the need for approaches that go beyond simple kNN augmentation to advance reasoning in language models. As the field progresses, these insights can guide balanced improvements to both memory and reasoning.

Limitations

While the paper provides robust insights, it acknowledges constraints on datastore size and on the range of base models used. Larger datastores or different base models could yield different outcomes, which calls for broader evaluations in future work.

Acknowledgements

The research was supported by NSF IIS-1901030, NSF CAREER 2037519, and the IARPA HIATUS Program.

The analysis presented in this work contributes significantly to understanding the limits of kNN-LMs and can guide future language modeling research.