Investigating the Efficacy of Parametric and Non-Parametric Memories in LLMs

Recent research has explored how well large language models (LMs) retain and recall factual knowledge, and where they fall short. The paper "When Not to Trust Language Models: Investigating Effectiveness of Parametric and Non-Parametric Memories" contributes to this line of inquiry by systematically probing LMs on their ability to memorize and retrieve factual knowledge across diverse subject entities and relationship types. The evaluation uses a newly introduced dataset, PopQA, together with the existing EntityQuestions dataset, to assess both the LMs' parametric knowledge and their performance when augmented with non-parametric memory retrieval.

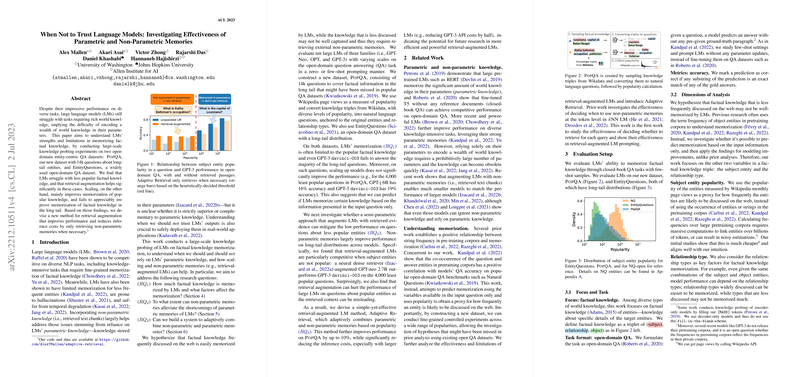

The paper shows that LMs memorize factual knowledge well when it concerns popular, frequently encountered entities, but their accuracy drops considerably in the long tail of less popular entities. The research identifies a strong positive correlation between entity popularity and memorization accuracy. Larger LMs, such as GPT-3, recall popular knowledge better than smaller ones but still struggle with less popular entities. This implies that scaling strengthens parametric memory for widely known facts without notably improving performance on rarely discussed ones.
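The popularity trend described above can be made concrete by grouping evaluation results into popularity buckets and computing accuracy per bucket. The following is a minimal sketch, not the authors' evaluation code: the function name `accuracy_by_popularity`, the order-of-magnitude bucketing, and the sample records are all assumptions chosen for illustration.

```python
import math
from collections import defaultdict

def accuracy_by_popularity(records):
    """Group (popularity, is_correct) pairs by order of magnitude.

    `popularity` is any positive numeric score (hypothetical here);
    the returned dict maps floor(log10(popularity)) to accuracy.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for popularity, is_correct in records:
        bucket = int(math.floor(math.log10(popularity)))
        totals[bucket] += 1
        hits[bucket] += int(is_correct)
    return {b: hits[b] / totals[b] for b in sorted(totals)}

# Made-up records for illustration only, not results from the paper.
records = [(50, False), (80, False), (500, True), (700, False), (20000, True)]
per_bucket = accuracy_by_popularity(records)
```

If accuracy rises with the bucket index, the model's parametric recall tracks entity popularity, which is the pattern the paper reports.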

To address these limitations, the authors explore retrieval augmentation as a way to compensate for the weaknesses of parametric memory. The augmented models draw on external non-parametric memory through retrievers such as BM25 and Contriever; the authors also evaluate GenRead, a generate-then-read method. The results are noteworthy: smaller models augmented with retrieval can surpass much larger unassisted models in accuracy. For instance, Contriever-augmented LMs outperform vanilla GPT-3, particularly on less popular factual information.
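To illustrate how retrieval augmentation works in principle, the sketch below ranks a small set of documents with a textbook BM25 scoring function and prepends the best match to the question. It is an assumption-laden toy, not the paper's pipeline: the regex tokenizer, the parameter values `k1=1.5` and `b=0.75`, the prompt template, and the helper names `bm25_scores` and `build_prompt` are all illustrative choices.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Simplistic lowercase word tokenizer (an assumption for this sketch).
    return re.findall(r"\w+", text.lower())

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with the standard BM25 formula."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs
    # Number of documents containing each term.
    doc_freq = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            n_t = doc_freq[term]
            idf = math.log((n_docs - n_t + 0.5) / (n_t + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def build_prompt(question, docs, top_k=1):
    """Prepend the top_k highest-scoring documents to the question."""
    scores = bm25_scores(question, docs)
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    context = "\n".join(docs[i] for i in ranked[:top_k])
    return f"{context}\n\nQ: {question}\nA:"

docs = [
    "Dracula is a novel written by Bram Stoker.",
    "Paris is the capital of France.",
    "BM25 is a ranking function used in search engines.",
]
question = "Who wrote the novel Dracula?"
scores = bm25_scores(question, docs)
prompt = build_prompt(question, docs)
```

The resulting `prompt` would then be passed to the LM in place of the bare question. A dense retriever such as Contriever would replace `bm25_scores` with embedding similarity, leaving the prompt construction unchanged.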

However, augmentation is not without pitfalls. Retrieval can mislead LMs when the retrieved documents contain incorrect or irrelevant information. As a result, accuracy can drop on questions about popular entities that the models had already memorized correctly.

To mitigate this, the paper introduces Adaptive Retrieval, a dynamic strategy that activates retrieval augmentation only when needed, based on heuristically determined popularity thresholds for each relationship type. This approach builds on the finding that popular knowledge is often well memorized by parametric models, while less popular knowledge benefits from external retrieval. Adaptive Retrieval improves performance and reduces inference costs, especially with larger models, which underscores its potential for practical use.
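The routing logic of a threshold-based scheme like this can be sketched in a few lines. The code below is a hedged illustration rather than the authors' implementation: the names `choose_threshold` and `adaptive_answer`, the rule "popularity at or above the threshold skips retrieval", and selecting the threshold by maximizing accuracy on a small labeled set are assumptions, and the callables `answer_parametric` and `answer_with_retrieval` are hypothetical stand-ins for model calls.

```python
def choose_threshold(examples):
    """Pick a popularity threshold for one relationship type.

    `examples` holds (popularity, parametric_correct, retrieval_correct)
    tuples from a labeled set. Entities at or above the threshold are
    answered from parametric memory; the rest use retrieval.
    """
    candidates = sorted({pop for pop, _, _ in examples}) + [float("inf")]
    best_threshold, best_hits = candidates[0], -1
    for threshold in candidates:
        hits = sum(
            param_ok if pop >= threshold else retr_ok
            for pop, param_ok, retr_ok in examples
        )
        if hits > best_hits:  # strict '>' keeps the lowest tied threshold
            best_threshold, best_hits = threshold, hits
    return best_threshold

def adaptive_answer(question, relation, popularity, thresholds,
                    answer_parametric, answer_with_retrieval):
    """Route a question; returns (answer, used_retrieval)."""
    # Unknown relation types always retrieve (an assumption of this sketch).
    threshold = thresholds.get(relation, float("inf"))
    if popularity >= threshold:
        return answer_parametric(question), False
    return answer_with_retrieval(question), True

# Made-up labeled examples for a hypothetical "occupation" relation.
dev = [(10, False, True), (100, False, True),
       (1000, True, False), (10000, True, True)]
thresholds = {"occupation": choose_threshold(dev)}

popular = adaptive_answer("q1", "occupation", 5000, thresholds,
                          lambda q: "from-memory", lambda q: "from-retrieval")
rare = adaptive_answer("q2", "occupation", 20, thresholds,
                       lambda q: "from-memory", lambda q: "from-retrieval")
```

Because popular questions bypass the retriever entirely, a scheme of this shape saves retrieval calls and shorter prompts in exactly the cases where retrieved context is most likely to hurt.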

The implications of this research are significant for the future development of LLMs. It suggests a path towards models that judiciously blend parametric and non-parametric knowledge, improving both accuracy and efficiency. It also invites further work on more nuanced strategies for retrieval augmentation, potentially combining more sophisticated calibration techniques with robust retrieval systems. As LMs continue to evolve, the paper's findings offer a foundation for deciding when a model should rely on its own memory and when it should retrieve, across the full spectrum of factual information.