VisualAgentBench: Benchmarking LMMs as Visual Foundation Agents

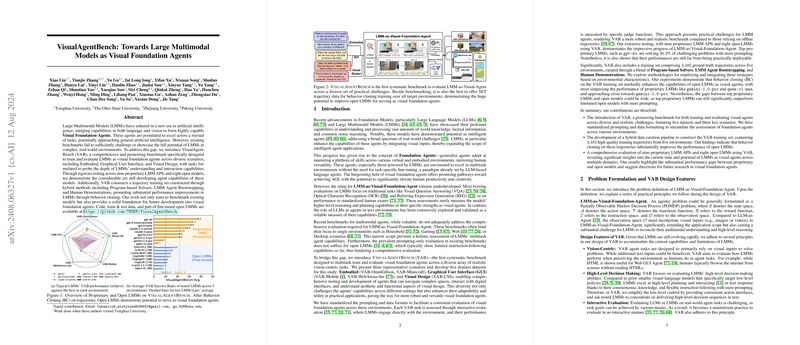

The paper introduces VisualAgentBench (VAB), a systematic and comprehensive benchmark for evaluating Large Multimodal Models (LMMs) as visual foundation agents, that is, agents that integrate language and vision capabilities and can operate across diverse scenarios. Visual foundation agents aim to excel in multitask environments, much as LLMs already do as agents in text-based tasks. Existing benchmarks, however, do not sufficiently challenge LMMs in realistic, interactive environments, which motivated the development of VAB.

VAB comprises a set of environments that simulate practical challenges for LMMs, grouped into three scenarios: Embodied, Graphical User Interface (GUI), and Visual Design. The benchmark supports both training and evaluation across a diverse set of tasks:

- Embodied Scenarios: VAB-OmniGibson and VAB-Minecraft simulate household and game environments, challenging LMMs with object manipulation and resource collection tasks, respectively. These tasks demand effective interaction with the environment and long-term planning capabilities.

- Graphical User Interface Scenarios: VAB-Mobile and VAB-WebArena-Lite are designed to test GUI agents. They require understanding complex user interfaces in mobile and web applications and making high-level decisions about how to interact with them.

- Visual Design Scenarios: VAB-CSS evaluates LMMs' abilities in web frontend design. Agents must fix CSS style issues through iterative problem-solving that involves both aesthetic and functional reasoning.

Each scenario in VAB presents tailored challenges that demand robust visual grounding and planning. LMMs are evaluated interactively: over multiple turns, the agent receives multimodal observations, chooses an action, and observes the result, and performance is reported as the success rate on each environment's tasks.
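The interactive evaluation described above can be pictured as a simple observe-act loop. The Python sketch below is purely illustrative and is not VAB's actual harness: the `Observation`, `StepResult`, and `Environment` types, the `run_episode` and `success_rate` helpers, and the toy `CounterEnv` task are all hypothetical names introduced here. It shows an agent acting until the environment reports completion or a step budget runs out, with success rate computed as the fraction of tasks solved.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class Observation:
    # Hypothetical observation: a screenshot reference plus a text description.
    image_path: str
    text: str


@dataclass
class StepResult:
    observation: Observation
    done: bool
    success: bool


class Environment(Protocol):
    # Hypothetical interface; VAB's real environments expose their own APIs.
    def reset(self) -> Observation: ...
    def step(self, action: str) -> StepResult: ...


# An agent maps (interaction history, current observation) to the next action.
Agent = Callable[[list[tuple[Observation, str]], Observation], str]


def run_episode(env: Environment, agent: Agent, max_steps: int = 20) -> bool:
    """Roll out one task; return True only if the environment reports success."""
    history: list[tuple[Observation, str]] = []
    obs = env.reset()
    for _ in range(max_steps):
        action = agent(history, obs)  # the agent (an LMM in practice) picks an action
        result = env.step(action)     # the environment executes it
        history.append((obs, action))
        if result.done:
            return result.success
        obs = result.observation
    return False  # step budget exhausted without finishing the task


def success_rate(outcomes: list[bool]) -> float:
    """Fraction of episodes that succeeded (0.0 for an empty list)."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


class CounterEnv:
    """Toy stand-in task: 'increment' until count == goal, then 'submit'."""

    def __init__(self, goal: int) -> None:
        self.goal = goal
        self.count = 0

    def _obs(self) -> Observation:
        return Observation("screenshot.png", f"count={self.count} goal={self.goal}")

    def reset(self) -> Observation:
        self.count = 0
        return self._obs()

    def step(self, action: str) -> StepResult:
        if action == "submit":
            return StepResult(self._obs(), done=True, success=self.count == self.goal)
        if action == "increment":
            self.count += 1
        return StepResult(self._obs(), done=False, success=False)


def rule_agent(history: list[tuple[Observation, str]], obs: Observation) -> str:
    # Parses "count=X goal=Y"; a real agent would query an LMM with the screenshot.
    fields = dict(part.split("=") for part in obs.text.split())
    return "submit" if int(fields["count"]) >= int(fields["goal"]) else "increment"


if __name__ == "__main__":
    outcomes = [run_episode(CounterEnv(g), rule_agent, max_steps=5) for g in (1, 3, 10)]
    print(outcomes, success_rate(outcomes))
```

Under these assumptions the script prints `[True, True, False] 0.6666666666666666`: the third task needs more actions than the five-step budget allows, which mirrors how long-horizon tasks can fail simply by running out of turns.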

Key Contributions and Implications:

- Introduction of VAB: By creating this benchmark, the paper provides a new standard for assessing LMMs, expanding the scope of evaluation beyond traditional tasks like Visual Question Answering (VQA) or Optical Character Recognition (OCR). VAB aligns more closely with real-world scenarios that foundation models are likely to encounter.

- Training via Trajectory Data: VAB uses a hybrid data curation pipeline to construct a training set of 4,482 trajectories for behavior cloning. Fine-tuning open LMMs on this set significantly improves their performance.

- Evaluation of Proprietary and Open LMMs: The results show a substantial gap between proprietary LMM APIs and fine-tuned open models, with top proprietary models such as GPT-4o achieving higher success rates in complex environments. At the same time, the gains from fine-tuning suggest that open models could become competitive through better training strategies.
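Behavior cloning on trajectories amounts to supervised fine-tuning on demonstrated actions. The sketch below assumes a hypothetical text-only trajectory format: the `Step` and `Trajectory` classes, the `to_sft_examples` helper, and the `max_history` window are illustrative, not VAB's actual data schema, and real VAB observations include images. Each step of a trajectory becomes one (prompt, target) pair. The prompt contains the task, a short window of past turns, and the current observation, and the model is trained to output the demonstrated action.

```python
from dataclasses import dataclass


@dataclass
class Step:
    observation: str  # hypothetical: text rendering of the screen/scene at this step
    action: str       # the demonstrated action taken at this step


@dataclass
class Trajectory:
    instruction: str
    steps: list[Step]


def to_sft_examples(traj: Trajectory, max_history: int = 3) -> list[dict[str, str]]:
    """Expand one trajectory into (prompt, target) pairs for behavior cloning.

    Step t yields one example whose prompt holds the instruction, up to
    `max_history` previous (observation, action) turns, and the current
    observation; the target is the action demonstrated at step t.
    """
    examples = []
    for t, step in enumerate(traj.steps):
        past = traj.steps[max(0, t - max_history):t]
        lines = [f"Task: {traj.instruction}"]
        for prev in past:
            lines.append(f"Observation: {prev.observation}")
            lines.append(f"Action: {prev.action}")
        lines.append(f"Observation: {step.observation}")
        lines.append("Action:")
        examples.append({"prompt": "\n".join(lines), "target": step.action})
    return examples


if __name__ == "__main__":
    traj = Trajectory(
        instruction="Turn on Wi-Fi",
        steps=[
            Step("home screen", "tap(Settings)"),
            Step("settings page", "tap(Wi-Fi)"),
            Step("wi-fi page", "finish()"),
        ],
    )
    for ex in to_sft_examples(traj, max_history=1):
        print(ex["prompt"], "->", ex["target"], sep="\n")
        print("---")
```

With `max_history=1`, the third example's prompt keeps only the immediately preceding turn (`tap(Wi-Fi)`), not the first action. A common choice, assumed here rather than taken from the paper, is to compute the training loss only on the target tokens, so the model learns to predict actions rather than reproduce observations.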

Future Directions and Conclusions:

The introduction of VAB underscores the need to close the gap between proprietary and open LMMs in agent capabilities. Its diverse and realistic challenges provide a rich testbed for future research on improving LMMs' multimodal reasoning. Future work aims to incorporate reinforcement learning to complement behavior cloning, moving LMMs closer to general and versatile visual foundation agents. Beyond documenting the current state and potential of LMMs, the benchmark charts a direction for future progress toward AGI.