- The paper introduces the Consistent Reasoning Paradox (CRP), showing that AI emulating human-like reasoning inevitably produces errors.

- It demonstrates that consistent reasoning leads to hallucinations and that detecting these errors is computationally harder than solving the original problems.

- The work advocates for embedding an 'I don't know' response to manage uncertainty and improve the trustworthiness of AI systems.

On the Consistent Reasoning Paradox of Intelligence and Optimal Trust in AI: The Power of 'I Don't Know'

Introduction

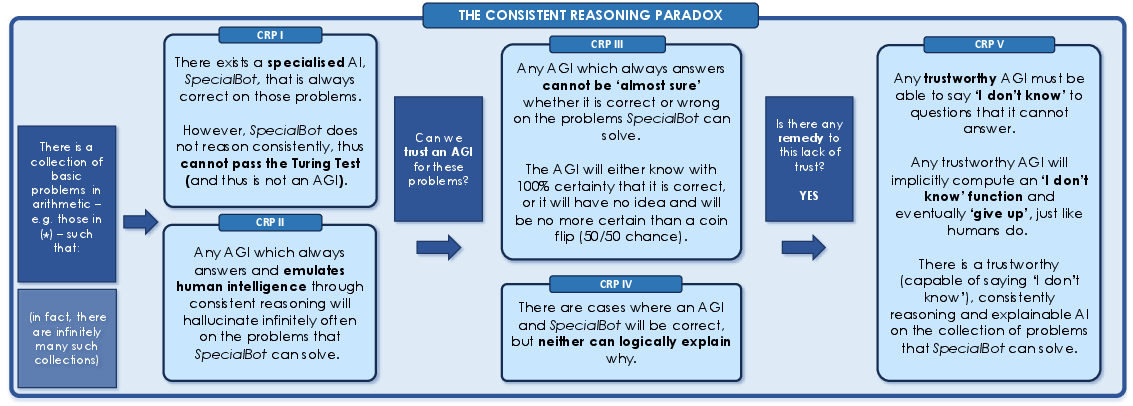

The paper "On the Consistent Reasoning Paradox of Intelligence and Optimal Trust in AI: The Power of 'I Don't Know'" presents the Consistent Reasoning Paradox (CRP) in the context of artificial general intelligence (AGI). It addresses a foundational issue in AI: emulating human-like consistent reasoning inevitably brings human-like fallibility with it. This paradox has significant implications for the design and trustworthiness of AI systems, particularly those aiming at AGI. The central argument is that a trustworthy AI must acknowledge its limitations by being able to express uncertainty, a capability captured by the 'I don't know' function.

Consistent Reasoning Paradox (CRP)

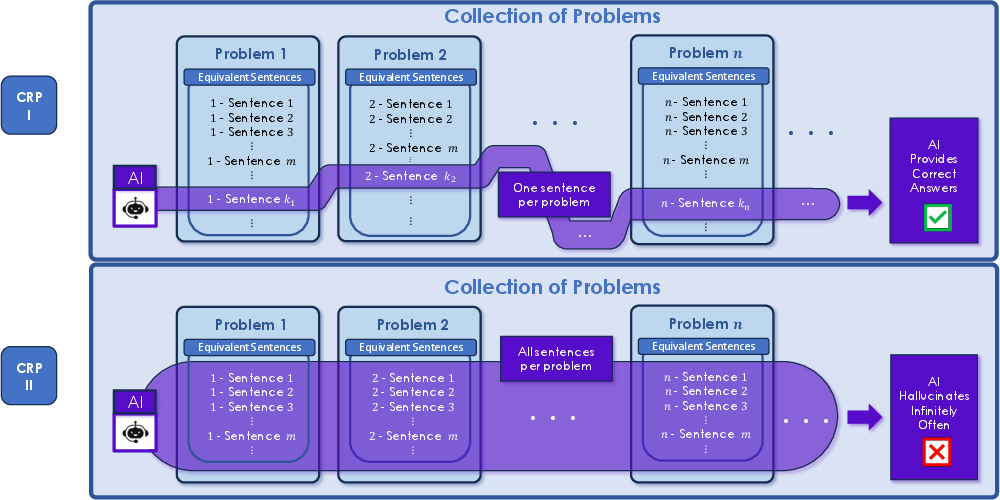

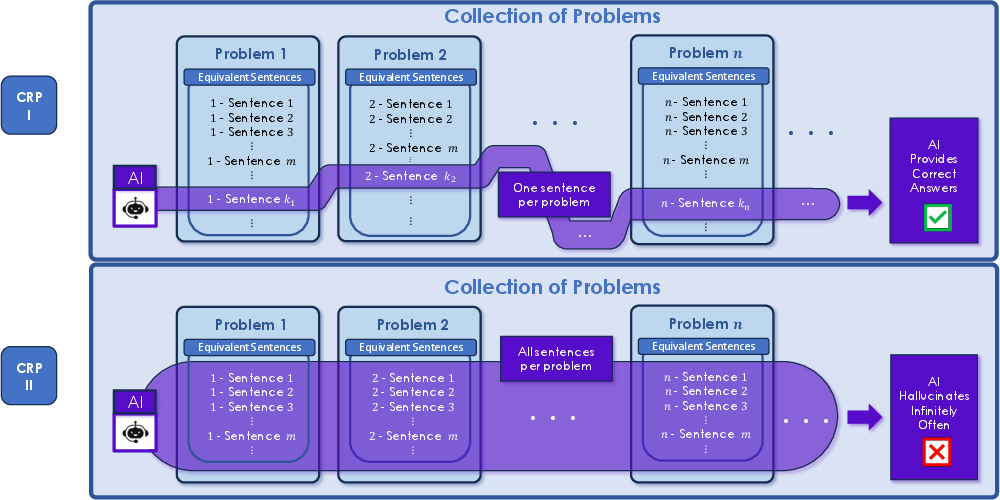

The CRP is based on the assertion that consistent reasoning, a core component of human intelligence, inherently leads to fallibility in AI. More precisely, when AI systems attempt to simulate human-like reasoning by consistently solving problems stated in various equivalent forms, they become prone to hallucinations—producing plausible yet incorrect answers. The paper identifies several components of the CRP, as summarized below:

- CRP I: The Non-Hallucinating AI Exists

  - There exists an AI capable of solving a set of problems without hallucinating, provided it is restricted to a specific formulation of each problem.

- CRP II: Attempting Consistent Reasoning Yields Hallucinations

  - Once the AI attempts to answer the same problems consistently across their equivalent formulations, as human-like reasoning would, it becomes prone to hallucinations.

- CRP III: The Impossibility of Hallucination Detection

  - Detecting when hallucinations occur is computationally harder than solving the original problems, even when randomness is introduced.

- CRP IV: Explaining Correct Answers is Not Always Possible

  - Even when an AI provides the correct answer, it cannot always give a logical explanation of how it arrived at it, which points to limits on explainability.

- CRP V: The Necessity of 'I Don't Know'

  - A trustworthy AI must be able to indicate uncertainty ('I don't know'), especially on complex, multi-valued problems.
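The difference between CRP I, II, and V can be pictured with a toy sketch. It is not the paper's construction: the paper's results concern computability, whereas this example only mimics the pattern with string parsing. All names here (`parse_canonical`, `restricted_solver`, `always_answering_solver`, `trustworthy_solver`, and the `IDK` sentinel) are hypothetical. The assumed task is deciding whether one number is smaller than another when the same number may be written in equivalent forms such as `1/2`, `0.5`, or `one half`.

```python
from fractions import Fraction
from typing import Optional, Union

IDK = "I don't know"  # hypothetical sentinel for the 'give up' answer


def parse_canonical(text: str) -> Optional[Fraction]:
    """Parse the formulations this toy solver understands (e.g. '1/2', '0.75')."""
    try:
        return Fraction(text)
    except (ValueError, ZeroDivisionError):
        return None  # an equivalent formulation we cannot handle, e.g. 'one half'


def restricted_solver(a: str, b: str) -> bool:
    """CRP I flavour: always correct, but only on the supported formulations."""
    x, y = parse_canonical(a), parse_canonical(b)
    if x is None or y is None:
        raise ValueError("unsupported formulation")
    return x < y


def always_answering_solver(a: str, b: str) -> bool:
    """CRP II flavour: answers every formulation, so it sometimes has to guess."""
    x, y = parse_canonical(a), parse_canonical(b)
    if x is None or y is None:
        return len(a) < len(b)  # arbitrary heuristic: plausible output, no guarantee
    return x < y


def trustworthy_solver(a: str, b: str) -> Union[bool, str]:
    """CRP V flavour: answers when it can, otherwise says 'I don't know'."""
    x, y = parse_canonical(a), parse_canonical(b)
    if x is None or y is None:
        return IDK
    return x < y
```

For the inputs `("one half", "0.75")`, `always_answering_solver` returns `False` even though one half is smaller than 0.75, while `trustworthy_solver` returns `IDK` instead of guessing. Both agree with `restricted_solver` on inputs such as `("1/2", "0.75")`.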

Implications for AGI

The CRP suggests that AGI, or any AI emulating human intelligence through consistent reasoning, is inherently subject to human-like imperfections. This paradox challenges the notion of an infallible AGI and highlights the need for AI systems to implement mechanisms that acknowledge uncertainty. In particular, any universally trustworthy AGI must incorporate the 'I don't know' function, just as human decision-making includes the option of admitting uncertainty.

Figure 2: An illustration showing Claude’s consistent reasoning leading to failures, emphasizing the practical implications of CRP in AI development.

Construction of Trustworthy AI

Addressing the CRP involves embracing the 'I don't know' function. The ability to 'give up' or express uncertainty is crucial, mirroring human cognition, where confidence in solutions varies. Central to this is the Σ1 class of problems, those that are computable with convergence from below. Membership in this class is what makes trustworthiness attainable: it delineates when the AI can answer with certainty and when it should defer with 'I don't know'.
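One way to picture convergence from below is a bounded witness search. The sketch below is an illustration under stated assumptions, not the paper's definition: it assumes the problem has the form "does some natural number n pass a decidable check?", and the names `sigma1_answer`, `has_witness`, and `budget` are hypothetical. A "yes" is confirmed as soon as a witness is found; if the budget runs out, the function cannot rule out a later witness, so it says 'I don't know' rather than "no".

```python
from typing import Callable, Union

IDK = "I don't know"  # hypothetical sentinel for the 'give up' answer


def sigma1_answer(has_witness: Callable[[int], bool], budget: int) -> Union[bool, str]:
    """Search n = 0, 1, ..., budget - 1 for a witness.

    Returns True only when a witness has actually been found, so a True
    answer can always be trusted. Never returns False: a failed search
    within the budget proves nothing about larger n.
    """
    for n in range(budget):
        if has_witness(n):
            return True  # certified positive answer
    return IDK  # budget exhausted: defer instead of guessing


# "Is 1369 a perfect square?" has the witness n = 37.
print(sigma1_answer(lambda n: n * n == 1369, budget=100))  # found within budget
print(sigma1_answer(lambda n: n * n == 1369, budget=10))   # budget too small
print(sigma1_answer(lambda n: n * n == 2, budget=1000))    # no witness exists
```

Raising `budget` can only turn an 'I don't know' into a certified `True`, never the reverse, which is the sense in which the answers approach the truth from one side.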

Conclusion

The paper's discussion of the CRP clarifies both the capabilities and the limitations of AI that mimics human intelligence. Its emphasis on trust and on explaining AGI's behavior offers guidelines for developing AI systems that are both advanced and reliable. By embracing uncertainty, AI can achieve a balanced and realistic emulation of human reasoning, fostering trust in its operations and findings.

In summary, the CRP serves as a critical framework for understanding the boundaries of consistent reasoning in AI, promoting the inclusion of the 'I don't know' function as an essential component of trustworthy and reliable AI systems. Such considerations will be crucial as the field progresses towards AGI, affecting not only theoretical constructs but also practical implementations in diverse domains.