Overview of "REAPER: Reasoning based Retrieval Planning for Complex RAG Systems"

The paper "REAPER: Reasoning based Retrieval Planning for Complex RAG Systems," authored by Ashutosh Joshi et al., presents a method for planning retrieval in conversational agents, with Amazon's Rufus as the target system. It introduces REAPER, a planning module for Retrieval-Augmented Generation (RAG) systems that aims to minimize the latency of retrieval planning while maintaining high accuracy.

Introduction and Problem Statement:

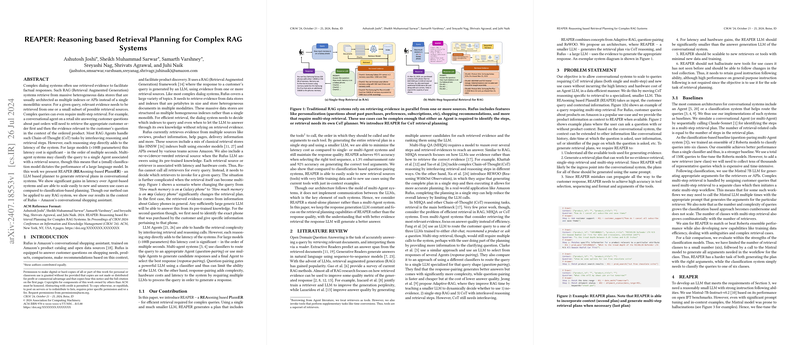

Conversational agents such as Amazon's Rufus, which uses a RAG framework, must retrieve from multiple large and heterogeneous data sources. Retrieval is not always a single step: a complex query may need several retrievals, where a later step depends on the result of an earlier one. Existing approaches such as LLM-based agents and multi-agent systems incur significant latency and hardware costs because their reasoning steps run sequentially. The paper proposes REAPER, a planner built on a much smaller LLM that generates retrieval plans efficiently and so addresses both costs.
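To make the idea of a retrieval plan concrete, here is a minimal sketch of a plan produced in one pass and then executed without further LLM calls. Everything in it is an illustrative assumption rather than the paper's plan format: the `Step` class, the `execute_plan` helper, the `"$k"` convention for referring to the output of step `k`, and the two toy tools are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Step:
    tool: str   # hypothetical tool name, looked up in the `tools` registry
    args: dict  # keyword arguments; the string "$k" means "output of step k"


def execute_plan(plan, tools):
    """Run the steps in order, replacing "$k" references with earlier outputs."""
    outputs = []
    for step in plan:
        resolved = {}
        for key, val in step.args.items():
            # Assumption: any string argument starting with "$" is a step reference.
            if isinstance(val, str) and val.startswith("$"):
                resolved[key] = outputs[int(val[1:])]
            else:
                resolved[key] = val
        outputs.append(tools[step.tool](**resolved))
    return outputs


# Toy stand-ins for real retrieval sources (hypothetical).
tools = {
    "order_lookup": lambda customer_id: {"order_id": "A1", "customer": customer_id},
    "shipping_status": lambda order: f"order {order['order_id']} is in transit",
}

# A two-step plan: the second step consumes the first step's result.
plan = [
    Step("order_lookup", {"customer_id": "c42"}),
    Step("shipping_status", {"order": "$0"}),
]

result = execute_plan(plan, tools)
print(result[1])
```

The point of the sketch is the cost structure: the planner is consulted once to produce `plan`, whereas an agent loop would call an LLM again after each tool result.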

Contribution and Methodology:

REAPER's training data is generated with three components:

- Tool Evolve (TEvo): Generates diverse inputs by sampling tool names and descriptions and varying in-context examples to enhance the model's understanding of different tools.

- Tool-Task Generator (TTG): Creates diverse tasks related to retrieval planning, forcing the LLM to understand the tools and retrieval plans deeply.

- Diverse Query Sampler (DQS): Samples semantically diverse customer queries, ensuring the model generalizes well across different query types.
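As an illustration of what sampling semantically diverse queries can look like, the sketch below uses greedy farthest-point selection over toy bag-of-words vectors. This is an assumed stand-in, not the paper's DQS implementation: the function names `embed`, `cosine`, and `sample_diverse` are hypothetical, and a real system would likely use a learned sentence encoder instead of word counts.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector (assumption: stands in for a real sentence encoder)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def sample_diverse(queries, k):
    """Greedily pick k queries, each time taking the one least similar to those chosen."""
    if k <= 0 or not queries:
        return []
    vecs = [embed(q) for q in queries]
    chosen = [0]  # seed with the first query
    while len(chosen) < min(k, len(queries)):
        remaining = [i for i in range(len(queries)) if i not in chosen]
        # Lowest "closest-neighbour similarity" means farthest from the chosen set.
        best = min(
            remaining,
            key=lambda i: max(cosine(vecs[i], vecs[j]) for j in chosen),
        )
        chosen.append(best)
    return [queries[i] for i in chosen]


queries = [
    "where is my order",
    "where is my package",
    "compare two laptops",
]
picked = sample_diverse(queries, 2)
print(picked)
```

With these three queries, the second pick skips the near-duplicate "where is my package" in favour of the unrelated laptop query, which is the behaviour a diversity sampler is after.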

The training data combines REAPER-specific tasks with general-purpose instruction data (Generic-IFT and Generic-IFT-Evolve), balancing task-specific accuracy against broad instruction-following ability. REAPER achieves 96% accuracy in tool selection and 92% in argument extraction. It outperforms the baseline classifier-based system and is more efficient than LLM baselines such as Claude3-Sonnet and Mistral 7B.

Experimental Results:

The paper presents an extensive evaluation through ablation studies and comparisons with baselines. Key findings include:

- Data Efficiency: The classifier-based system required 150K training samples, while REAPER achieved higher accuracy with just 6K in-domain queries.

- Latency: REAPER takes only 207 ms per plan, compared with 2 seconds for large models like Claude3-Sonnet.

- Generalization and Scalability: REAPER can generalize to new tools and use cases with minimal additional training data. For instance, adding a new retrieval source required only 286 new examples, and the model learned to use an unseen tool based on in-context examples alone.
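The data-efficiency and latency figures above are easier to compare as ratios. The short calculation below uses only the numbers quoted in this section; the variable names are illustrative.

```python
# Figures quoted in the findings above.
classifier_samples = 150_000     # training samples for the classifier-based system
reaper_samples = 6_000           # in-domain queries used for REAPER
reaper_latency_ms = 207          # REAPER latency per plan
large_model_latency_ms = 2_000   # 2 seconds for large models like Claude3-Sonnet

data_ratio = classifier_samples / reaper_samples
latency_ratio = large_model_latency_ms / reaper_latency_ms

print(f"training data: {data_ratio:.0f}x fewer samples")
print(f"latency: {latency_ratio:.1f}x faster per plan")
```

On these figures, REAPER uses 25 times fewer in-domain samples and produces a plan just under 10 times faster.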

Implications and Future Work:

Practically, REAPER makes conversational AI systems more responsive, which matters most in deployments with stringent latency requirements. Theoretically, the principles behind its data generation and fine-tuning methods may apply to other areas that require efficient multi-step reasoning.

Future research could explore further optimization of REAPER's training data mix and its application to more diverse retrieval scenarios. Further studies could also integrate REAPER with different backend systems to test its robustness and scalability in real-world environments.

In conclusion, REAPER advances retrieval planning in RAG systems, enabling rapid and accurate conversational AI responses. Its approach to data generation and model fine-tuning points toward more efficient and scalable systems for complex, multi-step queries.