Overview of LLM Alignment Techniques

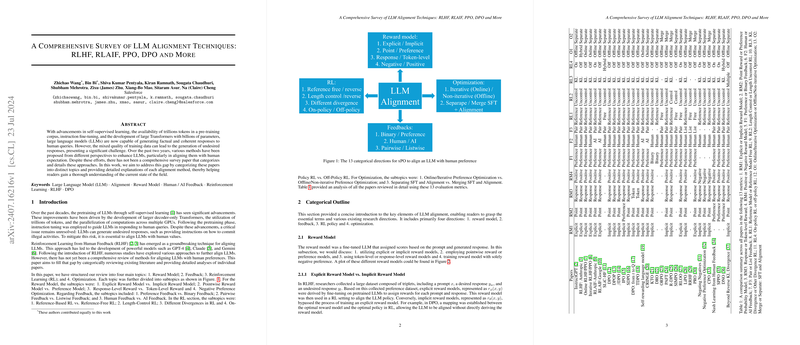

The paper "A Comprehensive Survey of LLM Alignment Techniques: RLHF, RLAIF, PPO, DPO and More" (Wang et al., 23 Jul 2024) offers a structured overview of methods for aligning LLMs with human expectations. It organizes alignment techniques into four major areas: Reward Models, Feedback, Reinforcement Learning (RL), and Optimization, and examines each in detail.

Reward Models in Detail

The paper distinguishes between explicit and implicit reward models. Explicit reward models assign scalar rewards to LLM outputs, and the policy is then fine-tuned to maximize those rewards. Implicit approaches, such as Direct Preference Optimization (DPO), optimize the policy directly on preference data without computing rewards with a separate model. Reward models are further divided into pointwise models, which score individual outputs, and preferencewise models, which rank outputs relative to alternatives. The survey also covers token-level rewards, which assign credit to individual tokens rather than to a whole response, and negative preference optimization, which learns from undesirable responses.

Pointwise vs. Preferencewise Reward Models

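Pointwise reward models score each LLM output independently: a reward function $r(x, y)$ assigns a scalar score to output $y$ given input $x$, and the model is trained to predict this score accurately. Preferencewise models instead rank outputs relative to each other. Given two outputs $y_1$ and $y_2$ for the same input $x$, the model predicts the probability that $y_1$ is preferred, commonly modeled with the Bradley-Terry form $P(y_1 \succ y_2 \mid x) = \sigma\big(r(x, y_1) - r(x, y_2)\big)$, where $\sigma$ is the logistic function. Training minimizes the negative log of this probability over labeled pairs, which is a contrastive loss.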
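As a minimal sketch of the two training signals, assuming rewards are plain Python floats rather than outputs of a neural network, the functions below contrast a pointwise regression loss with a pairwise Bradley-Terry loss, one common choice of contrastive loss. The names `pointwise_loss`, `pairwise_loss`, and `neg_log_sigmoid` are illustrative, not taken from the survey.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z)), i.e. log(1 + exp(-z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def pointwise_loss(predicted_score: float, target_score: float) -> float:
    """Squared error between a predicted reward r(x, y) and a human-assigned score."""
    return (predicted_score - target_score) ** 2


def pairwise_loss(reward_preferred: float, reward_other: float) -> float:
    """Bradley-Terry negative log-likelihood that the preferred output wins."""
    return neg_log_sigmoid(reward_preferred - reward_other)


if __name__ == "__main__":
    print(pointwise_loss(0.8, 1.0))  # small regression error
    print(pairwise_loss(2.0, 0.0))   # low loss: preferred output scored higher
    print(pairwise_loss(0.0, 2.0))   # high loss: ranking is inverted
```

The pairwise loss depends only on the difference between the two rewards, so adding the same constant to both scores leaves it unchanged; a pointwise model, by contrast, must match the absolute score.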

Explicit vs. Implicit Reward Models

Explicit reward models, like those used in traditional RLHF, require training a separate reward model to predict human preferences. Implicit approaches, like DPO, skip this step and optimize the policy directly on preference data. DPO reparameterizes the KL-regularized RL objective so that the reward is expressed in terms of the policy and a reference model, which allows stable training without an explicit reward model.
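The DPO objective can be sketched for a single preference pair as below, assuming the summed log-probabilities of each response under the policy and under the frozen reference model have already been computed. The implicit reward of a response is $\beta$ times its policy-to-reference log-ratio; `beta=0.1` is an illustrative default, not a value taken from the survey, and `dpo_loss` is a hypothetical helper name.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def dpo_loss(
    policy_logp_chosen: float,
    policy_logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair from sequence log-probabilities."""
    # Implicit rewards: beta * log(pi_theta(y|x) / pi_ref(y|x)).
    chosen_reward = beta * (policy_logp_chosen - ref_logp_chosen)
    rejected_reward = beta * (policy_logp_rejected - ref_logp_rejected)
    # Same Bradley-Terry form as a reward-model loss, applied to implicit rewards.
    return neg_log_sigmoid(chosen_reward - rejected_reward)


if __name__ == "__main__":
    # Policy identical to the reference: loss equals log(2).
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
    # Policy raised the chosen response and lowered the rejected one.
    print(dpo_loss(-9.0, -13.0, -10.0, -12.0))
```

In practice these log-probabilities would come from forward passes over tokenized responses, with gradients flowing only through the policy terms.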

Feedback Mechanisms

Feedback mechanisms play a crucial role in LLM alignment. The survey compares preference feedback with binary feedback. Preference feedback, in which annotators rank response pairs or lists, can provide a more informative training signal. Binary feedback, such as a "thumbs up" or "thumbs down", is simpler to collect but potentially noisier. The survey also examines human versus AI feedback, highlighting recent advances in AI-generated feedback within the RLAIF paradigm, which can reduce reliance on human annotators.

Preference Feedback

Preference feedback involves collecting data where humans compare different outputs from the LLM and indicate which one they prefer. This can be in the form of pairwise comparisons or rankings of multiple outputs.
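A ranking of $K$ outputs implies $K(K-1)/2$ pairwise preferences, so one common way to use ranked feedback with a pairwise reward model is to expand each ranking into pairs. A minimal sketch, assuming responses are given best-first as strings; `ranking_to_pairs` is a hypothetical helper name.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def ranking_to_pairs(ranked_best_first: Sequence[str]) -> List[Tuple[str, str]]:
    """Expand a best-first ranking into (preferred, dispreferred) pairs.

    itertools.combinations preserves input order, so the first element of each
    pair always appears earlier in the ranking, i.e. it was preferred.
    """
    return list(combinations(ranked_best_first, 2))


if __name__ == "__main__":
    print(ranking_to_pairs(["A", "B", "C"]))
    # [('A', 'B'), ('A', 'C'), ('B', 'C')]
```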

Binary Feedback

Binary feedback is a simpler form of feedback where humans indicate whether an output is good or bad. This is often collected via "thumbs up" or "thumbs down" ratings. While easier to collect, binary feedback can be noisier and less informative than preference feedback.
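One simple way to use binary feedback, sketched here as an assumption rather than a method described in the survey, is to treat $\sigma(r(x, y))$ as the probability of a thumbs-up and train the reward model with binary cross-entropy. The function names and the per-example float scores are illustrative.

```python
import math
from typing import Iterable, Tuple


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def binary_feedback_loss(score: float, thumbs_up: bool) -> float:
    """Binary cross-entropy with P(thumbs up) = sigmoid(score).

    For a thumbs-down label, -log(1 - sigmoid(s)) equals -log(sigmoid(-s)).
    """
    return neg_log_sigmoid(score if thumbs_up else -score)


def mean_binary_loss(batch: Iterable[Tuple[float, bool]]) -> float:
    """Average loss over (score, thumbs_up) examples."""
    losses = [binary_feedback_loss(s, up) for s, up in batch]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    print(mean_binary_loss([(2.0, True), (-1.5, False), (0.3, False)]))
```

Each label here is judged in isolation rather than against an alternative response, which is the practical difference from the pairwise loss used with preference feedback.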

Reinforcement Learning (RL) Approaches

RL is a key mechanism for aligning LLMs. The paper distinguishes reference-based from reference-free RL methods. Reference-based methods, such as PPO, keep the updated policy close to a reference model (typically the initial SFT model), while reference-free approaches, such as SimPO, drop the reference model entirely. The survey also discusses strategies for length control and alternative divergence measures for preserving response diversity.

Reference-based RL

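Reference-based RL methods, like PPO, update the LLM's policy while keeping it close to a reference model, typically the initial supervised fine-tuned (SFT) model. This is usually enforced with a KL divergence penalty in the RL objective:

$$\max_{\pi_\theta} \; \mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi_\theta(\cdot \mid x)}\big[r(x, y)\big] - \beta \, \mathbb{D}_{\mathrm{KL}}\big[\pi_\theta(y \mid x) \,\|\, \pi_{\mathrm{ref}}(y \mid x)\big]$$

where $\pi_\theta$ is the current policy, $\pi_{\mathrm{ref}}$ is the reference policy, $r(x, y)$ is the reward, and $\beta$ is a coefficient controlling the strength of the KL penalty.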
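The KL-penalized objective in this section can be estimated from responses sampled from the current policy: for a sampled $y$, the log-ratio $\log \pi_\theta(y \mid x) - \log \pi_{\mathrm{ref}}(y \mid x)$ is an unbiased single-sample estimate of the KL term. A minimal sketch, assuming the reward and both sequence log-probabilities are already available as floats; the function names and `beta` values are illustrative.

```python
from typing import Sequence, Tuple


def kl_penalized_reward(
    reward: float, policy_logp: float, ref_logp: float, beta: float = 0.1
) -> float:
    """r(x, y) minus beta times a single-sample KL estimate for the sampled y."""
    return reward - beta * (policy_logp - ref_logp)


def estimate_objective(
    samples: Sequence[Tuple[float, float, float]], beta: float = 0.1
) -> float:
    """Monte Carlo estimate of E[r] - beta * KL over (reward, policy_logp, ref_logp).

    The samples are assumed to be drawn from the current policy pi_theta.
    """
    total = sum(kl_penalized_reward(r, lp, lr, beta) for r, lp, lr in samples)
    return total / len(samples)


if __name__ == "__main__":
    batch = [(1.0, -5.0, -5.0), (0.0, -4.0, -5.0)]
    print(estimate_objective(batch, beta=0.5))  # 0.25
```

A larger `beta` pulls the policy more strongly toward the reference. Many PPO-based RLHF implementations apply this penalty per token rather than once per sequence, but the sequence-level form above matches the objective as written.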

Reference-free RL

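Reference-free methods, like SimPO, do not constrain the policy to stay close to a reference model. SimPO, for instance, uses the length-normalized log-probability of a response under the current policy as its implicit reward, so no reference model is needed during training. Dropping the reference constraint allows more exploration but can also lead to instability and divergence.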
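SimPO's loss can be sketched as follows, assuming summed log-probabilities and token counts for the chosen and rejected responses are given. The implicit reward is the average per-token log-probability scaled by $\beta$, and the reward gap must exceed a target margin $\gamma$; note that no reference log-probabilities appear. The defaults `beta=2.0` and `gamma=0.5` are illustrative placeholders, not tuned values, and `simpo_loss` is a hypothetical helper name.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """Numerically stable -log(sigmoid(z))."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def simpo_loss(
    logp_chosen: float,
    len_chosen: int,
    logp_rejected: float,
    len_rejected: int,
    beta: float = 2.0,
    gamma: float = 0.5,
) -> float:
    """SimPO-style loss: length-normalized implicit rewards plus a target margin."""
    # Dividing by length keeps the reward from scaling with the number of tokens.
    chosen_reward = beta * logp_chosen / len_chosen
    rejected_reward = beta * logp_rejected / len_rejected
    return neg_log_sigmoid(chosen_reward - rejected_reward - gamma)


if __name__ == "__main__":
    # Equal per-token log-probability: the margin gamma still yields a loss above log(2).
    print(simpo_loss(-10.0, 10, -20.0, 20))
```

The length normalization is one of the length-control strategies mentioned above: summed log-probabilities become more negative as responses grow, so averaging per token avoids systematically favoring shorter outputs.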

Optimization Techniques

The survey covers several optimization approaches and emphasizes the benefits of iterative and online optimization, in which the model is repeatedly refined on newly collected data. It also discusses whether SFT and alignment should be performed separately or merged; PAFT, for example, performs SFT and preference alignment in parallel and then merges the resulting models to mitigate catastrophic forgetting.

Iterative Optimization

Iterative optimization repeatedly fine-tunes the LLM on new data, allowing the model to keep improving its alignment with human preferences. Online optimization is a special case in which the model is updated continuously as new data, typically sampled from the current policy, becomes available.
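The round-based structure of iterative optimization can be sketched as a loop that alternates between collecting fresh preference data from the current policy and updating on it. Everything below is a hypothetical toy: the "policy" is a dictionary of scores over three canned responses, `TRUE_QUALITY` stands in for human or AI labelers, and `toy_update` replaces a real training step such as a DPO pass.

```python
from typing import Callable, Dict, List, Tuple

Policy = Dict[str, float]
Pair = Tuple[str, str]  # (preferred, dispreferred)


def iterative_alignment(
    policy: Policy,
    rounds: int,
    sample_pairs: Callable[[Policy], List[Pair]],
    update: Callable[[Policy, List[Pair]], Policy],
) -> Policy:
    """Alternate between labeling on-policy data and updating the policy."""
    for _ in range(rounds):
        pairs = sample_pairs(policy)    # new data comes from the current policy
        policy = update(policy, pairs)  # one alignment step on that data
    return policy


# Hypothetical labeler: higher true quality is preferred.
TRUE_QUALITY = {"a": 0.9, "b": 0.5, "c": 0.1}


def toy_sample_pairs(policy: Policy) -> List[Pair]:
    """Compare the two responses the policy currently ranks highest."""
    first, second = sorted(policy, key=policy.get, reverse=True)[:2]
    if TRUE_QUALITY[first] >= TRUE_QUALITY[second]:
        return [(first, second)]
    return [(second, first)]


def toy_update(policy: Policy, pairs: List[Pair], lr: float = 0.5) -> Policy:
    """Raise the score of each preferred response and lower the other."""
    new_policy = dict(policy)
    for preferred, dispreferred in pairs:
        new_policy[preferred] += lr
        new_policy[dispreferred] -= lr
    return new_policy


if __name__ == "__main__":
    start = {"a": 0.0, "b": 1.0, "c": 2.0}
    final = iterative_alignment(start, 10, toy_sample_pairs, toy_update)
    print(max(final, key=final.get))  # prints: a
```

Because each round compares whatever the current policy ranks highest, the labeled data shifts as the policy improves; a single offline pass over the initial comparisons would never have surfaced response "a".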

Parallel Fine-Tuning

Parallel fine-tuning, as in PAFT, fine-tunes separate copies of the same base LLM on different objectives (for example, one with SFT and one with preference alignment) and then merges them. This can mitigate catastrophic forgetting and improve overall performance.
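The merge step in parallel fine-tuning can be illustrated with simple linear interpolation of parameters, assuming both copies started from the same base model and share parameter names and shapes. This is a stand-in: PAFT's actual merging procedure may differ, and `merge_linear` and `alpha` are hypothetical names used only for illustration.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def merge_linear(sft_params: Params, pref_params: Params, alpha: float = 0.5) -> Params:
    """Element-wise interpolation: alpha * sft + (1 - alpha) * pref."""
    if sft_params.keys() != pref_params.keys():
        raise ValueError("models must share parameter names")
    merged: Params = {}
    for name, sft_values in sft_params.items():
        pref_values = pref_params[name]
        if len(sft_values) != len(pref_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            alpha * s + (1.0 - alpha) * p for s, p in zip(sft_values, pref_values)
        ]
    return merged


if __name__ == "__main__":
    sft = {"layer.weight": [1.0, 3.0]}
    pref = {"layer.weight": [3.0, 5.0]}
    print(merge_linear(sft, pref))  # {'layer.weight': [2.0, 4.0]}
```

Here `alpha` controls the balance between the SFT and preference-aligned behaviors; real models would store tensors rather than Python lists, but the per-parameter logic is the same.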

Key Findings and Implications

The paper highlights performance differences between RLHF, which trains a separate reward model, and direct optimization methods like DPO. Implicit reward methods like DPO simplify the alignment pipeline but may face scalability challenges with larger models. Listwise preference optimization (e.g., LiPO), which learns from rankings over lists of responses, shows the potential of richer structured preference data for large-scale alignment. The trade-offs between complexity, computational overhead, and alignment efficacy remain significant considerations.

Future Research Directions

The paper identifies the need for standardized, unified benchmarks for evaluating alignment. Whether novel methods, such as Nash learning and integrated SFT-alignment approaches, remain feasible and scale to larger models is still an open research question. Improving how binary feedback is used and exploring AI-generated helpful feedback are also important future directions.

Conclusion

The survey paper "A Comprehensive Survey of LLM Alignment Techniques: RLHF, RLAIF, PPO, DPO and More" (Wang et al., 23 Jul 2024) provides a detailed overview of current methods for aligning LLMs with human values. It categorizes techniques across reward models, feedback mechanisms, reinforcement learning, and optimization, summarizing existing work and highlighting research gaps. The paper also offers guidance for future research and practical implementations in AI alignment, emphasizing the trade-offs between alignment strategies and their scalability.