Modular Sentence Encoders: A Novel Framework for Enhancing Multilinguality

The paper addresses two problems of multilingual sentence encoders (MSEs). First, sharing parameters across languages degrades monolingual performance. Second, optimizing for cross-lingual tasks trades off against monolingual quality. The proposed framework takes a modular approach to sentence encoding that separates monolingual specialization from cross-lingual alignment, with the goal of mitigating the "curse of multilinguality."

Core Contributions

- Modular Architecture:

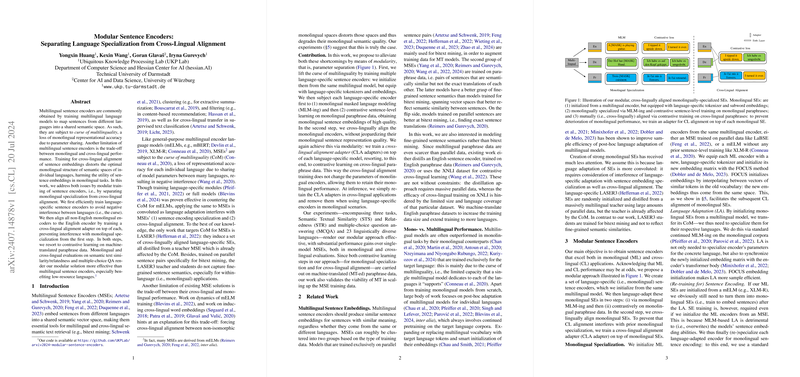

- The paper underscores a two-step modular training approach. Initially, language-specific sentence encoders are trained to avoid negative interference between languages using monolingual data. Subsequently, all non-English encoders are aligned with the English encoder using cross-lingual alignment adapters.

- The use of contrastive learning, based on machine-translated paraphrase data, ensures that the training process benefits both monolingual and cross-lingual performance.

- Empirical Evaluations:

  - The paper evaluates monolingual and cross-lingual performance on standard tasks, namely Semantic Textual Similarity (STS) and multiple-choice question answering (MCQA). The reported results show clear gains over conventional MSEs, particularly for low-resource languages.

- Effectiveness of Machine-Translated Paraphrase Data:

  - The results indicate that machine-translated paraphrase data is an efficient way to scale up training data, which makes it practical to extend training to additional languages with limited resources.
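The two training stages described above can be illustrated with a short NumPy sketch. This is not the authors' implementation, and it rests on several assumptions: the encoders are replaced by precomputed embedding matrices; the contrastive objective is a standard InfoNCE loss with in-batch negatives (a common choice for paraphrase-based training, though the exact loss is not specified here); and `AlignmentAdapter` is a hypothetical residual linear map trained with mean squared error, which stands in for whatever alignment objective the paper actually uses.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so that dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def info_nce_loss(anchors: np.ndarray, positives: np.ndarray, temperature: float = 0.05) -> float:
    """Contrastive (InfoNCE) loss with in-batch negatives.

    Row i of `anchors` and row i of `positives` embed a paraphrase pair;
    every other row of `positives` acts as a negative for anchor i.
    """
    logits = l2_normalize(anchors) @ l2_normalize(positives).T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))  # correct pairs lie on the diagonal


class AlignmentAdapter:
    """Hypothetical residual linear adapter that maps embeddings from a
    non-English encoder toward the space of a frozen English encoder."""

    def __init__(self, dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(scale=0.01, size=(dim, dim))

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return x + x @ self.W  # the residual path keeps the original embedding

    def fit_step(self, x_src: np.ndarray, y_en: np.ndarray, lr: float = 0.1) -> float:
        """One gradient-descent step on the mean squared error to English targets.
        Only the adapter weights change; both encoders stay frozen.
        Returns the loss measured before the update."""
        diff = self(x_src) - y_en
        loss = float(np.mean(np.sum(diff ** 2, axis=1)))
        grad = 2.0 * x_src.T @ diff / x_src.shape[0]
        self.W -= lr * grad
        return loss


# Toy usage with random stand-in embeddings (no real encoders involved).
rng = np.random.default_rng(0)
source = rng.normal(size=(64, 16))                                  # "non-English" embeddings
english = source @ (np.eye(16) + 0.3 * rng.normal(size=(16, 16)))  # paired English targets

# Stage 1: paraphrase pairs are near-duplicates, so the contrastive loss is low.
stage1_loss = info_nce_loss(source, source + 0.01 * rng.normal(size=source.shape))

# Stage 2: train only the adapter to pull the source space onto the English space.
adapter = AlignmentAdapter(dim=16)
losses = [adapter.fit_step(source, english) for _ in range(50)]
```

Keeping the adapter residual means that a zero-initialized adapter would leave the monolingual embedding unchanged, which fits the framework's aim of preserving monolingual quality while adding alignment.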

Numerical Results

- The framework outperforms existing MSE models on both STS and MCQA.

- On STS in particular, the improvements are consistent across datasets, suggesting that the approach preserves monolingual quality while improving cross-lingual alignment.
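For readers unfamiliar with how sentence encoders are scored on these tasks, the sketch below assumes the common embedding-based protocols: STS is measured as the Spearman correlation between cosine similarities of sentence pairs and human similarity ratings, and MCQA is treated as picking the answer option whose embedding is closest to the question. These protocols are assumptions for illustration; the exact setup used in the paper may differ. Function names are hypothetical, and Spearman correlation is implemented directly (Pearson correlation of tie-averaged ranks) to avoid extra dependencies.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Row-wise cosine similarity between two embedding matrices of equal shape."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return np.sum(a * b, axis=-1)


def average_ranks(x: np.ndarray) -> np.ndarray:
    """Ranks starting at 1; tied values share the average of their ranks."""
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=float)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def spearman(x, y) -> float:
    """Spearman correlation = Pearson correlation of the tie-averaged ranks."""
    rx = average_ranks(np.asarray(x, dtype=float))
    ry = average_ranks(np.asarray(y, dtype=float))
    return float(np.corrcoef(rx, ry)[0, 1])


def sts_score(emb1: np.ndarray, emb2: np.ndarray, gold) -> float:
    """STS: correlate model cosine similarities with human similarity ratings."""
    return spearman(cosine(emb1, emb2), gold)


def answer_mcqa(question_emb: np.ndarray, option_embs: np.ndarray) -> int:
    """Similarity-based MCQA: choose the option closest to the question embedding."""
    sims = cosine(np.broadcast_to(question_emb, option_embs.shape), option_embs)
    return int(np.argmax(sims))


question = np.array([1.0, 0.0])
options = np.array([[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0]])
print(answer_mcqa(question, options))  # prints 1
```

In a cross-lingual setting, the two sides of each STS pair (or the question and the options) would come from encoders for different languages, which is exactly where the alignment step matters.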

Implications

Practical Implications:

- Because training is modular, a new language can be added by training its own encoder and aligning it to the existing English encoder; the encoders already in the system do not need to be retrained.

- Avoiding full retraining yields substantial computational savings while maintaining performance, which makes the approach a scalable way to extend NLP applications to new languages.
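The language-expansion argument can be made concrete with a small registry sketch. Everything here is hypothetical: `ModularSentenceEncoder`, `toy_encoder`, and the `cross_lingual` flag are illustrative names, and the assumption that the adapter is applied only when embeddings must be comparable across languages (while monolingual tasks use the raw language-specific encoder) is ours, consistent with the framework's separation of specialization from alignment but not taken from the paper's code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

import numpy as np

Encoder = Callable[[List[str]], np.ndarray]
Adapter = Callable[[np.ndarray], np.ndarray]


@dataclass
class ModularSentenceEncoder:
    """Hypothetical container: a frozen English encoder, one monolingual encoder
    per additional language, and one alignment adapter per non-English language."""
    english: Encoder
    encoders: Dict[str, Encoder] = field(default_factory=dict)
    adapters: Dict[str, Adapter] = field(default_factory=dict)

    def add_language(self, lang: str, encoder: Encoder, adapter: Adapter) -> None:
        # Adding a language only inserts new entries; nothing existing is retrained.
        if lang == "en" or lang in self.encoders:
            raise ValueError(f"language {lang!r} is already registered")
        self.encoders[lang] = encoder
        self.adapters[lang] = adapter

    def encode(self, texts: List[str], lang: str, cross_lingual: bool = True) -> np.ndarray:
        if lang == "en":
            return self.english(texts)
        emb = self.encoders[lang](texts)
        # Assumption: the adapter is applied only for cross-lingual use;
        # monolingual tasks use the language-specific encoder directly.
        return self.adapters[lang](emb) if cross_lingual else emb


def toy_encoder(texts: List[str]) -> np.ndarray:
    """Stand-in encoder: [character count, space count] per sentence."""
    return np.array([[len(t), t.count(" ")] for t in texts], dtype=float)


model = ModularSentenceEncoder(english=toy_encoder)
model.add_language("de", toy_encoder, lambda x: 2.0 * x)  # toy "adapter"
print(model.encode(["ab c"], "de").tolist())                       # [[8.0, 2.0]]
print(model.encode(["ab c"], "de", cross_lingual=False).tolist())  # [[4.0, 1.0]]
```

The point of the design is that `add_language` never touches the English encoder or any previously registered language, which is where the computational savings come from.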

Theoretical Implications:

- The work highlights the trade-offs inherent in multilingual training that underlie the curse of multilinguality, and its framework could carry over to other NLP settings that must balance monolingual and cross-lingual capabilities.

- The paper's examination of the role of multi-parallel data in aligning monolingual encoders opens avenues for further research into efficient cross-lingual alignment techniques.
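Multi-parallel data contains the same sentence translated into several languages. The sketch below assumes a hypothetical format (one dictionary per sentence, keyed by language code) and shows one plausible reason such data suits this framework: each English sentence anchors a training pair for every other language at once, so all alignment adapters are trained toward the same English targets. The function name `alignment_pairs` and the data layout are illustrative only.

```python
from typing import Dict, List, Tuple


def alignment_pairs(
    multi_parallel: List[Dict[str, str]], pivot: str = "en"
) -> Dict[str, List[Tuple[str, str]]]:
    """Group (non-pivot sentence, pivot sentence) pairs by language.

    Rows without a pivot-language translation cannot anchor alignment and are skipped.
    """
    pairs: Dict[str, List[Tuple[str, str]]] = {}
    for row in multi_parallel:
        if pivot not in row:
            continue
        for lang, sentence in row.items():
            if lang != pivot:
                pairs.setdefault(lang, []).append((sentence, row[pivot]))
    return pairs


data = [
    {"en": "Hello", "de": "Hallo", "fr": "Bonjour"},
    {"en": "Thanks", "de": "Danke"},
    {"de": "Nur Deutsch"},  # no English side, so it is skipped
]
print(alignment_pairs(data))
# {'de': [('Hallo', 'Hello'), ('Danke', 'Thanks')], 'fr': [('Bonjour', 'Hello')]}
```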

Future Directions

- The paper identifies room for further refining the cross-lingual adapters and for applying the modular approach to multilingual settings beyond sentence encoding.

- Future work could also test different adapter architectures and encoder initialization strategies to improve the modular approach further.

In conclusion, the paper offers a well-supported framework for building sentence encoders that handle many languages, addressing key challenges in the field. Its combination of modular, language-specific encoders with efficient cross-lingual alignment is a notable step forward for multilingual NLP models.