LMMs-Eval: Reality Check on the Evaluation of Large Multimodal Models

The paper "LMMs-Eval: Reality Check on the Evaluation of Large Multimodal Models" by Zhang et al. introduces a benchmark framework designed to rigorously evaluate Large Multimodal Models (LMMs). Although evaluation of large language models (LLMs) has advanced significantly, comprehensive benchmarking of LMMs remains underexplored, which motivates a robust and standardized evaluation framework.

Key Contributions

The contributions of this work are threefold:

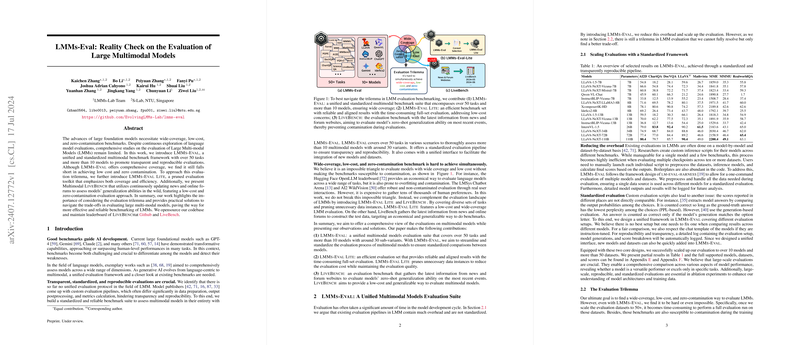

- LMMs-Eval: A unified and standardized benchmark suite that covers over 50 tasks and more than 10 models, enabling comprehensive, apples-to-apples comparison among models.

- LMMs-Eval Lite: To address evaluation cost without sacrificing coverage, this pruned version of LMMs-Eval is designed to balance efficiency and robust evaluation.

- LiveBench: For evaluating models on real-world, dynamically updated data, LiveBench provides a low-cost, zero-contamination approach that draws on continuously updated news and online forum data.

Standardized Framework

LMMs-Eval aims to reduce redundant effort and ensure standardized comparisons. Current model evaluations suffer from inconsistent practices (e.g., different data preparation and metric calculation methods), which makes reported scores hard to compare. LMMs-Eval addresses this by providing one-command evaluation across multiple models and datasets, with standardized data preprocessing, inference, and metric calculation. The reported results cover models including LLaVA-1.5, Qwen-VL, and InternVL, on tasks ranging from captioning on COCO to complex QA on GQA.

The fair-comparison table in the paper illustrates the standardized comparisons that LMMs-Eval enables. For instance, LLaVA-NeXT-34B scores 80.4% on RealWorldQA, which is competitive with the other models evaluated. Because every evaluation runs through the same pipeline, the framework reduces manual overhead and keeps results comparable.
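The idea of routing every model and task through one shared pipeline can be sketched in a few lines. This is a toy illustration only, not the LMMs-Eval API: the `Model` and `Task` types, the `normalize`, `exact_match`, and `evaluate` helpers, and the text-only toy task are all assumptions made for the example (a real multimodal harness would also pass images to the model).

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types for illustration: a model maps a prompt to an answer,
# and a task is a list of (question, reference answer) pairs.
Model = Callable[[str], str]
Task = List[Tuple[str, str]]


def normalize(text: str) -> str:
    """Shared answer normalization, applied identically to every model and task."""
    return " ".join(text.lower().strip().rstrip(".").split())


def exact_match(prediction: str, reference: str) -> float:
    """Shared metric: 1.0 if the normalized strings agree, else 0.0."""
    return 1.0 if normalize(prediction) == normalize(reference) else 0.0


def evaluate(models: Dict[str, Model], tasks: Dict[str, Task]) -> Dict[str, Dict[str, float]]:
    """Run every model on every task with the same preprocessing and metric."""
    results: Dict[str, Dict[str, float]] = {}
    for model_name, model in models.items():
        results[model_name] = {}
        for task_name, examples in tasks.items():
            scores = [exact_match(model(question), ref) for question, ref in examples]
            results[model_name][task_name] = sum(scores) / len(scores)
    return results


# Toy stand-ins for real models and datasets.
tasks = {
    "toy_qa": [
        ("What color is the sky?", "blue"),
        ("How many wheels on a bicycle?", "two"),
    ]
}
answers = {"What color is the sky?": "blue", "How many wheels on a bicycle?": "Two"}
models = {
    "always_blue": lambda prompt: "Blue.",
    "lookup": lambda prompt: answers[prompt],
}

results = evaluate(models, tasks)
print(results)
```

Because normalization and scoring live in one place, a difference between two models' scores reflects the models rather than differences in evaluation code.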

Addressing the Evaluation Trilemma

The paper identifies an evaluation trilemma: it is difficult to achieve wide coverage, low cost, and zero contamination at the same time. Frameworks like Hugging Face’s Open LLM Leaderboard provide broad coverage but risk contamination and overfitting. Conversely, real-user evaluation platforms like AI2 WildVision are costly because they require extensive human interaction data.

LMMs-Eval Lite attempts to navigate these trade-offs by evaluating on a smaller yet representative subset of the full benchmark. It uses a k-center clustering algorithm to select which data instances to keep, so that the Lite scores remain highly correlated with the full benchmark results while evaluation cost drops. The cost figure in the paper shows a significant reduction in evaluation time with comparable performance metrics, making Lite a practical option for iterative model refinement.
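To make the pruning step concrete, here is a minimal sketch of the standard greedy (farthest-point) heuristic for the k-center problem. It is an assumption that this variant resembles what the paper uses; the summary above only names "k-center clustering", and the function name `greedy_k_center`, the 2-D toy points, and the choice of Euclidean distance are illustrative. In practice each point would be an embedding of a benchmark instance.

```python
import math
from typing import List, Sequence


def greedy_k_center(points: Sequence[Sequence[float]], k: int, first: int = 0) -> List[int]:
    """Pick k indices so that every point is close to some picked index.

    Greedy farthest-point heuristic: start from `first`, then repeatedly add
    the point that is farthest from all centers chosen so far.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    selected = [first]
    # min_dist[i] is the distance from point i to its nearest selected center.
    min_dist = [math.dist(p, points[first]) for p in points]
    while len(selected) < k:
        next_idx = max(range(len(points)), key=min_dist.__getitem__)
        selected.append(next_idx)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[next_idx]))
    return selected


# Toy "embeddings": two tight pairs and one isolated point.
points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
subset = greedy_k_center(points, k=3)
print(subset)
```

On this toy input the heuristic keeps one point from each of the three regions and drops the near-duplicates, which is the intuition behind keeping a small subset that still represents the full benchmark.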

LiveBench: Enhancing Real-World Evaluation

Addressing contamination issues involves not only benchmarking with clean data but also ensuring relevance and timeliness. LiveBench transitions from static to dynamic evaluation sources, gathering data from frequently updated websites like news outlets and forums. This setup tests models on their generalization capabilities more rigorously.

Their analysis reveals high contamination risks in existing benchmarks; for example, datasets like ChartQA show considerable overlap with pretraining data. LiveBench mitigates such risks by dynamically generating up-to-date evaluation datasets and focusing on zero-shot generalization. Monthly curated problem sets ensure continuous relevance.
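One simple way to quantify textual overlap between a benchmark item and a reference corpus is n-gram matching. The sketch below is an illustrative assumption, not the paper's procedure: the function names `ngrams` and `overlap_ratio`, the whitespace tokenization, the trigram default, and the toy strings are all made up for the example.

```python
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Return the set of word n-grams in a lowercased, whitespace-tokenized text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item: str, corpus: Iterable[str], n: int = 3) -> float:
    """Fraction of the item's n-grams that also occur somewhere in the corpus."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0  # item shorter than n tokens: nothing to compare
    corpus_grams: Set[Tuple[str, ...]] = set()
    for document in corpus:
        corpus_grams |= ngrams(document, n)
    return len(item_grams & corpus_grams) / len(item_grams)


# Toy corpus standing in for pretraining text.
corpus = ["the highest value in the chart is 42"]
seen = overlap_ratio("what is the highest value in the chart", corpus)
unseen = overlap_ratio("a completely new question about yesterday", corpus)
print(seen, unseen)
```

A high ratio flags an item as a possible contamination candidate; a freshly written question about recent content, as LiveBench aims to collect, would typically score near zero against an older corpus.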

The evaluation on LiveBench (reported in the paper's LiveBench results table) shows that while open-source models have made strides, commercial models such as GPT-4V and Claude-3 still outperform them in dynamically updated, real-world scenarios. This underscores the gap between academic benchmarks and practical model utility.

Implications and Future Directions

The implications of LMMs-Eval and its derivatives are significant. For researchers, it provides a robust tool for evaluating model performance across a broad spectrum of tasks under consistent conditions. Practically, it helps detect model weaknesses and areas for improvement. Conceptually, naming the evaluation trilemma gives future work on evaluation methodology an explicit set of trade-offs to reason about.

Future developments could focus on better balancing the trilemma’s three facets, or on new approaches that resolve it outright. Refining contamination detection methods that do not require access to training data is another avenue for exploration.

In conclusion, LMMs-Eval, along with its Lite and LiveBench variants, offers a comprehensive benchmarking solution for evaluating the growing suite of Large Multimodal Models. As the field continues to evolve, such frameworks will be essential for fostering advancements and ensuring the reliability and robustness of AI systems in diverse real-world applications.