Context Embeddings for Efficient Answer Generation in RAG

In the domain of LLMs, Retrieval-Augmented Generation (RAG) has emerged as a critical technique for overcoming the intrinsic knowledge limitations of these models by leveraging external sources of information. RAG extends the input with retrieved, relevant context documents, which significantly enhances performance on knowledge-intensive tasks. However, this augmentation comes at the cost of longer inputs. Longer inputs increase the model's decoding time and degrade the user experience through longer waits for responses.

The paper "Context Embeddings for Efficient Answer Generation in RAG" by Rau et al. proposes a novel method named COCOM (COntext COmpression Model) to address this efficiency challenge. COCOM compresses long contexts into a small set of Context Embeddings, accelerating generation without a substantial loss in performance.

Key Contributions

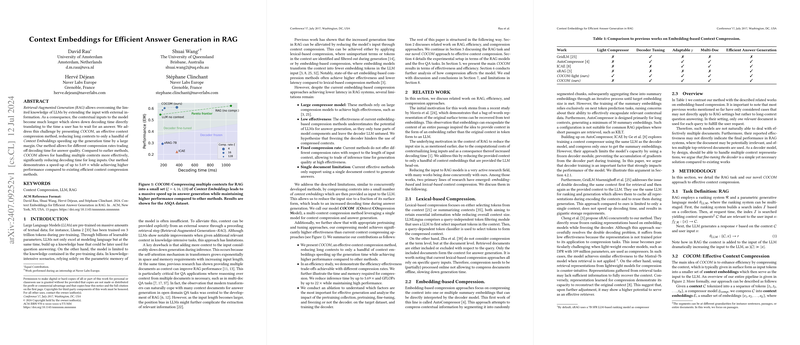

- Efficient Context Compression: COCOM compresses long contexts into a limited number of Context Embeddings rather than truncating them. This significantly speeds up generation, reducing decoding time by up to 5.69 times while achieving higher or competitive performance relative to existing methods.

- Trade-off Flexibility: The method allows users to adjust compression rates, offering a balance between decoding time and answer quality. This adaptability is particularly relevant in applications where performance requirements might vary in different operational contexts.

- Scalability with Multiple Contexts: COCOM proves effective in handling multiple context documents, an essential feature for tasks that necessitate reasoning over several documents. This scalability is achieved without compromising on performance and shows marked improvements over the baseline methods.

- Comparative Performance: The performance evaluation of COCOM shows substantial improvements over prior methods like ICAE and xRAG. The paper reports COCOM’s ability to handle up to five documents concurrently, demonstrating superior EM scores across widely recognized datasets such as Natural Questions and ASQA.

Methodology

Context Compression Mechanism

COCOM's primary innovation lies in its approach to context compression. A compression model reduces the input contexts to Context Embeddings. These embeddings replace the original tokenized context, shrinking the input the LLM has to process. The model learns this skill through pre-training tasks that teach it to compress a context and then reconstruct or continue it from the compressed form. The embeddings are contextualized representations that retain the key information from the original context while discarding redundant content.
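The following minimal Python sketch makes the data flow concrete. It is not COCOM's compressor. COCOM learns its compression with a trained model, whereas this toy version swaps in plain mean-pooling over fixed-size chunks of token vectors. The only purpose is to show how many vectors go in and how many come out. The names `compress_context` and `build_decoder_input` are hypothetical.

```python
from typing import List

Vector = List[float]


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average a non-empty list of equal-length vectors element-wise."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def compress_context(token_vecs: List[Vector], rate: int) -> List[Vector]:
    """Toy stand-in for a learned compressor (hypothetical, NOT COCOM's model).

    Groups every `rate` consecutive token vectors and mean-pools each group
    into one "context embedding", so n tokens become ceil(n / rate) vectors.
    """
    if rate < 1:
        raise ValueError("rate must be >= 1")
    return [mean_pool(token_vecs[i:i + rate]) for i in range(0, len(token_vecs), rate)]


def build_decoder_input(ctx_embs: List[Vector], question_vecs: List[Vector]) -> List[Vector]:
    """The compressed context takes the place of the context tokens in the decoder input."""
    return ctx_embs + question_vecs


if __name__ == "__main__":
    dim = 4
    context = [[float(t)] * dim for t in range(128)]  # 128 toy token vectors
    question = [[1.0] * dim for _ in range(12)]       # 12 toy question tokens
    ctx_embs = compress_context(context, rate=16)
    decoder_input = build_decoder_input(ctx_embs, question)
    print(len(context) + len(question), "->", len(decoder_input))  # 140 -> 20
```

Only the context shrinks. The question tokens are passed through unchanged. In COCOM the context embeddings come from a trained model rather than from averaging, and that training is what allows them to preserve the key information.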

Adaptable Compression Rates

By varying the compression rate, COCOM can manage the trade-off between fidelity and efficiency. For instance, with a compression rate of 64, an input context of 128 tokens is reduced to two embeddings. This shortens the input, and with it the decoding time, while still aiming to retain the essential contextual information. This flexibility allows the model to be tailored to specific application needs.
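The arithmetic behind compression rates is simple. With rate ξ, a context of n tokens becomes ⌈n/ξ⌉ embeddings. The sketch below tabulates this for the 128-token example and for five retrieved passages. It rests on several assumptions that are not taken from the paper:

- The helper names are hypothetical.
- A partial chunk is rounded up to one extra embedding.
- Each document is compressed independently.
- The passages are 128 tokens long, chosen purely for illustration.

```python
import math
from typing import Dict, List


def num_context_embeddings(n_tokens: int, rate: int) -> int:
    """Embeddings produced for one context at a given compression rate.

    Assumption: a partial final chunk still yields one embedding (ceiling).
    """
    return math.ceil(n_tokens / rate)


def decoder_context_length(doc_lengths: List[int], rate: int) -> int:
    """Context positions the decoder sees if each document is compressed separately."""
    return sum(num_context_embeddings(n, rate) for n in doc_lengths)


def rate_table(n_tokens: int, rates: List[int]) -> Dict[int, int]:
    """Map each compression rate to the resulting number of embeddings."""
    return {r: num_context_embeddings(n_tokens, r) for r in rates}


if __name__ == "__main__":
    print(rate_table(128, [4, 16, 64, 128]))  # {4: 32, 16: 8, 64: 2, 128: 1}
    docs = [128] * 5  # five illustrative 128-token passages
    # rate 1 stands for the uncompressed baseline
    print(decoder_context_length(docs, 1), "->", decoder_context_length(docs, 16))  # 640 -> 40
```

Fewer input positions is where the speed-up comes from. The price is that each embedding must carry more information, which is the fidelity side of the trade-off.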

Joint Training Paradigm

The model is trained jointly to perform both context compression and answer generation. This joint training optimizes the embeddings to carry the information that the subsequent generation step needs. Training begins with pre-training on two tasks: auto-encoding of contexts, and language modeling from context embeddings. Both tasks are designed to instill an efficient compression mechanism in the model.
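The training tasks differ mainly in what gets compressed and what the decoder must generate. The sketch below builds token-level training examples for each task. It is an illustrative assumption, not the paper's data pipeline:

- The `Example` type and helper names are hypothetical.
- `to_compress` marks tokens the decoder would see only as context embeddings.
- The 50/50 split for the language-modeling task was chosen just for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    to_compress: List[str]     # tokens fed to the compressor; the decoder sees only their embeddings
    decoder_prompt: List[str]  # plain tokens given to the decoder (empty during pre-training)
    target: List[str]          # tokens the decoder is trained to generate


def autoencoding_example(context: List[str]) -> Example:
    """Auto-encoding: reconstruct the full context from its compressed form."""
    return Example(to_compress=context, decoder_prompt=[], target=context)


def lm_from_embeddings_example(text: List[str], split: float = 0.5) -> Example:
    """Language modeling: continue the text given only its compressed prefix.

    The split fraction is an illustrative assumption.
    """
    cut = max(1, int(len(text) * split))
    return Example(to_compress=text[:cut], decoder_prompt=[], target=text[cut:])


def qa_example(context: List[str], question: List[str], answer: List[str]) -> Example:
    """Answer generation: answer the question from the compressed context."""
    return Example(to_compress=context, decoder_prompt=question, target=answer)


if __name__ == "__main__":
    tokens = "the cat sat on the mat".split()
    ex = lm_from_embeddings_example(tokens)
    print(ex.to_compress, ex.target)  # ['the', 'cat', 'sat'] ['on', 'the', 'mat']
```

In this sketch's reading, every task trains the decoder to generate `target` from the compressed input. The error signal therefore reaches the compressor as well as the decoder, which is what makes the training joint.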

Results and Insights

The experimental results underscore the efficacy of COCOM. The paper compares COCOM with other state-of-the-art methods like ICAE and xRAG, providing empirical evidence of its superior performance. Notably, COCOM outperforms these methods even when they employ significantly larger models (e.g., Mixtral-8x7B). For example, in Exact Match (EM) scores, COCOM achieves 55.4% on Natural Questions (NQ) with a compression rate of 4, compared to the next best 26.5% from xRAG using Mixtral-8x7B.
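Exact Match counts a prediction as correct only if, after light normalization, it equals one of the reference answers. The sketch below uses the widely used SQuAD-style normalization: lowercasing, stripping punctuation and English articles, and collapsing whitespace. The paper's own evaluation script may normalize differently. Treat this as an illustration of the metric, not a way to reproduce the reported numbers.

```python
import re
import string
from typing import List

_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles, squash spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, references: List[str]) -> bool:
    """True if the normalized prediction equals any normalized reference."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(ref) for ref in references)


def em_score(predictions: List[str], references: List[List[str]]) -> float:
    """Percentage of predictions that exactly match at least one reference."""
    if not predictions:
        return 0.0
    hits = sum(exact_match(p, refs) for p, refs in zip(predictions, references))
    return 100.0 * hits / len(predictions)


if __name__ == "__main__":
    preds = ["The Eiffel Tower", "Paris, France", "1889"]
    golds = [["Eiffel Tower"], ["Paris"], ["1889"]]
    print(em_score(preds, golds))  # 66.66666666666667
```

EM is strict. The second prediction contains the right answer but still scores zero because of the extra words.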

Furthermore, the paper's ablation studies reveal critical insights into the model's behavior. Fine-tuning the decoder, which existing methods do not do, has a significant impact on performance and shows the value of tuning the whole model. Another notable finding is that performance drops at higher compression rates. This reflects the intrinsic difficulty of preserving information under aggressive compression.

Implications and Future Directions

The research sets a new benchmark in the domain of efficient RAG systems by substantially reducing the decoding time while maintaining high performance. The implications for practical deployment are significant: faster response times in interactive applications and decreased computational overhead, translating to cost savings and enhanced user experience.

Future research may extend the application of COCOM beyond QA tasks to other domains requiring efficient handling of extensive contexts, such as summarization or machine translation. Additionally, investigating advanced pre-training objectives or hybrid architectures could further enhance the performance and adaptability of context compression models, paving the way for broader applicability and even more efficient NLP systems.

In conclusion, COCOM, the method presented by Rau et al., is a notable advancement in the optimization of RAG frameworks and offers both practical and theoretical contributions to the field of AI-driven text generation.