CacheGen: An Approach to KV Cache Compression and Streaming for Efficient LLM Serving

The paper "CacheGen: KV Cache Compression and Streaming for Fast LLM Serving" addresses a latency problem in LLM serving systems: the delay incurred when processing long-context inputs. As LLMs take on more complex tasks, their inputs grow longer, and loading that context adds significant latency before any output is generated. CacheGen targets this context-loading step, speeding up the fetching and processing of contexts by compressing and streaming the KV cache.

Key Concepts and Methodologies

- KV Cache Encoding:

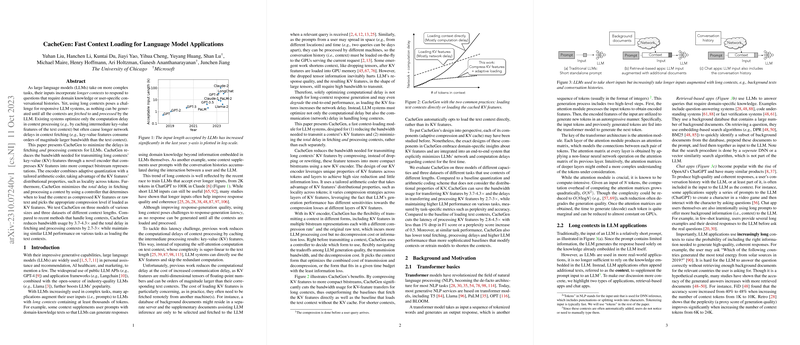

- CacheGen employs a custom KV cache encoder that reduces the network delay of transferring KV caches, which are large tensors. The encoder combines custom quantization with arithmetic coding and exploits two observed distributional properties of KV caches: token-wise locality (values at nearby token positions tend to be similar) and layer-wise sensitivity to data loss (layers differ in how much lossy compression they tolerate).

- By encoding KV caches into compact bitstream representations, CacheGen significantly reduces bandwidth requirements, thus addressing one of the primary bottlenecks in LLM latency. The encoding process is designed to introduce minimal computational overhead, maintaining system efficiency.
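The two properties named above can be illustrated with a minimal sketch. It is not the paper's encoder: the synthetic data, the group size, the bin sizes, and the rule that earlier layers get finer bins are all assumptions made for illustration, and an empirical entropy estimate stands in for a real arithmetic coder.

```python
import math
import random
from collections import Counter

def quantize(values, bin_size):
    """Uniform quantization: map each value to an integer bin index."""
    return [round(v / bin_size) for v in values]

def delta_encode(symbols, group_size):
    """Keep the first symbol of each token group (the anchor) and store
    every other symbol as its difference from that anchor."""
    out = []
    for start in range(0, len(symbols), group_size):
        group = symbols[start:start + group_size]
        out.append(group[0])
        out.extend(s - group[0] for s in group[1:])
    return out

def delta_decode(encoded, group_size):
    """Invert delta_encode exactly (integer symbols round-trip)."""
    out = []
    for start in range(0, len(encoded), group_size):
        group = encoded[start:start + group_size]
        out.append(group[0])
        out.extend(group[0] + d for d in group[1:])
    return out

def entropy_bits(symbols):
    """Empirical Shannon entropy in bits per symbol: roughly what an
    ideal entropy coder such as arithmetic coding would spend."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())

def bin_size_for_layer(layer, num_layers, fine=0.1, coarse=0.4):
    """Hypothetical policy: finer bins for the first third of the layers."""
    return fine if layer < num_layers // 3 else coarse

# Synthetic stand-in for one channel of one layer across 2000 tokens:
# a smooth trend plus small noise, so neighbouring tokens are similar.
random.seed(0)
values = [5 * math.sin(t / 200) + random.gauss(0, 0.03) for t in range(2000)]

symbols = quantize(values, bin_size_for_layer(layer=0, num_layers=32))
deltas = delta_encode(symbols, group_size=10)
raw_bits = entropy_bits(symbols)
delta_bits = entropy_bits(deltas)
coarse_bits = entropy_bits(quantize(values, bin_size_for_layer(31, 32)))

print(f"raw symbols:   {raw_bits:.2f} bits/value")
print(f"delta symbols: {delta_bits:.2f} bits/value")
print(f"coarse bins:   {coarse_bits:.2f} bits/value")
```

On this synthetic data the delta symbols cluster near zero, so their entropy is lower than that of the raw symbols. Coarser bins lower it further at the cost of precision, which is why a per-layer bin size is a natural knob when layers differ in sensitivity.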

- Adaptation to Bandwidth Variations:

- The streaming module in CacheGen adapts to fluctuations in available network bandwidth. When bandwidth drops, the system either switches to a higher compression level or falls back to sending the context as text and recomputing its KV cache on the fly.

- This adaptability ensures that the context-loading delay remains within acceptable limits, adhering to service-level objectives without compromising the accuracy or quality of the generated LLM responses.
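The adaptation logic described above can be sketched as a per-chunk decision. This is a simplified illustration, not CacheGen's actual controller: the `Option` type, the quality ranking, the even split of the remaining delay budget, and every size and timing value are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Option:
    name: str
    size_bytes: float   # bytes sent over the network for one chunk
    compute_s: float    # time spent after arrival (decode or prefill)
    quality: int        # hypothetical ranking, higher is better

# Hypothetical per-chunk numbers, chosen only to keep the example readable.
OPTIONS = [
    Option("kv_level_0", 40e6, 0.01, 3),  # lightest compression
    Option("kv_level_1", 20e6, 0.01, 2),
    Option("kv_level_2", 10e6, 0.01, 1),  # heaviest compression
    Option("text", 6e3, 0.50, 3),         # send text, recompute the KV cache
]

def delay_s(option, bandwidth_bps):
    """Transfer time plus post-arrival compute time for one chunk."""
    return option.size_bytes * 8 / bandwidth_bps + option.compute_s

def choose(options, bandwidth_bps, budget_s):
    """Pick the best-quality option that fits the budget (ties go to the
    faster one); if nothing fits, pick whatever is fastest."""
    feasible = [o for o in options if delay_s(o, bandwidth_bps) <= budget_s]
    if feasible:
        return max(feasible,
                   key=lambda o: (o.quality, -delay_s(o, bandwidth_bps)))
    return min(options, key=lambda o: delay_s(o, bandwidth_bps))

def plan_stream(bandwidth_trace_bps, slo_s, options=OPTIONS):
    """Walk the chunks in order, splitting the remaining delay budget
    evenly across the chunks that are still to be sent."""
    remaining = slo_s
    plan = []
    for i, bw in enumerate(bandwidth_trace_bps):
        budget = remaining / (len(bandwidth_trace_bps) - i)
        option = choose(options, bw, budget)
        remaining -= delay_s(option, bw)
        plan.append(option.name)
    return plan

# Bandwidth falls from 2 Gbps to 0.5 Gbps to 0.1 Gbps across three chunks.
plan = plan_stream([2e9, 5e8, 1e8], slo_s=0.9)
print(plan)
```

With these made-up numbers the plan moves from the lightest compression level to a heavier one as bandwidth falls, then switches to text recomputation once even the smallest KV encoding would take longer to transfer than the prefill would take to run.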

Experimental Evaluation and Results

The experimental evaluations showcased CacheGen's performance across various LLMs and datasets, demonstrating substantial improvements in time-to-first-token (TTFT) metrics:

- Performance Metrics: CacheGen reduced KV cache size by 3.7-4.3× and the total delay in fetching and processing contexts by 2.7-3.2×, compared with recent systems that reuse KV caches. These improvements came without significant degradation in response quality.

- Comparison with Baselines: Compared to both text context transmission and basic quantization, CacheGen maintained a superior trade-off between transmission delay reduction and LLM accuracy across diverse workloads and network conditions.
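One way to relate a size reduction to a delay reduction is a two-term delay model. The model is an assumption made here for illustration, not the paper's own analysis. Let $S$ be the KV cache size, $B$ the network bandwidth, $T_p$ the time spent on everything other than transfer, and $r$ the factor by which compression shrinks the cache:

$$
\text{speedup}(r) = \frac{S/B + T_p}{S/(rB) + T_p} = r \cdot \frac{S/B + T_p}{S/B + r\,T_p} < r \quad \text{for } r > 1,\ T_p > 0 .
$$

Under this assumption the end-to-end speedup is always smaller than the size reduction. It approaches $r$ only when transfer time dominates and shrinks toward 1 as $T_p$ grows. The model does not claim to explain the reported figures.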

Implications and Future Directions

CacheGen offers considerable practical advantages by optimizing KV cache management, thus facilitating more efficient use of bandwidth and computational resources in LLM serving environments. By addressing the latency challenges associated with long contexts, CacheGen has the potential to enhance user experience by enabling faster and more responsive LLM applications.

The theoretical implications of this work suggest new avenues for engineering KV caches in LLMs, particularly in environments where network bandwidth is variable or constrained. The observations about KV cache characteristics may guide future research in designing even more effective compression algorithms tailored specifically for tensor-based data structures in neural networks.

Looking ahead, potential developments could include integrating CacheGen within broader frameworks for distributed inference, enabling more seamless and cost-effective deployment of LLMs across computational infrastructures. Additionally, exploring CacheGen's compatibility with emergent memory-efficient architectures or investigating its application within multi-tenant LLM platforms could extend its utility and impact.

In conclusion, CacheGen targets the persistent problem of context-induced latency in LLM serving systems. By grounding KV cache compression and streaming in empirical observations about KV cache values, it shows the value of targeted engineering that accounts for both network dynamics and computational efficiency.