Overview of SEED-Story: Multimodal Long Story Generation with LLMs

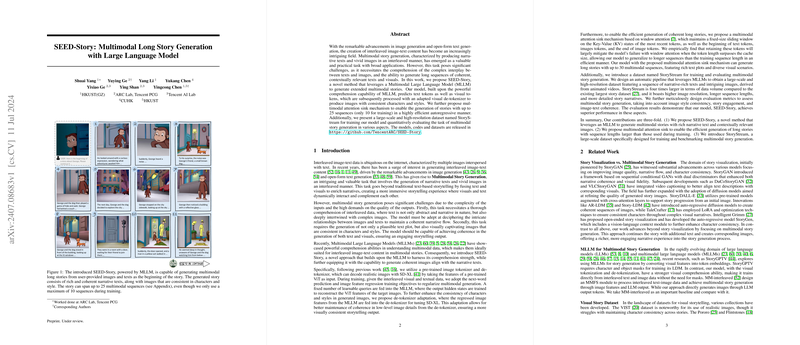

The paper "SEED-Story: Multimodal Long Story Generation with Large Language Model" presents a method for generating long multimodal stories that interleave narrative text with images. The approach uses a Multimodal LLM (MLLM) to generate both modalities, building on recent progress in image generation and open-form text generation. Its main contributions include a multimodal attention sink mechanism, which enables efficient generation of long sequences, and StoryStream, a large-scale dataset built to train the model.

Key Contributions

- MLLM-Based Story Generation: SEED-Story uses a Multimodal LLM to predict both text tokens and visual tokens. This lets the model generate coherent narratives that interleave text and images while keeping characters and style consistent across the sequence.

- Visual Tokenization and De-tokenization: A pre-trained Vision Transformer (ViT) serves as the visual tokenizer and a diffusion model as the de-tokenizer. Together they convert images into and out of a tokenized form, enabling high-quality image generation that stays consistent with the narrative context.

- Efficient Long Story Generation: The paper introduces a multimodal attention sink mechanism. It addresses a limitation of plain sliding-window attention by always preserving critical tokens (e.g., the beginning-of-image and end-of-image tokens), so the model maintains narrative coherence over longer story sequences than is possible with standard attention.

- StoryStream Dataset: To support the training and evaluation of SEED-Story, the authors built the StoryStream dataset. It is larger in scale and higher in resolution than previous story datasets, which lets it support more complex and engaging storylines.
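
The multimodal attention sink described in the list above can be illustrated with a small cache-eviction sketch. This is a minimal sketch under stated assumptions, not the authors' implementation: the token strings `<s>`, `<img>`, and `</img>`, the function name `select_kept_positions`, and the parameters `window` and `num_text_sinks` are all hypothetical. It also assumes that every image-boundary marker is retained; this summary does not say whether the paper keeps all of them or only the earliest ones.

```python
from typing import List

# Hypothetical special-token names, used only for this illustration.
BOS, BOI, EOI = "<s>", "<img>", "</img>"


def select_kept_positions(
    tokens: List[str], window: int, num_text_sinks: int = 1
) -> List[int]:
    """Return the positions whose key/value entries stay in the cache.

    Keeps three groups of positions:
      (a) the first `num_text_sinks` tokens (the usual attention sinks),
      (b) every begin-of-image / end-of-image marker (assumed multimodal sinks),
      (c) the most recent `window` tokens (the sliding window).
    All other positions would be evicted.
    """
    n = len(tokens)
    keep = set(range(min(num_text_sinks, n)))                        # (a)
    keep.update(i for i, t in enumerate(tokens) if t in (BOI, EOI))  # (b)
    keep.update(range(max(0, n - window), n))                        # (c)
    return sorted(keep)


# Toy interleaved story: text, one image (two visual tokens), more text.
story = [BOS, "Once", "upon", BOI, "v0", "v1", EOI,
         "a", "time", "there", "was", "fox"]

kept = select_kept_positions(story, window=3, num_text_sinks=1)
print(kept)  # [0, 3, 6, 9, 10, 11]
```

In this toy case the cache holds 6 of 12 positions. A plain sliding window of the same size would keep only positions 9 to 11 and would drop both the initial token and the image boundaries, while dense attention would keep all 12.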

Results

The evaluation shows that SEED-Story outperforms baseline models such as MM-Interleaved at generating long, coherent multimodal narratives. Quantitative metrics such as FID, along with user studies, indicate higher image quality, text-image coherence, and story engagement. The multimodal attention sink mechanism also substantially improves long-sequence generation while requiring less computation than dense attention.
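
For reference, FID (Fréchet Inception Distance), the image-quality metric mentioned above, compares Gaussian fits to the Inception features of real and generated images. Lower values indicate that generated images are statistically closer to real ones. The symbols below are chosen for this summary rather than taken from the paper: $\mu_r, \Sigma_r$ are the feature mean and covariance for real images, and $\mu_g, \Sigma_g$ are the same statistics for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$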

Implications and Future Directions

Practically, SEED-Story's approach could benefit applications such as educational content creation and digital storytelling, which depend on generating consistent, rich multimodal content. Theoretically, combining MLLMs with visual tokenization and attention-sink mechanisms offers a framework for further research into multimodal understanding and generation.

Future work might explore expanding the diversity of training data, including more realistic scenarios beyond animation-based datasets, to improve the model's applicability across different domains. Furthermore, there is potential to refine the de-tokenization process for even more finely detailed image generation, fostering advancements in character and style consistency across varying contexts.

In conclusion, SEED-Story represents a significant step forward in the multimodal story generation landscape, setting the stage for future innovations in AI-driven narrative experiences.