SEED-Bench-2: A Comprehensive Benchmark for Multimodal LLMs

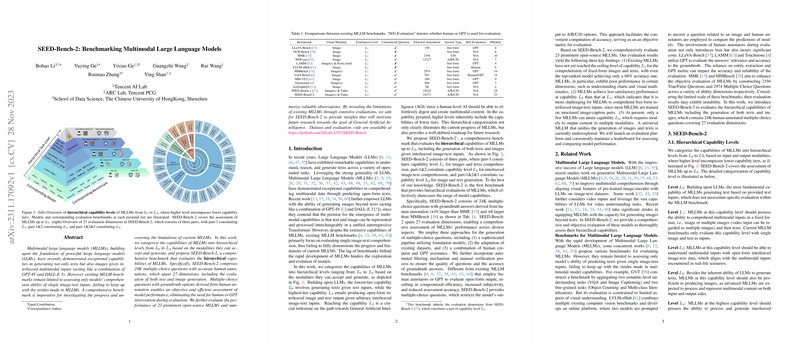

As Multimodal LLMs (MLLMs) continue to advance, understanding and evaluating their capabilities remains a critical area of exploration. The paper "SEED-Bench-2: Benchmarking Multimodal LLMs" presents a well-structured framework for systematically assessing these capabilities. It categorizes MLLM capabilities into hierarchical levels from L0 to L4 and proposes SEED-Bench-2, a comprehensive benchmark that evaluates these hierarchical capabilities up to L3.

Hierarchical Capability Levels and Evaluation Dimensions

The authors introduce a thoughtful categorization of MLLM capabilities into hierarchical levels, defined by the modalities a model can accept and generate. At the foundational level, L0, models generate text from text inputs, which corresponds to the inherent capabilities of LLMs. The subsequent levels (L1 to L4) demand progressively more complex interaction with multimodal content, culminating in L4, where models are expected to both process and produce interleaved image-text content in an open-form format. SEED-Bench-2 focuses on levels L1, L2, and L3, which reflect the current frontier of model capabilities. Beyond charting current progress, this hierarchy also provides a roadmap for future research.
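The hierarchy can be written down as a small lookup table keyed by what each level accepts and produces. The sketch below is a hypothetical Python encoding: it uses the L0 to L4 labels from the SEED-Bench-2 paper, but the class name `CapabilityLevel`, the `EVALUATED` set, and the modality strings are illustrative paraphrases, not the paper's own notation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapabilityLevel:
    name: str
    accepts: str   # input modalities (paraphrased, illustrative)
    produces: str  # output modalities (paraphrased, illustrative)


# Hypothetical encoding of the L0-L4 hierarchy; descriptions are paraphrases.
LEVELS = [
    CapabilityLevel("L0", "text", "text"),
    CapabilityLevel("L1", "fixed-format multimodal input", "text"),
    CapabilityLevel("L2", "open-form interleaved image-text", "text"),
    CapabilityLevel("L3", "open-form interleaved image-text", "images and text"),
    CapabilityLevel("L4", "open-form interleaved image-text",
                    "open-form interleaved image-text"),
]

# Levels covered by SEED-Bench-2.
EVALUATED = {"L1", "L2", "L3"}


def evaluated_levels(levels):
    """Return the names of the benchmarked levels, preserving hierarchy order."""
    return [lvl.name for lvl in levels if lvl.name in EVALUATED]


for lvl in LEVELS:
    mark = "*" if lvl.name in EVALUATED else " "
    print(f"{mark} {lvl.name}: {lvl.accepts} -> {lvl.produces}")
```

Keeping the levels as ordered data makes it easy to see that the benchmark covers a contiguous middle slice of the hierarchy, leaving L0 (pure text) and L4 (open-form interleaved generation) out of scope.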

The benchmark comprises 24,000 multiple-choice questions spanning 27 evaluation dimensions, each designed to assess a specific aspect of MLLM capability. For instance, some dimensions require models to comprehend fixed-format multimodal inputs (L1), others to interpret interleaved image-text inputs (L2), and others to generate images in addition to text (L3). Notably, the benchmark goes beyond comprehension of a single image-text pair, capturing the complexity and variety of real-world interactions.
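Because every question belongs to one evaluation dimension, scores are naturally reported per dimension as well as overall. The sketch below shows one way to aggregate multiple-choice predictions; the function name `accuracy_by_dimension` is hypothetical, the overall figure is question-weighted (an assumption, since the exact averaging scheme is not described here), and the toy records are made up for illustration rather than taken from SEED-Bench-2.

```python
from collections import defaultdict


def accuracy_by_dimension(records):
    """Compute per-dimension and overall accuracy.

    records: iterable of (dimension, predicted_option, correct_option) tuples.
    Overall accuracy is question-weighted (an assumption).
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for dim, pred, gold in records:
        totals[dim] += 1
        hits[dim] += int(pred == gold)
    if not totals:
        return {}, 0.0
    per_dim = {dim: hits[dim] / totals[dim] for dim in totals}
    overall = sum(hits.values()) / sum(totals.values())
    return per_dim, overall


# Made-up predictions for illustration only; not SEED-Bench-2 results.
toy = [
    ("Chart Understanding", "A", "A"),
    ("Chart Understanding", "C", "B"),
    ("Visual Mathematics", "D", "D"),
    ("Visual Mathematics", "B", "A"),
    ("Visual Mathematics", "A", "A"),
]
per_dim, overall = accuracy_by_dimension(toy)
print(per_dim)   # {'Chart Understanding': 0.5, 'Visual Mathematics': 0.6666666666666666}
print(overall)   # 0.6
```

A question-weighted average lets large dimensions dominate; averaging the per-dimension scores instead would weight every dimension equally, which is a design choice any benchmark report has to make explicit.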

Construction and Evaluation Methodology

The data and evaluation questions were constructed through a combination of automatic pipelines and manually curated datasets. The automatic pipeline uses foundation models such as BLIP2, Tag2Text, and SAM to extract detailed visual information from images, which is then used to generate multiple-choice questions automatically. Human annotators then refine the resulting questions to ensure accuracy and relevance. Pairing automated generation with human verification lets the benchmark scale to tens of thousands of questions while keeping the answers reliable, and the multiple-choice format makes assessing current MLLMs objective and efficient.
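To make the idea of turning extracted visual information into a question concrete, here is a deliberately simplified sketch. It is not the authors' generation method: it is a hypothetical, template-based stand-in in which a hand-written list of object tags plays the role of detector output, and a counting question with nearby-number distractors is built from it. The function `make_counting_question` and its parameters are illustrative names.

```python
import random
import string


def make_counting_question(object_tags, target, num_options=4, seed=0):
    """Build one multiple-choice counting question from per-instance tags.

    object_tags: one tag per detected instance (a hypothetical stand-in for
    the output of tagging/segmentation models). num_options must be <= 4 so
    that enough distinct non-negative distractors always exist.
    """
    if not 2 <= num_options <= 4:
        raise ValueError("num_options must be between 2 and 4")
    rng = random.Random(seed)  # seeded so the question is reproducible
    answer = object_tags.count(target)
    # Distractors: nearby non-negative counts that differ from the answer.
    candidates = [n for n in range(max(0, answer - 3), answer + 4) if n != answer]
    options = rng.sample(candidates, num_options - 1) + [answer]
    rng.shuffle(options)
    labels = string.ascii_uppercase[:num_options]
    return {
        "question": f"How many instances of '{target}' appear in the image?",
        "options": {lab: str(n) for lab, n in zip(labels, options)},
        "answer": labels[options.index(answer)],
    }


tags = ["dog", "dog", "cat", "person"]  # toy detector output
q = make_counting_question(tags, "dog")
print(q["question"])
for label, text in q["options"].items():
    print(f"  {label}. {text}")
print("answer:", q["answer"])
```

Templates like this only cover simple question types; the human refinement step described above is what catches ambiguous images or wrong automatic labels.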

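To evaluate MLLMs, the paper adopts an answer ranking strategy: for each candidate choice, it computes the likelihood that the model generates that choice given the question, and takes the choice with the highest likelihood as the model's prediction. This yields a quantitative metric that does not depend on a model's ability to follow instructions and output an option letter, and it avoids the subjective biases inherent in human evaluation.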
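The ranking rule itself is simple: score every choice by its log-likelihood under the model and take the argmax. The sketch below assumes a caller-supplied `log_likelihood(question, choice)` function returning log P(choice | question); in practice that would come from summing a real model's token log-probabilities. Here a toy word-probability table (made up, and ignoring the question) stands in for the model so the example runs on its own.

```python
import math
from typing import Callable, Sequence


def rank_answers(question: str,
                 choices: Sequence[str],
                 log_likelihood: Callable[[str, str], float]) -> int:
    """Return the index of the choice the model is most likely to generate.

    log_likelihood(question, choice) should return log P(choice | question),
    e.g. the sum of the token log-probabilities of `choice`.
    Ties resolve to the earliest choice.
    """
    scores = [log_likelihood(question, c) for c in choices]
    return max(range(len(scores)), key=lambda i: scores[i])


# Toy stand-in for an MLLM: per-word probabilities, made up for illustration.
TOY_WORD_PROBS = {"a": 0.2, "red": 0.3, "car": 0.4, "blue": 0.05, "bus": 0.1}


def toy_log_likelihood(question: str, choice: str) -> float:
    # Sum of per-word log-probabilities; unknown words get a small floor.
    # The question is ignored here purely to keep the toy simple.
    return sum(math.log(TOY_WORD_PROBS.get(w, 1e-3)) for w in choice.lower().split())


choices = ["A red car", "A blue bus", "A green truck"]
best = rank_answers("What is parked on the street?", choices, toy_log_likelihood)
print(choices[best])  # A red car
```

Summed log-likelihoods penalize longer choices, so some evaluation harnesses normalize by length; whether and how SEED-Bench-2 does so is not stated in this summary.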

Results and Observations

The evaluation results reveal several important insights into the current state of open-source MLLMs. First, existing models have not fully realized their potential even at capability level L1: the leading models achieve only around 60% accuracy. This leaves considerable room for improvement, especially on complex reasoning tasks such as chart understanding and visual mathematics, where all models performed poorly.

The results also highlight the difficulty of comprehending interleaved image-text data: performance on the Part-2 (L2) dimensions is generally worse than on the fixed-format (L1) evaluations. This suggests a gap between the structure of the data these models are trained on and the demands of the evaluation. Furthermore, only a few models currently support generating images in addition to text (L3), pointing to the need for further research into systems that integrate text and image output.

Implications and Future Directions

SEED-Bench-2's comprehensive framework has significant implications for advancing multimodal AI. By providing a structured evaluation across a spectrum of capabilities, the benchmark helps pinpoint the specific areas where current models fall short and innovation is needed. It is well positioned to serve as a cornerstone for both academic research and practical applications seeking a deeper understanding of MLLMs and ways to improve them.

As research moves toward Artificial General Intelligence (AGI), frameworks like SEED-Bench-2 are likely to be pivotal. Continually refining and expanding such benchmarks to cover emerging modalities and evaluation metrics will be vital to keep pace with the evolving landscape of AI capabilities. This paper takes a foundational step toward robust, versatile MLLMs capable of understanding and generating complex multimodal content in varied contexts.