Analyzing Speculative RAG: Effective Integration of LLMs and External Knowledge Sources in Retrieval Augmented Generation

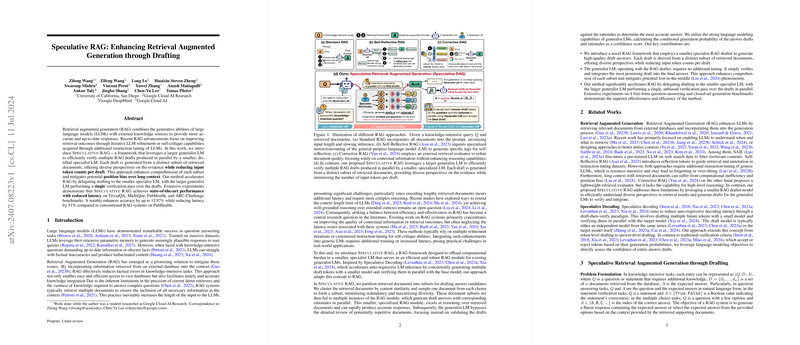

The paper "Speculative RAG: Enhancing Retrieval Augmented Generation through Drafting" introduces a novel approach to Retrieval Augmented Generation (RAG), which combines the generative capabilities of large language models (LLMs) with external information retrieval to produce accurate and up-to-date responses. The work aims to improve both the efficiency and the effectiveness of RAG systems through a dual-model framework: a smaller specialist language model (LM) for drafting and a larger generalist LM for verification.

Core Contributions

The core contributions of this paper are threefold:

- Speculative RAG Framework: The proposed Speculative RAG framework uses a two-tier model system in which a smaller, specialist LM (the RAG drafter) generates multiple drafts in parallel, each derived from a distinct subset of the retrieved documents. A larger, generalist LM (the RAG verifier) then verifies these drafts in a single pass. This division of labor speeds up processing and ensures that the diverse perspectives offered by the retrieved documents are all evaluated.

- Enhanced Drafting and Verification Mechanism: Through instruction tuning, the smaller specialist LM is trained to generate high-quality drafts from the retrieved documents, producing not just answers but also supporting rationales. Because each draft sees only a subset of the documents, this helps mitigate the redundancy and position-bias problems that arise when many retrieved documents are packed into one long context. The larger generalist LM requires no additional tuning and focuses on verifying the drafts based on their rationales, leveraging its general language modeling capabilities.

- Empirical Validation: The paper offers comprehensive experimental validation across multiple benchmarks: TriviaQA, MuSiQue, PubHealth, and ARC-Challenge. In these tests, Speculative RAG demonstrated significant performance improvements, achieving up to 12.97% higher accuracy and reducing latency by 51% compared to traditional RAG systems.
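
The draft-then-verify flow described in the first two contributions can be sketched as follows. This is a minimal illustration, not the paper's implementation: the `Draft` type, the `drafter` and `verifier` callables, the round-robin `partition` helper, and the toy stand-ins at the bottom are all hypothetical names introduced here, and the way the paper actually forms document subsets and scores drafts may differ.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Draft:
    answer: str
    rationale: str


# Hypothetical interfaces (assumptions, not the paper's API):
# a drafter maps (question, document subset) to a Draft,
# a verifier maps (question, draft) to a confidence score.
Drafter = Callable[[str, Sequence[str]], Draft]
Verifier = Callable[[str, Draft], float]


def partition(docs: Sequence[str], num_subsets: int) -> List[List[str]]:
    """Round-robin split into distinct subsets (an illustrative stand-in)."""
    subsets = [list(docs[i::num_subsets]) for i in range(num_subsets)]
    return [s for s in subsets if s]  # drop empty subsets


def speculative_rag(
    question: str,
    docs: Sequence[str],
    drafter: Drafter,
    verifier: Verifier,
    num_subsets: int = 3,
) -> Draft:
    subsets = partition(docs, num_subsets)
    if not subsets:
        raise ValueError("no retrieved documents to draft from")
    # Small specialist model: one draft per subset, produced in parallel.
    with ThreadPoolExecutor() as pool:
        drafts = list(pool.map(lambda s: drafter(question, s), subsets))
    # Large generalist model: score each draft once, keep the best one.
    scores = [verifier(question, d) for d in drafts]
    best = max(range(len(drafts)), key=scores.__getitem__)
    return drafts[best]


# Toy stand-ins so the sketch runs without any model.
def toy_drafter(question: str, docs: Sequence[str]) -> Draft:
    return Draft(answer=docs[0].split(":")[0], rationale=" ".join(docs))


def toy_verifier(question: str, draft: Draft) -> float:
    # Fraction of question words that also appear in the rationale.
    q = set(question.lower().split())
    r = set(draft.rationale.lower().split())
    return len(q & r) / max(len(q), 1)
```

In a real system the two callables would wrap model inference calls; the point of the sketch is only the shape of the pipeline, where the expensive model never has to read all retrieved documents at once.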

Key Numerical Results and Comparisons

The main results show that Speculative RAG outperformed baseline RAG methods, Self-Reflective RAG, and Corrective RAG on all evaluated benchmarks. For instance, on the PubHealth dataset, Speculative RAG achieved an accuracy of 76.60%, well above the best-performing baseline at 63.63%. The reduction in latency was also notable, with up to 51.25% less processing time on the same dataset.
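
As a quick check on how these figures relate, the short calculation below uses only the numbers quoted above. It suggests that the headline "up to 12.97%" gain is the PubHealth gap in percentage points rather than a relative improvement, and that a 51.25% latency reduction corresponds to roughly a 2.05x speedup. The variable and function names are illustrative.

```python
speculative_acc = 76.60    # PubHealth accuracy for Speculative RAG (%)
best_baseline_acc = 63.63  # best-performing baseline on PubHealth (%)

# Absolute gap in percentage points.
gain_points = round(speculative_acc - best_baseline_acc, 2)

# Relative improvement over the baseline, for comparison.
relative_gain_pct = 100.0 * (speculative_acc - best_baseline_acc) / best_baseline_acc


def speedup_from_reduction(reduction_pct: float) -> float:
    """Convert a latency reduction (%) into a speedup factor."""
    remaining_fraction = 1.0 - reduction_pct / 100.0
    return 1.0 / remaining_fraction


speedup = speedup_from_reduction(51.25)

print(f"gain: {gain_points} points, relative: {relative_gain_pct:.1f}%")
print(f"speedup: {speedup:.2f}x")
```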

Theoretical and Practical Implications

The Speculative RAG framework illustrates a substantial step toward improving the efficiency of generating responses that require integrating external knowledge. The division of labor between a specialist LM and a generalist LM can significantly reduce computational overhead, making the process more viable for real-world applications where latency is a critical factor.

The results also carry a broader lesson: combining the complementary strengths of different LMs (a smaller one for detailed, contextually grounded generation and a larger one for robust verification) can yield better performance than using a monolithic model alone. This approach could potentially extend to other domains where similar task decomposition yields efficiency gains without sacrificing accuracy.

Future Prospects in AI

Looking forward, the Speculative RAG framework opens several avenues for further research:

- Model Specialization: Further specialization of drafting models for different types of knowledge-intensive tasks (e.g., fact-checking, multi-hop reasoning) could yield even better results.

- Adaptive Verification Mechanisms: Developing more sophisticated verification mechanisms that can dynamically adjust based on the complexity and reliability of the drafts could further streamline the process.

- Integration with Other Models: Exploration of how this speculative approach can be integrated with other types of LLM tasks, such as code generation or machine translation, where external knowledge is crucial.

- Resource Efficiency: Investigating the balance between computational resources and model performance to optimize the deployment of these models in real-world settings.

Conclusion

The Speculative RAG framework presents a compelling method for combining the strengths of small and large language models to enhance retrieval augmented generation. By distributing the drafting and verification tasks between them, the method achieves notable improvements in both accuracy and efficiency. The implications for future research and application in AI are promising, suggesting new strategies for using diverse model capabilities in concert.