Autonomous agents powered by language models (LMs) show promise for automating computer tasks, particularly on the web. However, current LM agents often struggle with multi-step reasoning, planning, and making effective use of environmental feedback, all of which are crucial for navigating complex, open-ended web environments. The paper "Tree Search for Language Model Agents" (Koh et al., 1 Jul 2024) addresses this by proposing an inference-time search algorithm that lets LM agents explicitly explore and plan within the actual interactive web environment.

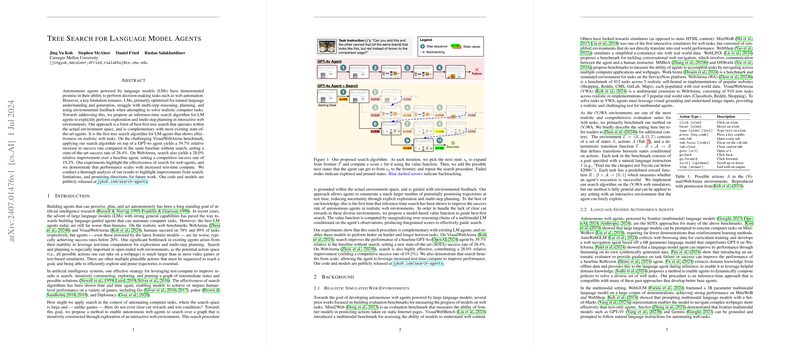

The core idea is to integrate a best-first tree search algorithm with a language model agent. Unlike traditional game AI, where search spaces are well-defined and rewards are clear, web environments are vast, complex, and lack explicit reward signals at every step. The proposed method performs search directly in the environment state space, guided by a value function implemented by prompting an LM.

The system consists of three main components:

- Agent Backbone: A standard (multimodal) language model prompted to output actions given an observation. The paper uses GPT-4o + Set-of-Marks (SoM), caption-augmented Llama-3-70B-Instruct, and text-only GPT-4o as baseline agents (Koh et al., 1 Jul 2024). The agent's ability to sample diverse actions (e.g., via nucleus sampling) is leveraged to generate multiple branches in the search tree.

- Value Function: A crucial component implemented by prompting a separate multimodal LM (GPT-4o) to estimate the likelihood that a state is a goal state (Koh et al., 1 Jul 2024). It takes as input the natural language task instruction, a sequence of recent observations (screenshots), the previous actions, and the current URL. To obtain a more robust score, the value function samples multiple reasoning paths using Chain-of-Thought prompting and averages their scores (0 for failure, 0.5 for on-track, 1 for success). The averaged score is a finer-grained heuristic than any single verdict and is what guides the best-first search.

- Search Algorithm: A best-first search that maintains a frontier (priority queue) of states to explore, prioritized by their estimated value. Starting from the current state, the algorithm iteratively:

  - Pops the state with the highest value from the frontier.

  - Evaluates the popped state using the value function.

  - Terminates if the state's value exceeds a threshold or the search budget is reached; the agent then commits to the sequence of actions leading to the best state found.

  - Otherwise, prompts the agent backbone to generate candidate next actions for the current state.

  - Executes each candidate action in the environment to reach a new state. These new states are added to the frontier, typically prioritized by the value of their parent state (as immediate evaluation of children is expensive in this setup).

  Backtracking to explore a different branch involves resetting the environment and re-executing the action sequence that leads to the desired state in the search tree.
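The score averaging used by the value function can be illustrated with a minimal Python sketch. The function `estimate_value`, the `sample_verdict` callable (a stand-in for one Chain-of-Thought call to the multimodal LM), the verdict labels, and the default of 5 samples are all assumptions made for illustration; only the 0 / 0.5 / 1 scoring and the averaging come from the description above.

```python
from statistics import mean
from typing import Callable, Sequence

# Score mapping described in the text: failure, on-track, success.
# The label strings themselves are hypothetical.
VERDICT_SCORES = {"failure": 0.0, "on_track": 0.5, "success": 1.0}


def estimate_value(sample_verdict: Callable[[], str], num_samples: int = 5) -> float:
    """Average the scores of several independently sampled verdicts.

    `sample_verdict` is a hypothetical stand-in for one chain-of-thought
    call to the value-function LM; it must return a key of VERDICT_SCORES.
    The default of 5 samples is an assumption, not a value from the paper.
    """
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")
    scores = [VERDICT_SCORES[sample_verdict()] for _ in range(num_samples)]
    return mean(scores)


def scripted_sampler(verdicts: Sequence[str]) -> Callable[[], str]:
    """Return a sampler that replays fixed verdicts (demonstration only)."""
    iterator = iter(verdicts)
    return lambda: next(iterator)


# Four sampled reasoning paths that disagree with each other.
value = estimate_value(
    scripted_sampler(["success", "on_track", "on_track", "failure"]),
    num_samples=4,
)
print(value)  # mean of 1.0, 0.5, 0.5, 0.0
```

Averaging turns three coarse labels into a score with more distinct levels, which gives the priority queue something to discriminate on when several states look roughly on track.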

The search is controlled by three parameters: a maximum depth, a branching factor, and a search budget, counted in node expansions per step of the agent's execution. The agent is also capped at a maximum of 5 actions in total for the main experiments, which shows that search helps even under a strict action limit.
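The loop and its three parameters can be sketched in Python on a toy problem. This is a minimal sketch, not the paper's implementation: the function name, the callables `reset`, `step`, `propose`, and `value_fn`, and the integer toy environment are all hypothetical, and the parameter values passed at the bottom are arbitrary. One simplification is made explicit in the comments: the frontier stores action sequences and executes them only when a node is popped, which folds the reset-and-replay backtracking into every expansion.

```python
import heapq
from itertools import count
from typing import Callable, List, Sequence, Tuple

Action = str


def best_first_search(
    reset: Callable[[], int],
    step: Callable[[int, Action], int],
    propose: Callable[[int, int], Sequence[Action]],
    value_fn: Callable[[int], float],
    max_depth: int,
    branching: int,
    budget: int,
    threshold: float,
) -> Tuple[List[Action], float]:
    """Return (actions, value) for the best-scoring state found."""

    def replay(actions: Sequence[Action]) -> int:
        # Backtracking: reset the environment, then re-execute the prefix.
        state = reset()
        for action in actions:
            state = step(state, action)
        return state

    tie = count()  # tie-breaker so heapq never has to compare action lists
    # heapq is a min-heap, so priorities are stored as negated values.
    frontier: List[Tuple[float, int, List[Action]]] = [(0.0, next(tie), [])]
    best_actions: List[Action] = []
    best_value = float("-inf")
    expansions = 0
    while frontier and expansions < budget:
        _, _, actions = heapq.heappop(frontier)
        state = replay(actions)  # simplification: execute on pop, not on push
        value = value_fn(state)
        expansions += 1
        if value > best_value:
            best_actions, best_value = actions, value
        if value >= threshold:
            break
        if len(actions) < max_depth:
            for action in propose(state, branching):
                # Children are queued with their parent's value as priority.
                heapq.heappush(frontier, (-value, next(tie), actions + [action]))
    return best_actions, best_value


# Toy environment (hypothetical): reach TARGET from 1 using "+1" and "*2".
TARGET = 10


def toy_reset() -> int:
    return 1


def toy_step(state: int, action: Action) -> int:
    return state + 1 if action == "+1" else state * 2


def toy_propose(state: int, k: int) -> Sequence[Action]:
    return ["+1", "*2"][:k]


def toy_value(state: int) -> float:
    return 1.0 if state == TARGET else max(0.0, 1 - abs(TARGET - state) / TARGET)


plan, plan_value = best_first_search(
    toy_reset, toy_step, toy_propose, toy_value,
    max_depth=5, branching=2, budget=20, threshold=1.0,  # arbitrary toy values
)
print(plan, plan_value)
```

In this toy run the greedy branch of repeated `+1` actions hits the depth limit before reaching the target, so the search falls back to a sibling branch that was queued earlier, which is the backtracking behaviour the text describes.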

The effectiveness of the approach is demonstrated on the challenging VisualWebArena (VWA) and WebArena (WA) benchmarks (Koh et al., 1 Jul 2024).

- On VWA, applying search to a GPT-4o + SoM agent improved the success rate from 18.9% to 26.4%, a 39.7% relative increase, setting a new state of the art.

- On WA, search applied to a GPT-4o agent increased the success rate from 15.0% to 19.2%, a 28.0% relative improvement.

- Search also substantially boosted the performance of a Llama-3-70B-Instruct agent on VWA, from 7.6% to 16.7% (a 119.7% relative increase), highlighting its complementarity with different base agents.
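The relative improvements quoted above follow from the absolute success rates. A short Python check, using only the numbers reported in the list (the function name and dictionary keys are illustrative):

```python
def relative_gain(baseline: float, with_search: float) -> float:
    """Relative improvement of `with_search` over `baseline`, in percent."""
    return (with_search - baseline) / baseline * 100


# (baseline success rate, success rate with search), in percent.
results = {
    "VWA, GPT-4o + SoM": (18.9, 26.4),
    "WA, GPT-4o": (15.0, 19.2),
    "VWA, Llama-3-70B-Instruct": (7.6, 16.7),
}

gains = {name: relative_gain(b, s) for name, (b, s) in results.items()}
for name, gain in gains.items():
    print(f"{name}: +{gain:.1f}% relative")
```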

Analysis shows that performance scales with increased search budget (Fig 2), depth, and branching factor (Table 2), indicating that more compute for search yields better results. Compared to a simple trajectory-level reranking baseline (sampling 3 full trajectories and picking the best), the proposed tree search performed significantly better, validating the benefits of exploring partial trajectories and backtracking.
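For contrast, the trajectory-level reranking baseline can be sketched as follows. This is an illustrative sketch under stated assumptions: `rerank`, `sample_trajectory`, `score`, and the scripted trajectories and scores are hypothetical, and the only detail taken from the text is that 3 full trajectories are sampled and the best one is kept.

```python
from typing import Callable, List, Tuple

# A trajectory is (actions taken, description of the final state).
Trajectory = Tuple[List[str], str]


def rerank(
    sample_trajectory: Callable[[], Trajectory],
    score: Callable[[str], float],
    num_trajectories: int = 3,
) -> Trajectory:
    """Sample complete trajectories and keep the one whose final state scores best."""
    candidates = [sample_trajectory() for _ in range(num_trajectories)]
    return max(candidates, key=lambda trajectory: score(trajectory[1]))


# Scripted stand-ins for three full agent rollouts (demonstration only).
scripted = iter([
    (["click [Search]", "type shoes"], "search results"),
    (["click [Search]", "type shoes", "click [item 3]"], "product page"),
    (["click [Help]"], "error page"),
])
toy_scores = {"search results": 0.5, "product page": 1.0, "error page": 0.0}

best = rerank(lambda: next(scripted), toy_scores.__getitem__)
print(best)
```

Each rollout here is scored only once, at its end, so a bad early action cannot be revisited. Tree search instead scores intermediate states and can abandon a branch partway through.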

Breakdowns by task difficulty (Table 3) reveal that search provides the largest relative gains on medium-difficulty tasks (4-9 actions), likely because the search depth aligns well with the planning horizon needed for these tasks. Improvements were also observed across various websites (Tables 4 and 5), suggesting generality.

Qualitative examples illustrate how search enhances agents:

- Improved Multi-step Planning: Search helps agents avoid common failure modes like getting stuck in loops or undoing previous progress by exploring alternative paths and committing to trajectories that lead to success.

- Resolving Uncertainty: By executing sampled actions in the environment and using the value function to evaluate resulting states, the agent can filter out less promising actions and commit to better branches, even if the initially sampled action wasn't optimal.

Despite its effectiveness, the method has practical limitations:

- Computational Cost: Search significantly increases inference time and cost due to the need for multiple LM calls and environment interactions per search iteration. Efficiency improvements in LM inference and environmental interaction are key for practical deployment.

- Backtracking Overhead: The current implementation handles backtracking by resetting the environment and re-executing a sequence of actions, which can be slow if environment state transitions are expensive.

- Destructive Actions: In real-world web automation, certain actions (like making a purchase or deleting data) are irreversible. Safe deployment requires mechanisms to prevent the exploration or execution of such destructive actions. This could involve action classifiers or manual constraints, which can be integrated more easily into a search process compared to simpler reactive agents or trajectory reranking methods.
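One way to picture the manual constraints mentioned in the last point is a filter applied to candidate actions before the search executes them. The sketch below is purely illustrative: the keyword list, the function names, and the action string format are assumptions, and a real deployment would likely need something more robust than substring matching, such as a learned action classifier.

```python
from typing import Iterable, List

# Hypothetical block list; these keywords are not taken from the paper.
BLOCKED_KEYWORDS = ("purchase", "place order", "delete", "remove account")


def is_potentially_destructive(action_description: str) -> bool:
    """Flag an action whose description mentions a blocked keyword."""
    text = action_description.lower()
    return any(keyword in text for keyword in BLOCKED_KEYWORDS)


def filter_candidates(actions: Iterable[str]) -> List[str]:
    """Drop flagged actions so the search never executes them while exploring."""
    return [a for a in actions if not is_potentially_destructive(a)]


candidates = [
    "click [Add to cart]",
    "click [Place order]",
    "type [search] shoes",
    "click [Delete review]",
]
safe = filter_candidates(candidates)
print(safe)
```

Because the search already enumerates candidate actions before executing them, a filter like this has a natural place to sit: between action proposal and environment execution.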

In conclusion, the paper demonstrates that environmentally grounded tree search is a powerful technique for improving the planning and reasoning capabilities of LLM agents in realistic web environments, offering substantial performance gains over non-search baselines. The approach is flexible and can be applied on top of existing agents, paving the way for more capable autonomous agents that can effectively interact with complex digital interfaces.