The Multilingual Alignment Prism: Aligning Global and Local Preferences to Reduce Harm

The paper "The Multilingual Alignment Prism: Aligning Global and Local Preferences to Reduce Harm" addresses a gap in the alignment and safety of AI systems, particularly LLMs, in multilingual contexts. As LLMs are deployed worldwide, they must handle multiple languages and cultural nuances safely. The paper investigates how well various alignment approaches optimize performance across several languages while minimizing both global and local harms.

Key Contributions

- Multilingual Red-Teaming Dataset: The authors present the first human-annotated dataset of harmful prompts across eight languages: English, Hindi, French, Spanish, Russian, Arabic, Serbian, and Filipino. The dataset distinguishes between "global" harms, which are universally recognized, and "local" harms, which are culturally specific. Annotators created roughly 900 prompts per language, categorized the types of harm, and provided translations for analysis.

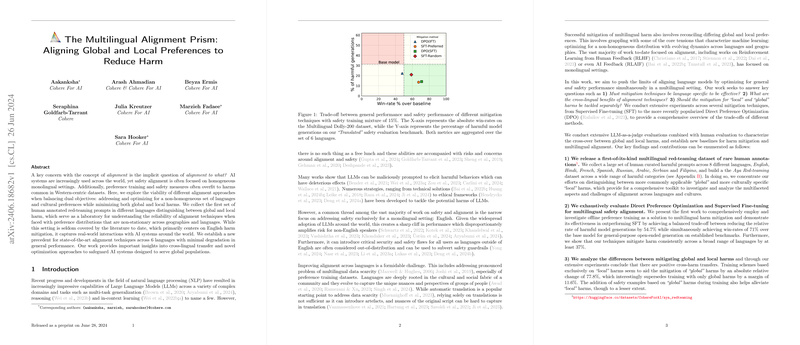

- Evaluation of Alignment Techniques: The paper compares traditional Supervised Fine-Tuning (SFT) with the more recent Direct Preference Optimization (DPO) and shows that both substantially reduce harmful outputs across languages. Notably, DPO applied after an SFT stage, denoted DPO(SFT), struck the best balance between reducing harmful generations and maintaining general performance.

- Cross-Lingual Alignment Challenges: The experiments show that global harms are slightly easier to mitigate than local harms across diverse languages. The paper also finds significant cross-harm transfer: training on examples of local harms improves mitigation of global harms, and vice versa. This suggests that carefully curated training datasets incorporating both harm types can improve the safety of multilingual models.
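
For reference, the DPO objective mentioned above is usually written as follows. This is the standard formulation from the original DPO work, not an equation taken from this paper. The notation is assumed here for illustration: $\pi_\theta$ is the policy being trained, $\pi_{\mathrm{ref}}$ is a frozen reference policy, $\beta$ is a temperature, and $y_w$ and $y_l$ are the preferred and rejected responses to a prompt $x$ drawn from a preference dataset $\mathcal{D}$.

$$
\mathcal{L}_{\mathrm{DPO}}(\pi_\theta; \pi_{\mathrm{ref}}) = -\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}} \left[ \log \sigma\left( \beta \log \frac{\pi_\theta(y_w \mid x)}{\pi_{\mathrm{ref}}(y_w \mid x)} - \beta \log \frac{\pi_\theta(y_l \mid x)}{\pi_{\mathrm{ref}}(y_l \mid x)} \right) \right]
$$

Under this reading, a DPO(SFT) setup would presumably initialize $\pi_\theta$ from the SFT checkpoint and use that checkpoint as $\pi_{\mathrm{ref}}$, with $y_w$ being the harmless response in each safety preference pair. The summary here does not confirm those details.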

Experimental Findings

- Safety Improvements: Compared to the base model, the SFT and DPO(SFT) models reduced harmful generations by 56.6% and 54.7%, respectively. Adding safety training data (both global and local) also proved beneficial, with notable improvements in Hindi and Arabic.

- General Performance: Despite the focus on safety, DPO(SFT) maintained strong general-purpose performance, with a 71% win-rate on the Multilingual Dolly-200 benchmark. This shows that safety and general performance are not necessarily mutually exclusive and can be optimized together with appropriate alignment techniques.

- Cross-Harm Transfer: Training on local harms improved global harm mitigation by 77.8%, which exceeded the improvement from training exclusively on global harms. However, training on combined global and local harms gave the most consistent performance across harm types.
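
The percentages above can be read against simple definitions. The following is a minimal sketch, assuming that the reported reductions are relative to the base model's harmful-generation rate and that win-rate is the share of pairwise comparisons won (with ties counted as non-wins). The summary does not state either convention, and the function names and toy data are hypothetical, not taken from the paper.

```python
from typing import Sequence


def harmful_rate(flags: Sequence[bool]) -> float:
    """Fraction of generations flagged as harmful (True = harmful)."""
    if not flags:
        raise ValueError("flags must be non-empty")
    return sum(flags) / len(flags)


def relative_reduction(base_rate: float, model_rate: float) -> float:
    """Relative reduction in percent: 100 * (base - model) / base."""
    if base_rate <= 0:
        raise ValueError("base_rate must be positive")
    return 100.0 * (base_rate - model_rate) / base_rate


def win_rate(outcomes: Sequence[str]) -> float:
    """Percent of pairwise comparisons won.

    Each outcome is 'win', 'loss', or 'tie'. Ties count as non-wins here,
    which is an assumption; other conventions split or drop ties.
    """
    if not outcomes:
        raise ValueError("outcomes must be non-empty")
    wins = sum(1 for outcome in outcomes if outcome == "win")
    return 100.0 * wins / len(outcomes)


# Toy data for illustration only; these are not results from the paper.
base_flags = [True] * 4 + [False] * 6      # 40% of base generations harmful
aligned_flags = [True] * 1 + [False] * 9   # 10% of aligned generations harmful

reduction = relative_reduction(harmful_rate(base_flags), harmful_rate(aligned_flags))
toy_win_rate = win_rate(["win", "win", "tie", "loss"])
print(f"relative reduction: {reduction:.1f}%")
print(f"win-rate: {toy_win_rate:.1f}%")
```

Under the relative convention, a base model with a low harmful rate can show a large percentage reduction from a small absolute change, so absolute rates are worth reporting alongside it.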

Practical and Theoretical Implications

Practical Implications:

- The creation and release of the multilingual red-teaming dataset provide a valuable resource for the AI research community to further investigate and develop robust alignment techniques.

- The demonstrated effectiveness of DPO(SFT) offers a promising approach for building safer multilingual LLMs that can be deployed in real-world applications without compromising general performance.

Theoretical Implications:

- The cross-lingual generalization observed in harm reduction indicates that LLMs can learn universal principles of non-harmful content generation, which transfer across languages.

- The findings suggest that future research should explore more nuanced and culturally specific training datasets to fully capture the diversity of global linguistic contexts.

Future Directions

Future research could further explore:

- Scalability: Extending these alignment methods to cover more languages, especially low-resource languages, to ensure broader applicability and inclusivity.

- Dynamic Harm Databases: Developing adaptive datasets that can evolve to accommodate new types of harms as they emerge, considering the dynamic nature of harmful content.

- Human-in-the-loop Systems: Integrating continuous human feedback to refine and update alignment models, ensuring they remain effective over time.

The paper makes a robust case for the necessity of multilingual alignment in AI systems, showing that with the right techniques and datasets, it is possible to achieve safe and effective LLMs across diverse linguistic and cultural landscapes.