DeciMamba: Exploring the Length Extrapolation Potential of Mamba

The paper "DeciMamba: Exploring the Length Extrapolation Potential of Mamba" confronts a critical challenge in deep learning, specifically in long-range sequence processing. Conventional Transformers struggle with sequences of increasing length due to their quadratic complexity. The Mamba architecture presents a promising alternative, matching or exceeding Transformer performance while requiring fewer computational resources. However, Mamba exhibits limited length-generalization capabilities. The paper addresses this limitation and proposes DeciMamba, a context-extension mechanism tailored for Mamba.

Background and Problem Statement

Long sequence processing is pivotal for applications such as comprehensive text analysis, high-resolution video, and genomic data. Transformers excel in these tasks but face computational constraints imposed by their quadratic complexity with respect to input length. This limitation motivates the development of alternative architectures, including those with sub-quadratic complexity. Among these, the Mamba architecture, which uses Selective State-Space Layers (S6), emerges as a promising candidate. However, Mamba's performance deteriorates when evaluating sequences longer than those encountered during training, primarily due to a restricted Effective Receptive Field (ERF).

Methodology

The paper introduces DeciMamba, a context-extension method that enables Mamba to generalize to significantly longer sequences without additional computational overhead. DeciMamba leverages a hidden filtering mechanism within the S6 layer, primarily controlled by the selective step , which acts as a gating mechanism to determine the importance of different tokens in a sequence.

- ERF Characterization: The authors establish that Mamba's ERF is limited by the training sequence length, confining the model's length-extrapolation abilities. Visualizations of Mamba's "hidden attention" reveal sparse attention maps when inferring on sequences longer than those seen during training.

- Mamba Mean Distance: To quantify the ERF, the authors introduce the "Mamba Mean Distance," analogous to the attention mean distance in Transformers, measuring how effectively the model can attend to distant tokens in a sequence.

- DeciMamba Mechanism:

- Decimation Strategy: By examining the recurrent rule in S6 layers, they highlight the role of in controlling token importance. Tokens with smaller values contribute less to future representations.

- Decimation Ratio: The model retains only the top tokens with the largest mean values, where varies by layer depth.

- Decimation Scope: Decimation is applied selectively to layers focusing on long-range interactions, determined using the Mamba Mean Distance metric.

Empirical Evaluation

Experiments demonstrate substantial improvements in length-extrapolation across various tasks:

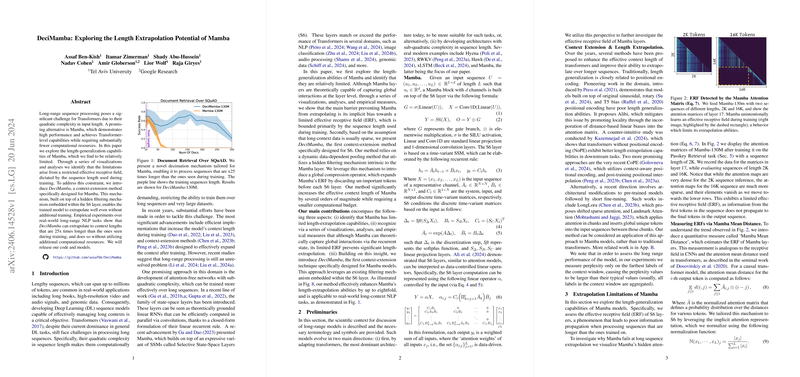

- Document Retrieval: DeciMamba successfully extrapolates to contexts 25 times longer than those used during training, maintaining performance in document retrieval tasks when evaluated on sequences up to 60,000 tokens.

- Passkey Retrieval: DeciMamba-130M exhibits significant improvements, retrieving correct passkeys embedded in sequences of up to 128K tokens, compared to Mamba's limit of 16K tokens.

- LLMing: On the PG-19 dataset, DeciMamba maintains lower perplexity compared to Mamba when evaluated on long sequences. The method's performance is shown to be stable across different sequence lengths, trailing close to a baseline established using models trained on the respective evaluation lengths.

- Multi-Document QA: DeciMamba outperforms Mamba in tasks requiring free-text answers from multiple documents, particularly as the number of documents increases significantly beyond the training setup.

Implications and Future Directions

The theoretical and practical implications of this paper are profound. DeciMamba effectively extends the context length that Mamba can handle, demonstrating that attention-free architectures like Mamba can be exploited for long-range dependencies without the quadratic complexity of Transformers. The introduction of dynamic, data-dependent decimation provides an efficient pathway to handle extended sequences in NLP and other sequence-heavy applications.

Future work may include refining the decimation strategy further, investigating hybrid models that combine both the values and other features such as token norm for filtering, and extending the analysis to other attention-free architectures like RWKV and xLSTM. Additionally, exploring architectural modifications within Mamba itself to inherently support longer sequences could provide more robustness and flexibility.

Conclusion

The paper contributes a significant advancement in the field of sequence modeling, particularly for long-range NLP tasks. DeciMamba offers a novel solution to the inherent limitations of Mamba, opening avenues for efficient long-sequence processing in deep learning. Through careful empirical evaluation and an innovative decimation mechanism, the paper paves the way for practical applications requiring extensive context understanding. This work sets a precedent for future research in optimizing context length capabilities for state-space models and other emerging architectures.