Optimizing RLHF Training for LLMs through Parameter Reallocation

This paper introduces ReaLHF, a system that improves the efficiency of Reinforcement Learning from Human Feedback (RLHF) training for large language models (LLMs). Its core technique, parameter reallocation, dynamically redistributes LLM parameters across a GPU cluster during training, so that the computational workload is balanced and the intricate dependencies of the RLHF workflow are respected.

Context and Motivation

LLMs such as GPT-3 and ChatGPT require extensive hardware resources because of their large parameter counts, which makes parallelization strategies necessary to distribute computation across GPUs. Traditional approaches, including data, tensor-model, and pipeline-model parallelism, are well explored for supervised training, but applying them directly to RLHF performs poorly because RLHF has distinct infrastructure requirements and dependencies among multiple models.

Existing RLHF training systems often suffer from one of two problems: over-parallelization, which incurs synchronization and communication overheads across the GPU cluster, or under-utilization, where dependencies prevent the GPUs from being used fully. The paper argues that parameter reallocation, that is, dynamically adjusting the distribution of LLM parameters across devices during training, addresses these bottlenecks by allowing a tailored parallelization strategy for each type of function call within RLHF.

Methodology

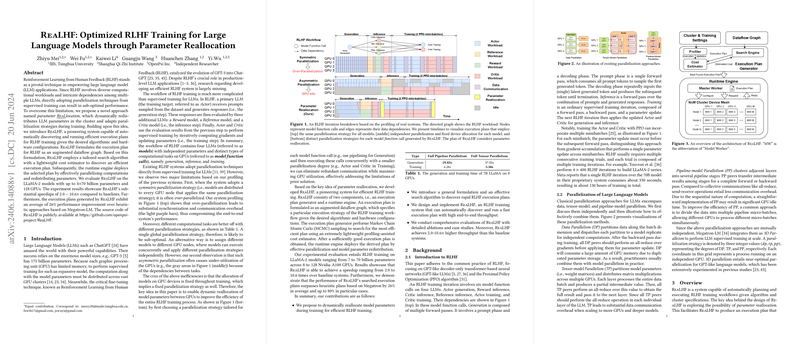

The central innovation of ReaLHF lies in its ability to automatically discover and execute efficient execution plans. It models the RLHF workflow as an augmented dataflow graph, transforming parameter reallocation and LLM execution into a systematic optimization problem.
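To make the dataflow-graph view concrete, the following is a minimal sketch of how an RLHF iteration could be written as a graph of function calls. The six call names and their dependencies are assumptions modeled on a typical PPO-style RLHF workflow; they are not taken from the ReaLHF codebase. The helper `execution_waves` is likewise hypothetical and only groups calls that have no dependency on each other.

```python
from graphlib import TopologicalSorter

# Hypothetical PPO-style RLHF iteration. Each key is a function call and
# each value is the set of calls whose outputs it consumes.
DEPS = {
    "actor_generate": set(),
    "reward_inference": {"actor_generate"},
    "ref_inference": {"actor_generate"},
    "critic_inference": {"actor_generate"},
    "actor_train": {"reward_inference", "ref_inference", "critic_inference"},
    "critic_train": {"reward_inference", "ref_inference", "critic_inference"},
}


def execution_waves(deps):
    """Group calls into waves; calls within one wave do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


for i, wave in enumerate(execution_waves(DEPS)):
    print(i, wave)
```

Calls that land in the same wave are candidates for concurrent execution, which is where assigning each call its own device mesh becomes relevant.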

- Execution Plan Formulation: Each RLHF function call is assigned a device mesh and a 3D parallelization strategy, meaning a combination of data, tensor-model, and pipeline-model parallelism. An execution plan is represented as an augmented dataflow graph in which every computation is mapped to its device mesh and parallel configuration.

- MCMC-Based Search: The exploration of execution plans leverages Markov Chain Monte Carlo (MCMC) sampling to navigate a vast combinatorial space efficiently. This method identifies cost-effective execution plans based on predicted time and memory costs while conforming to device memory constraints.

- Runtime Execution: The chosen execution plan is run by the ReaLHF runtime, which uses a master-worker model to manage the dynamic redistribution of parameters across GPUs, optimize data transfers, and coordinate parallel execution.
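The plan formulation and search steps above can be sketched as a small Metropolis-style sampler. Everything here is an illustrative assumption: the `Assignment` record, the toy cost model in `call_cost`, the workload numbers in `CALLS`, and the 80 GB memory cap are invented for the example, and the real system relies on its own time and memory predictions. The sketch also sums per-call times and therefore ignores overlap between calls.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Assignment:
    n_gpus: int  # size of the device mesh given to one function call
    dp: int      # data-parallel degree
    tp: int      # tensor-model-parallel degree
    pp: int      # pipeline-model-parallel degree


def candidate_assignments(cluster_gpus):
    """Enumerate power-of-two meshes and (dp, tp, pp) with dp * tp * pp == mesh size."""
    out = []
    n = 1
    while n <= cluster_gpus:
        for tp in (1, 2, 4, 8):
            for pp in (1, 2, 4, 8):
                if n % (tp * pp) == 0:
                    out.append(Assignment(n, n // (tp * pp), tp, pp))
        n *= 2
    return out


def call_cost(work, model_mem_gb, a, gpu_mem_gb=80.0):
    """Toy cost: infinite if the shard does not fit, else scaled work plus a comm penalty."""
    if model_mem_gb / (a.tp * a.pp) > gpu_mem_gb:
        return math.inf
    penalty = 1.0 + 0.10 * (a.tp - 1) + 0.05 * (a.pp - 1) + 0.02 * (a.dp - 1)
    return work / a.n_gpus * penalty


def plan_cost(plan, calls):
    return sum(call_cost(work, mem, plan[name]) for name, (work, mem) in calls.items())


def mcmc_search(calls, cluster_gpus, steps=2000, beta=1.0, seed=0):
    """Sample plans with probability roughly proportional to exp(-beta * cost)."""
    rng = random.Random(seed)
    cands = candidate_assignments(cluster_gpus)
    start = max(cands, key=lambda a: a.tp * a.pp)  # most-sharded candidate
    current = {name: start for name in calls}
    cur_cost = plan_cost(current, calls)
    best, best_cost = dict(current), cur_cost
    names = list(calls)
    for _ in range(steps):
        proposal = dict(current)
        proposal[rng.choice(names)] = rng.choice(cands)  # symmetric proposal
        new_cost = plan_cost(proposal, calls)
        if math.isinf(new_cost):
            continue  # violates the memory constraint
        if new_cost <= cur_cost or rng.random() < math.exp(-beta * (new_cost - cur_cost)):
            current, cur_cost = proposal, new_cost
            if cur_cost < best_cost:
                best, best_cost = dict(current), cur_cost
    return best, best_cost


# Hypothetical workload: name -> (abstract work units, memory footprint in GB).
CALLS = {"actor_generate": (400.0, 140.0), "actor_train": (900.0, 560.0)}
best_plan, best_cost = mcmc_search(CALLS, cluster_gpus=8)
```

Rejecting infeasible proposals outright keeps the chain inside the memory-feasible region, while the occasional acceptance of a worse plan lets the sampler escape local minima that a purely greedy search would get stuck in.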

Performance and Implications

Experiments with LLaMA-2 models demonstrated speed-ups ranging from 2.0 to 10.6 times over existing systems, supporting the effectiveness of parameter reallocation. The results indicate that ReaLHF reduces communication costs and raises GPU utilization by running function calls concurrently on disjoint subsets of devices.
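The benefit of concurrent execution on disjoint device subsets can be illustrated with a small arithmetic sketch. The scaling model below is an assumption made up for this example (ideal scaling inflated by a per-GPU communication overhead) and the numbers are not measurements from the paper.

```python
def run_time(work, n_gpus, overhead_per_gpu=0.05):
    """Toy model: ideal time work / n_gpus, inflated as more GPUs must communicate."""
    return work / n_gpus * (1.0 + overhead_per_gpu * (n_gpus - 1))


def sequential_makespan(works, cluster_gpus):
    """Run every call one after another on the whole cluster."""
    return sum(run_time(w, cluster_gpus) for w in works)


def concurrent_makespan(works, cluster_gpus):
    """Give each call an equal, disjoint share of the cluster and run them together."""
    share = cluster_gpus // len(works)
    return max(run_time(w, share) for w in works)


# Two hypothetical independent calls of 100 work units each on 8 GPUs.
works = [100.0, 100.0]
print(sequential_makespan(works, 8), concurrent_makespan(works, 8))
```

Under this model, two calls sharing 8 GPUs finish sooner when each takes 4 GPUs than when each takes all 8 in turn, because the smaller meshes pay less communication overhead. With perfectly linear scaling (an overhead of zero) the two schedules would tie, so the gain comes entirely from avoiding over-parallelization.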

ReaLHF's advantage over baseline systems comes from adapting dynamically to the differing computational patterns within RLHF, such as those of generation and inference, without requiring manually configured resource allocation and parallelization strategies.

Future Perspectives

ReaLHF sets a precedent for future LLM training system designs by illustrating the potential of parameter reallocation and automated execution planning in complex RLHF workflows. The framework provides a foundation for subsequent research endeavors focusing on optimizing model training pipelines, particularly in scenarios where multi-model dependencies complicate resource management.

While promising, ReaLHF's techniques are primarily tuned for decoder-only transformer architectures and fixed workflows, which leaves open research opportunities in extending them to broader model types and dynamic dataflow configurations. Its implementation also points to unexplored avenues in combining optimizations that target individual function calls, such as memory-efficient attention mechanisms, with ReaLHF's execution framework.

ReaLHF exemplifies the ongoing evolution of AI system architecture, emphasizing the critical synergy between algorithmic innovation and hardware awareness to sustain the advancement of LLM applications.