PERL: A Leap in Computational Efficiency for Reinforcement Learning from Human Feedback

Introduction to PERL

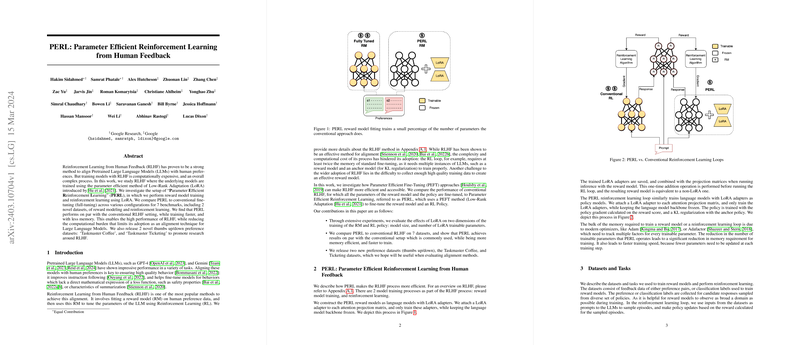

In the quest for aligning Pretrained LLMs with human preferences, Reinforcement Learning from Human Feedback (RLHF) emerges as a pivotal technique. However, the significant computational expense and intricacy involved in this training approach pose substantial challenges. Addressing these hurdles, the paper on "Parameter Efficient Reinforcement Learning from Human Feedback" introduces PERL, an innovative framework that leverages Low-Rank Adaptation (LoRA) for training models within the RLHF paradigm. Notably, PERL exhibits equivalent performance to traditional RLHF modes while ensuring a reduction in both training duration and memory requirements.

The Efficiency of PERL

PERL delineates a significant departure from conventional fine-tuning practices by incorporating LoRA into both the reward model training and the reinforcement learning phase. This integration facilitates the training of models with a substantially reduced number of parameters. Specifically, the paper reveals that PERL can operate by adjusting merely 0.1% of the total parameters present in a model. This efficiency is not at the expense of performance; PERL matches the results obtained through traditional full-parameter tuning across various benchmarks.

Deployment and Practical Implications

The deployment of PERL in learning settings highlights its practical benefits, notably in terms of memory efficiency and faster training speeds. For instance, in reward model training, a direct comparison to full-tuning setups demonstrates PERL's capability to halve the memory usage while accelerating the training process by 50%. Similar improvements are observed in the reinforcement learning phase, with memory savings of about 20% and a speed increase of 10%. Such enhancements make PERL a compelling choice for aligning LLMs with human preferences, an aspect crucial for diverse applications ranging from text summarization to UI automation.

Exploration of Datasets

The evaluation of PERL extended across a myriad of datasets, encompassing tasks like text summarization (e.g., Reddit TL;DR and BOLT Message Summarization), UI automation, and generating neutral viewpoint responses. The comprehensive analysis across these datasets not only showcases PERL's robustness but also its adaptability to distinct task domains. The introduction of the Taskmaster Coffee and Ticketing datasets further enriches the research landscape, providing new avenues for exploring RLHF methodologies.

Future Directions

The paper’s findings prompt a reevaluation of parameter tuning in reinforcement learning, particularly within the RLHF framework. The efficiency gains observed with PERL pave the way for broader adoption and experimentation, potentially expanding the horizons of LLM alignment techniques. Future investigations might delve into enhancing PERL’s cross-domain generalization capabilities or exploring more efficient ensemble models. The potential integration of recent advancements, such as weight-averaging models, could also contribute to mitigating reward hacking issues, thereby ensuring a more reliable and robust learning process.

In Summary

PERL represents a significant stride toward computational efficiency in reinforcement learning, particularly in the context of aligning LLMs with human preferences. By harnessing the power of LoRA, PERL achieves parity with traditional RLHF methods in performance metrics while substantially reducing the computational resources required. The release of new datasets alongside PERL’s validation across multiple benchmarks signals a promising direction for future research in AI alignment, promising to make reinforcement learning from human feedback an even more accessible and efficient process.