Overview of "GenQA: Generating Millions of Instructions from a Handful of Prompts"

The paper, titled "GenQA: Generating Millions of Instructions from a Handful of Prompts," addresses the challenges associated with creating large-scale datasets for finetuning LLMs. Traditionally, dataset creation involves substantial human input, which is both labor-intensive and costly. This dichotomy has led to a divide between academic datasets, which typically contain hundreds or thousands of samples, and industrial datasets, which can include tens of millions of samples. The paper presents methods for generating extensive instruction datasets autonomously through an LLM, effectively addressing the need for large-scale data without heavy human involvement.

Key Contributions

- Automated Dataset Creation: The primary contribution of this work is the introduction of GenQA, a method to automatically generate large instruction datasets using an LLM. The dataset leverages a small number of human-written meta-prompts to generate millions of diverse questions and answers. This approach significantly reduces the need for human-curated data, thus cutting down both time and financial costs.

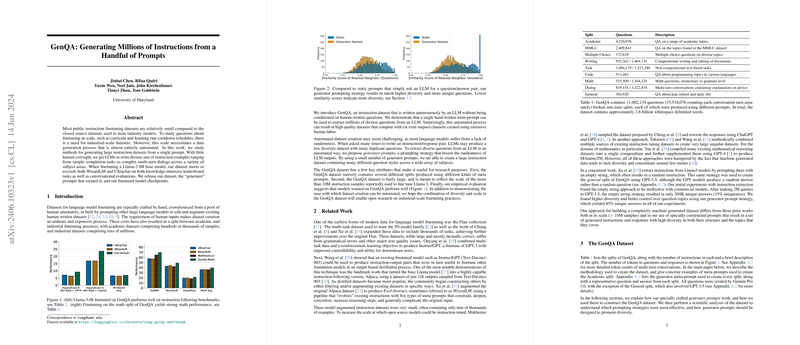

- Generator Prompts for Diversity: A central innovation in the paper is the use of "generator prompts" to enhance the diversity of the generated outputs. Traditional methods often suffer from low diversity as LLMs tend to collapse into a few frequent modes. Generator prompts mitigate this by creating lists of possible outputs internally within the prompt and then selecting from these lists in a randomized manner.

- Comprehensive Dataset Splits: GenQA is composed of several distinct splits, each tailored to different domains— Academic, MMLU, Multiple Choice, Writing, Task, Code, Math, Dialog, and General. Each split utilizes specific meta-prompts to ensure coverage of diverse question styles and subject areas. For instance, the Math split contains multi-turn conversations on various levels of mathematical problems, while the Code split focuses on programming and debugging tasks.

Evaluation and Results

The paper performs comprehensive evaluations, comparing models finetuned on GenQA against those finetuned on other datasets such as WizardLM and UltraChat. Key metrics included performance on knowledge-intensive tasks from the Huggingface Open LLM Leaderboard and conversational benchmarks such as AlpacaEval and MT-Bench. The main findings are:

- Conversational Benchmarks:

Models finetuned on GenQA outperformed those finetuned on comparable datasets on conversational benchmarks, with higher scores achieved on AlpacaEval and MT-Bench.

- Knowledge-Intensive Tasks:

On general knowledge benchmarks, the models trained on GenQA demonstrated performance on par with, or slightly below, models trained on WizardLM and UltraChat, reaffirming GenQA's viability as a high-quality dataset for instruction finetuning.

- Math Specific Tasks:

Finetuned models on the Math split of GenQA outperformed other datasets on mathematical reasoning tasks, showcasing GenQA's ability to generate high-quality, domain-specific instructions.

Implications and Future Directions

The approach introduced by this paper has significant implications for the scalability of LLM finetuning. By reducing the reliance on human-curated data, it opens up new possibilities for creating specialized datasets on-demand. This can further democratize access to high-quality datasets, potentially leveling the playing field between academic researchers and industry practitioners.

The practical implications include:

- Cost and Efficiency:

The methods proposed can drastically reduce the cost and time associated with dataset creation, making it feasible to maintain up-to-date and extensive datasets tailored to specific domains or applications.

- Customizability:

Researchers and developers can quickly generate new datasets or augment existing ones to address specific blind spots, thereby improving the generalization capabilities of LLMs.

Speculation on Future Developments

In the broader context of AI developments, the automated data generation technique presented could be extended to various domains beyond text-based tasks, including multimodal data combining text, images, and possibly other sensor data. Future work may involve refining the generator prompts to further enhance output quality and diversity, leveraging more sophisticated prompt engineering techniques, and exploring automated methods for prompt tuning and validation.

The research sets a precedent for future works aiming to balance between data quality, diversity, and the logistical constraints of dataset creation. As the LLM technology evolves, incorporating more nuanced and complex generator prompts could yield even richer datasets, improving the performance of models across a broader spectrum of tasks.

In conclusion, "GenQA: Generating Millions of Instructions from a Handful of Prompts" presents a compelling solution to the challenges of large-scale dataset creation. It offers a novel, efficient, and scalable approach that bridges the gap between small academic datasets and expansive industrial datasets, paving the way for more accessible and diverse data resources in the community.