Scaling Instruction-Finetuned LLMs

The paper "Scaling Instruction-Finetuned Language Models" investigates how instruction finetuning of LLMs scales along three axes: (1) the number of finetuning tasks, (2) the model size, and (3) the inclusion of chain-of-thought (CoT) data in the finetuning mixture. It evaluates the resulting models across several setups and benchmarks, including MMLU, BIG-Bench Hard (BBH), TyDiQA, and MGSM.

Key Findings

Impact of Scaling

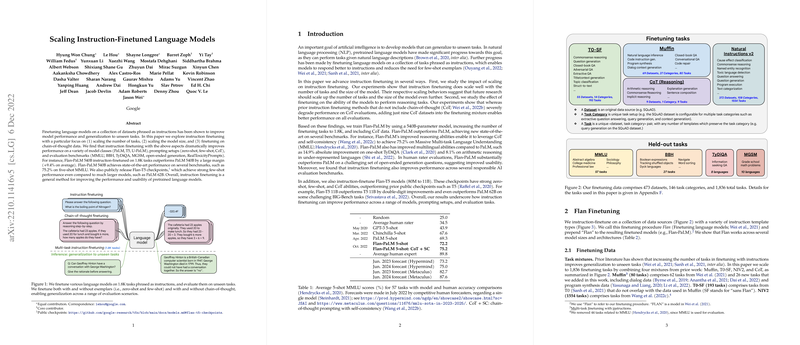

The paper systematically explores the benefits of scaling both the number of tasks and the model size. Scaling is performed on three PaLM model sizes (8B, 62B, and 540B parameters) by sequentially adding task mixtures, starting with the mixture that has the fewest tasks and ending with the one that has the most (CoT, Muffin, T0-SF, and NIV2). Instruction finetuning yields substantial improvements over no finetuning at all three sizes, and the largest PaLM model (540B parameters) reaches the highest absolute scores. The gains from adding tasks taper off, with most of the improvement coming from the first 282 tasks, whereas scaling the model size continues to improve performance.
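The sequential scaling setup can be illustrated with a small sketch. The per-mixture task counts below are the ones reported in the paper (they do not appear in this summary), and the names `MIXTURES` and `cumulative_task_counts` are hypothetical helpers introduced only for illustration, not part of any released code.

```python
from itertools import accumulate

# Mixtures in the order they are added, with the task counts reported in the
# paper. Treat these as illustrative inputs, not as an official configuration.
MIXTURES = [("CoT", 9), ("Muffin", 80), ("T0-SF", 193), ("NIV2", 1554)]


def cumulative_task_counts(mixtures):
    """Return (label, total_tasks) for each step of the sequential scaling."""
    names = [name for name, _ in mixtures]
    totals = list(accumulate(count for _, count in mixtures))
    return [(" + ".join(names[: i + 1]), total) for i, total in enumerate(totals)]


for label, total in cumulative_task_counts(MIXTURES):
    print(f"{total:>5} tasks: {label}")
```

Each printed row corresponds to one finetuning configuration on the task-scaling axis; the third row (CoT + Muffin + T0-SF) is the 282-task point after which additional tasks help less.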

Chain-of-Thought Finetuning

The paper emphasizes the importance of including CoT data in the finetuning mixture. Models finetuned with CoT data perform better on tasks that require multi-step reasoning. For instance, the Flan-PaLM 540B model reaches a new state-of-the-art performance on five-shot MMLU with a score of 75.2%. The model also shows significant improvements on multilingual and complex reasoning tasks when CoT prompting is combined with self-consistency, that is, sampling several reasoning paths and keeping the most common final answer.
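Self-consistency can be sketched as a majority vote over the final answers of several sampled CoT completions. This is a minimal illustration, not the paper's implementation: `fake_sampler` stands in for a real model call, and the "The answer is ..." completion format is an assumption made so that the answer can be parsed.

```python
import re
from collections import Counter


def extract_answer(completion):
    """Return the text after the last 'The answer is', or None if absent."""
    matches = re.findall(
        r"The answer is\s*(.+?)\.?\s*$", completion, flags=re.MULTILINE
    )
    return matches[-1].strip() if matches else None


def self_consistent_answer(sample_fn, prompt, num_samples=5):
    """Sample several reasoning paths and return the most common final answer."""
    answers = [extract_answer(sample_fn(prompt)) for _ in range(num_samples)]
    votes = Counter(a for a in answers if a is not None)
    return votes.most_common(1)[0][0] if votes else None


# Hypothetical stand-in for a model sampled at non-zero temperature.
_canned = iter([
    "There are 3 boxes with 4 pens each. 3 * 4 = 12. The answer is 12.",
    "3 + 4 = 7. The answer is 7.",
    "Each box has 4 pens, so 4 + 4 + 4 = 12. The answer is 12.",
])


def fake_sampler(prompt):
    return next(_canned)


print(self_consistent_answer(fake_sampler, "Q: 3 boxes of 4 pens. How many pens?", 3))
```

Here two of the three sampled paths agree on 12, so the vote discards the faulty path. In practice `sample_fn` would call a model, and the answer parser would need to match that model's output format.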

Generalization Across Models

The research extends instruction finetuning to multiple model families, including T5, PaLM, and U-PaLM, of various sizes (from 80M to 540B parameters). The performance benefits are consistent across architectures and sizes, highlighting the robustness and scalability of instruction finetuning. Interestingly, relatively smaller models like Flan-T5-XXL (11B parameters) outperform much larger models like PaLM 62B on certain evaluation metrics.

Practical and Theoretical Implications

The results have significant implications for both the practical deployment and theoretical understanding of LLMs. Practically, the improvements in CoT capabilities and multilingual understanding make these models more versatile and effective in diverse real-world applications. Theoretically, the paper provides empirical evidence that instruction finetuning is a highly effective way to enhance the capabilities of LLMs.

Speculative Future Developments

Future research could investigate:

- The benefits of even larger task collections beyond the current threshold.

- The fusion of instruction finetuning with other training paradigms, such as reinforcement learning from human feedback.

- The exploration of CoT with even more specialized or diverse datasets to further enhance reasoning capabilities.

- The investigation of hybrid models leveraging multiple architectures and pre-training objectives.

Responsible AI Considerations

The paper also touches on responsible AI by evaluating the models on benchmarks measuring toxic language harms and the potential for representational biases. The instruction-finetuned models demonstrate lower toxicity probabilities and reduced bias across identity dimensions compared to their non-finetuned counterparts, making them preferable for safer deployment.

Conclusion

The paper establishes the efficacy of instruction finetuning as a robust, scalable method to enhance the performance and usability of pretrained LLMs. By demonstrating improvements across a wide range of tasks and model sizes, the paper provides compelling evidence for the adoption of instruction finetuning in future LLM deployments.