A Comprehensive Benchmark for Robust Multi-image Understanding

Introduction

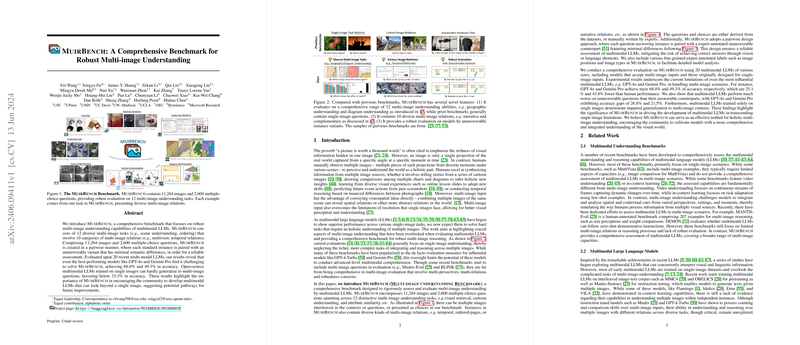

The paper introduces a comprehensive benchmark named MUIRBench, which aims to rigorously evaluate the multi-image understanding capabilities of multimodal LLMs. It recognizes that while humans can naturally synthesize information from multiple images to perceive the world holistically, current multimodal LLMs, including state-of-the-art models such as GPT-4o and Gemini Pro, struggle to achieve similar levels of comprehension when dealing with multiple images.

Benchmark Design and Objectives

MUIRBench comprises 11,264 images and 2,600 multiple-choice questions spanning 12 diverse multi-image understanding tasks. These tasks range from visual retrieval and cartoon understanding to geographic understanding and diagram understanding, and they cover 10 categories of multi-image relations, such as temporal sequences, multi-view perspectives, and ordered pages. Notably, MUIRBench adopts a pairwise design: each answerable question is paired with an unanswerable counterpart that differs from it only minimally. Because the two variants look almost identical, a model that relies on superficial shortcuts rather than on the image content will tend to fail one of them, which makes the evaluation more robust.
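The pairwise design described above can be illustrated with a small sketch. The `Question` record, the `make_unanswerable` helper, the wording of `NONE_OPTION`, and the sample item are all hypothetical and are not taken from the benchmark's data format. The sketch shows only one plausible way to derive an unanswerable counterpart with a minimal difference, namely swapping the correct option for a distractor so that no listed choice is right.

```python
from dataclasses import dataclass, replace
from typing import List

# Hypothetical wording for the "no valid answer" choice (an assumption).
NONE_OPTION = "None of the choices provided"


@dataclass(frozen=True)
class Question:
    pair_id: str          # shared by an answerable item and its counterpart
    images: List[str]     # paths or IDs of the images in the question
    prompt: str
    options: List[str]
    answer: str
    answerable: bool = True


def make_unanswerable(q: Question, distractor: str) -> Question:
    """Replace the correct option with a distractor so no content option is right."""
    if q.answer not in q.options:
        raise ValueError("answer must be one of the options")
    options = [distractor if o == q.answer else o for o in q.options]
    if NONE_OPTION not in options:
        options.append(NONE_OPTION)
    # Everything else (images, prompt, pair_id) stays identical: a minimal edit.
    return replace(q, options=options, answer=NONE_OPTION, answerable=False)


# Made-up example item, for illustration only.
original = Question(
    pair_id="demo-001",
    images=["view_front.png", "view_side.png"],
    prompt="Which object appears in both images?",
    options=["a red mug", "a blue chair", "a green lamp", NONE_OPTION],
    answer="a red mug",
)
counterpart = make_unanswerable(original, distractor="a yellow vase")
```

Keeping a shared `pair_id` makes it easy to score each pair jointly, so a model gets credit only when it handles both the answerable and the unanswerable variant.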

Experimental Evaluation

An extensive evaluation on MUIRBench was conducted across 20 recent multimodal LLMs, including models that natively support multi-image inputs and those traditionally designed for single-image inputs. The findings reveal substantial performance gaps: even the best-performing models, GPT-4o and Gemini Pro, achieved only 68.0% and 49.3% accuracy, respectively. Open-source multimodal LLMs trained on single images generalized poorly to multi-image questions, with accuracies below 33.3%.

Key Findings and Model Performance

The comprehensive assessment on MUIRBench highlighted several crucial aspects:

- Holistic Multi-image Understanding: Multimodal LLMs showed significant difficulties in holistic multi-image tasks. For instance, while GPT-4o achieved relatively high accuracy on tasks like image-text matching and visual retrieval, it struggled considerably with tasks that require integrating details and reasoning across multiple images, such as multi-image ordering and visual grounding.

- Robustness to Unanswerable Questions: The introduction of unanswerable question variants revealed that models frequently fail to recognize what they do not know. Accuracy on the unanswerable variants was notably lower than on their answerable counterparts, suggesting that current models are prone to overconfidence and lack reliable mechanisms for abstaining when no valid answer exists.

- Training Data and Model Size: The results underscored the importance of training data and processes. There was no evident correlation between model size and performance, indicating that mere scaling of model parameters does not suffice for developing robust multi-image understanding. Instead, targeted training on diverse multi-image datasets is essential.
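The robustness finding above rests on comparing accuracy on answerable and unanswerable variants. A minimal sketch of that comparison follows. The record fields (`answerable`, `answer`, `prediction`) and the toy predictions are assumptions made for illustration; they are not results from the paper.

```python
from collections import defaultdict


def accuracy_by_answerability(records):
    """Return accuracy separately for answerable and unanswerable items."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for r in records:
        key = "answerable" if r["answerable"] else "unanswerable"
        totals[key] += 1
        correct[key] += int(r["prediction"] == r["answer"])
    return {key: correct[key] / totals[key] for key in totals}


# Toy, made-up predictions: "E" stands for a hypothetical "none of the above".
records = [
    {"answerable": True, "answer": "A", "prediction": "A"},
    {"answerable": True, "answer": "B", "prediction": "B"},
    {"answerable": True, "answer": "C", "prediction": "C"},
    {"answerable": True, "answer": "D", "prediction": "A"},
    {"answerable": False, "answer": "E", "prediction": "E"},
    {"answerable": False, "answer": "E", "prediction": "A"},
    {"answerable": False, "answer": "E", "prediction": "B"},
    {"answerable": False, "answer": "E", "prediction": "C"},
]

scores = accuracy_by_answerability(records)
gap = scores["answerable"] - scores["unanswerable"]
print(scores, gap)
```

A large positive `gap` on real predictions would indicate that a model answers confidently even when none of the offered options is correct.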

Error Analysis

Error analysis provided additional insights. A common error category involved failures in capturing nuances and details within images, which constituted 26% of the errors. Logical reasoning errors and difficulties in object counting were also prevalent, indicating areas where current models require substantial improvements.

Implications and Future Directions

The findings from MUIRBench have important implications for the future development of multimodal LLMs.

- Improved Training Paradigms: There is a clear need for training paradigms that teach models to synthesize and reason over multiple images. This could involve leveraging large-scale, curated multi-image datasets and refining architectures to better handle multi-image inputs.

- Robustness and Uncertainty Quantification: Future research should focus on enhancing the robustness of multimodal LLMs, particularly their ability to handle unanswerable questions gracefully. Techniques such as uncertainty quantification and abstention mechanisms could be crucial in this regard.

- Expanding Task Diversity: Incorporating a broader range of tasks and image relations will further stress-test models and uncover latent weaknesses, driving continual improvements in multimodal understanding capabilities.
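As one concrete reading of the abstention mechanisms mentioned above, a model could decline to answer whenever its confidence in the top option is low. The sketch below is not a method from the paper: the function names, the use of softmax over per-option scores, and the `threshold` value are all assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Convert raw option scores into probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def answer_or_abstain(option_scores, threshold=0.5):
    """Return the index of the best option, or None to abstain.

    The model abstains when its highest option probability is below
    `threshold` (a hypothetical value that would need tuning).
    """
    probs = softmax(option_scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None


confident = answer_or_abstain([4.0, 0.0, 0.0, 0.0])   # one option dominates
uncertain = answer_or_abstain([1.0, 1.0, 1.0, 1.0])   # all options tie
```

In a benchmark with unanswerable variants, an abstention could be mapped to the "no valid answer" choice, which ties this kind of uncertainty estimate directly to the robustness evaluation.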

Conclusion

MUIRBench stands out as a rigorous, comprehensive benchmark specifically designed to push the boundaries of multi-image understanding in multimodal LLMs. The current limitations of even the most advanced models underscore the need for refined training methodologies and robust evaluation frameworks. By providing a meticulous assessment of multi-image reasoning tasks, MUIRBench paves the way for developing next-generation models capable of transcending single-image limitations and achieving holistic visual comprehension.