An Analysis of "OccamLLM: Fast and Exact LLM Arithmetic in a Single Step"

The paper "OccamLLM: Fast and Exact LLM Arithmetic in a Single Step" addresses a persistent weakness of large language models (LLMs): the accurate execution of complex arithmetic operations. Despite their broad capabilities in text generation and reasoning, LLMs remain unreliable at arithmetic, which limits applications such as educational tools and automated research assistants.

Overview of OccamLLM

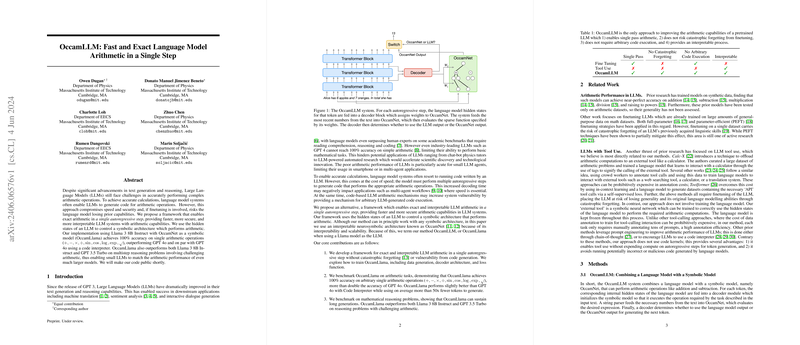

The authors propose OccamLLM, a framework that integrates a symbolic architecture called OccamNet with an LLM to perform exact arithmetic operations in a single autoregressive step. This approach sidesteps the common reliance on LLM-generated code for arithmetic tasks, which can slow down computation and introduce security vulnerabilities. The method is therefore both faster and more secure, and its results are interpretable. Because it does not depend on fine-tuning the LLM, the model's prior capabilities are not compromised.

Methodology and Implementation

OccamLLM leverages the internal hidden states of LLMs to control OccamNet, enabling precise arithmetic computations. The integration, termed OccamLlama when using a Llama model, was tested using Llama 3 in conjunction with OccamNet. Key capabilities include performing fundamental operations such as addition, subtraction, multiplication, division, and more complex functions like trigonometric and logarithmic calculations with 100% accuracy.
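The control flow described above can be illustrated with a small sketch. This is not the authors' implementation: the operation table `OPS`, the helper `symbolic_step`, the decoder weights `w_op` and `w_args`, and the three-dimensional "hidden state" are all hypothetical stand-ins chosen for illustration. The sketch assumes that a learned linear decoder maps the LLM's hidden state to logits over operations and over the numbers found in the prompt, and that the selected operation is then evaluated exactly by ordinary code rather than by token generation.

```python
import math

# Hypothetical operation table: name -> (arity, exact implementation).
# The operation set in the paper is larger; this is only an illustration.
OPS = {
    "add": (2, lambda a, b: a + b),
    "sub": (2, lambda a, b: a - b),
    "mul": (2, lambda a, b: a * b),
    "div": (2, lambda a, b: a / b),
    "sqrt": (1, math.sqrt),
    "log": (1, math.log),
}
OP_NAMES = list(OPS)


def argmax(xs):
    """Index of the largest element (first one on ties)."""
    return max(range(len(xs)), key=lambda i: xs[i])


def linear(weights, hidden):
    """Multiply a weight matrix (list of rows) by the hidden-state vector."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in weights]


def symbolic_step(hidden, numbers, w_op, w_args):
    """Select an operation and its operands from `hidden`, then compute exactly.

    `w_op` has one row per operation; `w_args[slot]` has one row per
    candidate input number. Both are assumed, untrained toy weights.
    """
    op_name = OP_NAMES[argmax(linear(w_op, hidden))]
    arity, fn = OPS[op_name]
    args = []
    for slot in range(arity):
        logits = linear(w_args[slot], hidden)[: len(numbers)]
        args.append(numbers[argmax(logits)])
    return op_name, args, fn(*args)


# Toy weights: a hidden state of [1, 0, 0] selects "mul" on (numbers[0], numbers[1]);
# a hidden state of [0, 1, 0] selects "sqrt".
w_op = [[0, 0, 0], [0, 0, 0], [1, 0, 0], [0, 0, 0], [0, 1, 0], [0, 0, 0]]
w_args = [
    [[1, 0, 0], [0, 0, 0]],  # first operand slot prefers numbers[0]
    [[0, 0, 0], [1, 0, 0]],  # second operand slot prefers numbers[1]
]

op_name, args, result = symbolic_step([1.0, 0.0, 0.0], [1234567, 7654321], w_op, w_args)
print(op_name, args, result)
```

The point of the design is that the network only has to choose what to compute. The arithmetic itself is done by exact routines, so accuracy does not degrade as the operands grow, and the chosen operation and operands can be inspected directly.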

Results and Comparative Analysis

Benchmarked against GPT-4o, both with and without a code interpreter, OccamLlama significantly outperforms both variants, achieving exact arithmetic in fewer computational steps. Specifically, it surpasses GPT-4o in both accuracy and token efficiency, requiring on average more than 50 times fewer tokens.

The paper's comparison table shows OccamLLM to be the only approach that achieves single-pass arithmetic without risking catastrophic forgetting or requiring arbitrary code execution, while keeping the process transparent.

Implications and Future Directions

OccamLLM's ability to improve arithmetic performance in LLMs has significant implications for practical applications, particularly in fields that require fast and reliable mathematical processing. Its architecture offers a promising model for integrating symbolic reasoning into broader AI systems, potentially extending to more complex computational tasks.

The paper suggests that future research could develop OccamLLM further by integrating additional tools, extending the architecture to accommodate multi-layer symbolic computations, and improving the OccamLLM switch so that it handles complex generation prompts better. It also notes that OccamLLM could benefit larger models such as GPT-4o, which suggests the approach may apply across a range of AI systems.

In summary, OccamLLM introduces a significant advancement in combining LLMs with symbolic models to tackle arithmetic challenges, setting the stage for more sophisticated AI systems capable of both deep reasoning and precise computation.