Program-Aided LLMs: Enhancing Reasoning with External Interpreters

The paper under discussion presents a method for augmenting large language models (LLMs) by delegating computation to an external program interpreter. The approach, called Program-aided Language Models (PaL), targets a known weakness of LLMs in arithmetic and logical reasoning: even cutting-edge models often decompose a problem correctly and then make calculation errors when carrying out the steps.

Core Contributions and Experimental Insights

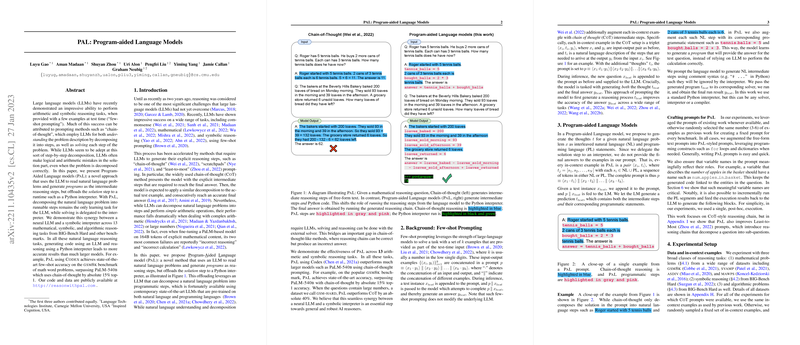

The central premise of PaL is to combine neural and symbolic reasoning: the LLM generates a program, and an external interpreter, specifically a Python runtime, executes it. This design splits the problem-solving responsibility. The LLM decomposes the problem into logical steps and writes them as runnable code, while the Python interpreter executes those steps to compute the result. This contrasts with approaches that rely on the neural model for both decomposition and execution, where a correct decomposition can still end in a wrong answer because of an arithmetic slip.
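The division of labor described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the string `GENERATED_PROGRAM` stands in for what an LLM might return, the word problem is chosen only for illustration, and the helper `run_generated_program` and the convention of storing the result in a variable named `answer` are assumptions made here.

```python
# Hypothetical model output for the question: "Olivia has $23. She bought
# five bagels for $3 each. How much money does she have left?"
# In a real pipeline this string would come from an LLM call.
GENERATED_PROGRAM = """
money_initial = 23
bagels = 5
bagel_cost = 3
money_spent = bagels * bagel_cost
answer = money_initial - money_spent
"""


def run_generated_program(source: str):
    """Execute generated code and return the value bound to `answer`.

    The `answer` naming convention is an assumption of this sketch.
    """
    namespace = {}
    exec(source, namespace)  # the interpreter, not the LLM, does the arithmetic
    return namespace["answer"]


result = run_generated_program(GENERATED_PROGRAM)
print(result)  # 8
```

Model-generated code is untrusted input, so a real system would run it in a sandboxed process with a timeout rather than through a bare `exec` as shown here.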

The approach is evaluated on mathematical, symbolic, and algorithmic reasoning tasks drawn from BIG-Bench Hard and other benchmarks. PaL outperforms chain-of-thought (CoT) prompting, particularly on problems that involve arithmetic with large numbers. For instance, the paper reports a 15% absolute improvement in few-shot accuracy on the GSM8K benchmark over CoT prompting with the much larger PaLM-540B model. PaL also handles problems with large numerical values without a significant drop in performance, a setting that typically challenges CoT-based LLMs.
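One way to see why large values are less of a problem in this setup: once the reasoning is expressed as code, the same program applies to any operands, and Python integers have arbitrary precision. The function `solve` and the numbers below are hypothetical and are not taken from the paper's benchmarks.

```python
def solve(initial: int, count: int, unit_cost: int) -> int:
    """Remaining amount after buying `count` items at `unit_cost` each."""
    spent = count * unit_cost
    return initial - spent


# Same reasoning steps, small operands.
small = solve(23, 5, 3)

# Same reasoning steps, large operands. Python ints do not overflow,
# so the interpreter's result stays exact.
large = solve(2_300_000_017, 512_345, 3_127)

print(small)
print(large)
```

A model producing the answer token by token would have to carry out the multiplication itself, which is where errors tend to appear as the operands grow.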

Methodological Advantages

- Decoupled Reasoning and Execution: By expressing the reasoning as program steps that a Python interpreter runs, PaL removes arithmetic errors from the execution stage, provided the generated program is itself correct. This separation leverages the strengths of each component: the LLM’s proficiency in natural language understanding and decomposition, and the interpreter’s deterministic computation.

- Transferability Across Tasks: PaL is applied to diverse reasoning tasks, from mathematical problems to counting and date manipulation. The paper reports gains over CoT-based baselines across these domains, which suggests the method is useful beyond mathematical reasoning.

- Scalability and Efficiency: The paper reports experiments with several LLMs, including weaker models and models not specifically trained on code. The results suggest that the relative improvement remains roughly consistent across model scales.
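To make the non-mathematical task types in the list above concrete, here is a sketch of what generated programs for date manipulation and counting might look like. Both questions, the function names `date_ten_days_ago` and `count_vegetables`, and the data are hypothetical illustrations, not prompts or outputs from the paper.

```python
from datetime import datetime, timedelta


def date_ten_days_ago(today_str: str) -> str:
    """Hypothetical program for: 'Today is <date>. What was the date
    10 days ago, in MM/DD/YYYY?'"""
    today = datetime.strptime(today_str, "%m/%d/%Y")
    target = today - timedelta(days=10)
    return target.strftime("%m/%d/%Y")


def count_vegetables(items: dict) -> int:
    """Hypothetical program for: 'I have two carrots, a piano, three heads
    of broccoli, and a violin. How many vegetables do I have?'"""
    vegetables = {"carrot", "broccoli"}  # classification step done by the LLM
    return sum(qty for name, qty in items.items() if name in vegetables)


date_answer = date_ten_days_ago("02/27/2017")
count_answer = count_vegetables(
    {"carrot": 2, "piano": 1, "broccoli": 3, "violin": 1}
)

print(date_answer)   # 02/17/2017
print(count_answer)  # 5
```

In both cases the model's contribution is the decomposition (which quantities matter, which items are vegetables), while calendar arithmetic and summation are left to the interpreter.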

Theoretical Implications

The use of interpreters points to one way of integrating neural and symbolic methods: the LLM handles natural language understanding, while precise computation is outsourced to a symbolic engine. This hybrid approach may prompt a reconsideration of how future models are trained, with more emphasis on tool use and the integration of non-neural components.

Practical Implications and Future Directions

PaL introduces a framework that paves the way for more reliable AI systems, particularly in high-stakes domains like finance or data science, where both reasoning and computational accuracy are paramount. The method encourages the exploration of other types of interpreters or symbolic processors that can handle varying reasoning tasks and integrate into existing AI ecosystems.

Looking forward, future research could extend the PaL framework to other domains involving structured reasoning or explore training methodologies that inherently integrate symbolic and neural reasoning capabilities. Furthermore, dialog-based AI systems could greatly benefit from PaL’s structure, particularly for user queries that require logical deductions and precise calculations.

In conclusion, the paper shows that pairing an LLM with a symbolic executor can make neural reasoning more reliable: the model plans, and the interpreter computes. Beyond its reported results, the work invites broader consideration of hybrid systems in future AI research.