TSpec-LLM: An In-Depth Analysis of a Telecommunications Dataset for LLMs

Introduction

The paper "TSpec-LLM: An Open-source Dataset for LLM Understanding of 3GPP Specifications" introduces TSpec-LLM, a comprehensive dataset designed to help large language models (LLMs) process and understand 3rd Generation Partnership Project (3GPP) standards. The dataset covers all 3GPP documents from Release 8 to Release 19 (spanning 1999 to 2023) and is structured to support training and fine-tuning LLMs for telecommunications tasks.

Main Contributions

The paper makes three main contributions:

- TSpec-LLM Dataset:

- Comprehensive Coverage: The dataset includes all 3GPP documents from Release 8 to Release 19, totaling 13.5 GB of data with 30,137 documents and 535 million words.

- Detailed Content Preservation: Unlike previous efforts, TSpec-LLM retains original content from tables and formulas within 3GPP specifications, ensuring that essential technical details are not lost.

- Open-Source Availability: The dataset, along with the associated questionnaire and prompts, is available open-source, encouraging further research and development in this domain.

- Automated Questionnaire Generation:

- The authors developed a method to automatically generate technical questions from 3GPP documents using the GPT-4 API. The generated questions were then validated with the open-source Mixture of Experts model Mistral and checked by humans, yielding a vetted set of test questions for evaluating LLM performance.

- Performance Assessment and Enhancement:

- The paper assessed the baseline performance of GPT-3.5, GPT-4, and Gemini Pro 1.0 on domain-specific questions. Their baseline accuracies were 44%, 51%, and 46%, respectively.

- The authors proposed a naive-RAG (Retrieval-Augmented Generation) framework, which significantly improved the performance of these models, boosting their accuracy to 71%, 75%, and 72%, respectively.
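To make the size of the improvement concrete, the short sketch below computes the absolute gain (in percentage points) and the relative gain for each model, using only the accuracies quoted above. The helper name `accuracy_gains` is illustrative and does not come from the paper.

```python
# Accuracies in percent, copied from the figures quoted in this summary.
baseline = {"GPT-3.5": 44, "GPT-4": 51, "Gemini Pro 1.0": 46}
with_rag = {"GPT-3.5": 71, "GPT-4": 75, "Gemini Pro 1.0": 72}


def accuracy_gains(before, after):
    """Return {model: (absolute gain in points, relative gain in percent)}.

    Hypothetical helper for illustration; not part of the paper's code.
    """
    gains = {}
    for model, base in before.items():
        absolute = after[model] - base          # percentage points
        relative = 100.0 * absolute / base      # percent of the baseline
        gains[model] = (absolute, round(relative, 1))
    return gains


gains = accuracy_gains(baseline, with_rag)
for model, (absolute, relative) in gains.items():
    print(f"{model}: +{absolute} points ({relative}% relative to baseline)")
```

On these figures, every model gains between 24 and 27 percentage points, and the weakest baseline (GPT-3.5) shows the largest relative improvement.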

Evaluation and Results

The evaluation proceeded as follows:

- Utilizing TSpec-LLM for RAG:

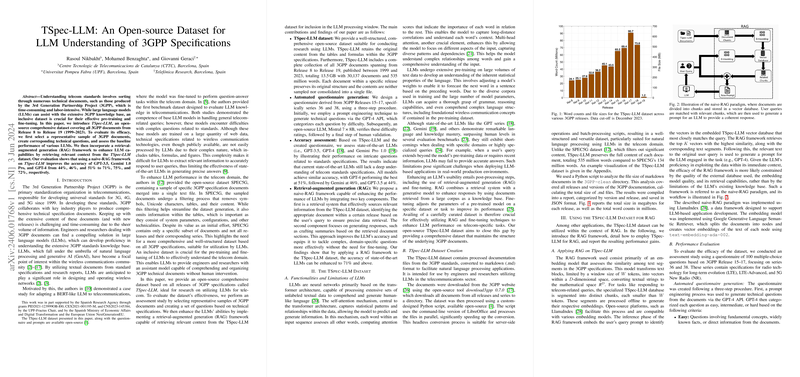

- Documents are divided into manageable chunks which are then embedded into a vector space for efficient similarity searches using Google's semantic retrieval model.

- When a user query is processed, the RAG framework retrieves the most relevant chunks and integrates them into the LLM's processing window, significantly enhancing the model's accuracy in answering technical questions.

- Accuracy by Category:

- The naive-RAG framework showed substantial improvements across all difficulty levels (easy, intermediate, and hard). For hard questions, baseline models struggled (16-36% accuracy), whereas RAG boosted performance to 66%.

- Comparison with SPEC5G:

- The TSpec-LLM dataset demonstrated superior performance over SPEC5G, showcasing the importance of retaining detailed content from original 3GPP documents. TSpec-LLM with RAG achieved an overall accuracy of 75%, compared to 60% with SPEC5G.
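The chunk, embed, and retrieve steps described under "Utilizing TSpec-LLM for RAG" can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the paper uses Google's semantic retrieval model for embeddings, whereas this sketch swaps in a simple bag-of-words vector with cosine similarity so that it runs without external services. All function names, the chunk sizes, and the toy passages are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into overlapping chunks of at most chunk_size words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


def embed(text):
    """Toy embedding: bag-of-words term counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, chunks, top_k=2):
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query, retrieved):
    """Place the retrieved chunks ahead of the question in the LLM prompt."""
    context = "\n\n".join(retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Toy passages standing in for specification text (not real 3GPP content).
toy_chunks = [
    "alpha beta gamma",
    "subcarrier spacing table for numerology",
    "handover procedure between cells",
]
question = "What is the subcarrier spacing?"
print(build_prompt(question, retrieve(question, toy_chunks, top_k=1)))
```

In a real pipeline, `embed` would call a learned embedding model and the chunks would be stored in a vector index, but the control flow (chunk, embed, rank by similarity, prepend to the prompt) stays the same.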

Implications and Future Directions

Practical Implications:

- The TSpec-LLM dataset is poised to be a valuable resource for the telecommunications industry and academia, facilitating the development of domain-specific LLMs.

- The improvements shown by incorporating RAG frameworks signal potential for deploying such systems in real-world applications, reducing the labor and time involved in understanding complex telecom standards.

Theoretical Implications:

- This paper underscores the necessity of well-structured, comprehensive datasets in enhancing the performance of LLMs in specialized domains.

- By demonstrating the efficacy of RAG frameworks, it opens avenues for further research into optimizing retrieval strategies and indexing methods tailored to domain-specific tasks.

Future Developments:

- Future work will involve refining the indexing strategies within RAG frameworks to ensure higher retrieval quality and accuracy.

- Expanding the questionnaire and enhancing the fine-tuning process of smaller open-source models, such as Phi3, using TSpec-LLM will be crucial for developing robust, telecom-specific LLMs.

- The deployment of these fine-tuned models using efficient inference libraries could further broaden their accessibility and utility in browser-based applications.

Conclusion

The TSpec-LLM dataset represents a significant advancement in the application of LLMs within telecommunications. The thorough evaluation and the marked performance improvements via the naive-RAG framework emphasize the dataset's potential. This paper lays a solid foundation for future research and practical implementations aimed at leveraging LLMs for better understanding and managing the extensive 3GPP standards.