Analysis of "Telco-RAG: Navigating the Challenges of Retrieval-Augmented LLMs for Telecommunications"

The paper "Telco-RAG: Navigating the Challenges of Retrieval-Augmented LLMs for Telecommunications" introduces Telco-RAG, a framework designed to address the specific challenges of deploying Retrieval-Augmented Generation (RAG) systems in the telecommunications domain. Telecom standards, particularly those developed by the 3rd Generation Partnership Project (3GPP), are intricate and evolve rapidly, and Telco-RAG is tailored to make LLMs easier to deploy and more effective in this technical field.

Core Contributions

The paper identifies several critical challenges in implementing RAG systems for telecommunications: sensitivity to hyperparameters, vague user queries, high memory usage, and sensitivity to prompt quality. Telco-RAG addresses these challenges through the following innovations:

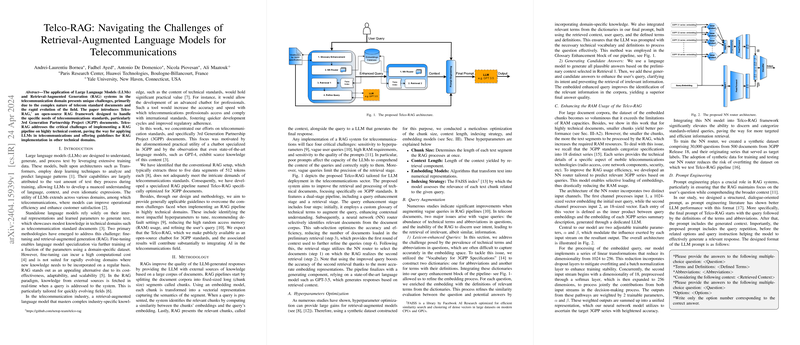

- Optimized RAG Pipeline: By introducing a dual-stage retrieval and query enhancement process, Telco-RAG refines the retrieval of telecom-relevant technical documents and improves response accuracy. The process uses a custom glossary to augment queries with technical terms and their definitions, so that queries match the vocabulary of the 3GPP documentation more closely.

- Hyperparameter Tuning and Query Augmentation: The paper tunes key parameters such as chunk size, context length, and indexing strategy, and finds that they have a significant effect on accuracy. Smaller chunk sizes and extended context lengths improve accuracy; reducing chunk size from 500 to 125 tokens alone yields a 2.9% accuracy gain. Additionally, enriching user queries with generated candidate answers improves accuracy by 2.06% to 3.56%.

- Enhanced Memory Efficiency: A neural network (NN) router selectively loads only the embeddings relevant to the user's query, reducing RAM usage by approximately 45% compared to benchmark models.

- Advanced Prompt Engineering: By employing a structured, dialogue-oriented prompt, Telco-RAG enhances the LLM's ability to process complex telecom queries, resulting in a 4.6% boost in accuracy compared to standard prompt formats.
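
Three of the ideas in the list above (glossary-based query augmentation, small fixed-size chunks, and router-driven selective loading) can be illustrated with a minimal Python sketch. This is not the authors' implementation. All names here (`GLOSSARY`, `augment_query`, `chunk_tokens`, `select_groups`) are hypothetical, whitespace-separated words stand in for a real tokenizer, and the router scores are supplied by hand rather than produced by a neural network.

```python
from typing import Dict, List

# Hypothetical mini-glossary for illustration only; a real glossary would be
# built from the vocabulary of the 3GPP documents.
GLOSSARY: Dict[str, str] = {
    "RRC": "Radio Resource Control",
    "UE": "User Equipment",
}


def augment_query(query: str, glossary: Dict[str, str]) -> str:
    """Append definitions of any glossary terms that appear in the query."""
    words = {w.strip(".,?!()") for w in query.split()}
    found = [f"{term}: {definition}"
             for term, definition in glossary.items() if term in words]
    if not found:
        return query
    return query + "\nTerms and definitions:\n" + "\n".join(found)


def chunk_tokens(tokens: List[str], chunk_size: int = 125) -> List[List[str]]:
    """Split a token list into consecutive chunks of at most chunk_size tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def select_groups(scores: Dict[str, float], top_k: int = 2) -> List[str]:
    """Stand-in for the router's output stage: keep the top_k document groups
    by relevance score, so only their embeddings need to be loaded."""
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    print(augment_query("What does the UE send in RRC setup?", GLOSSARY))
    tokens = "some long specification text".split() * 100  # 400 toy tokens
    print([len(c) for c in chunk_tokens(tokens)])
    print(select_groups({"group_a": 0.1, "group_b": 0.7, "group_c": 0.2}))
```

In this sketch the default chunk size of 125 mirrors the setting the paper reports as more accurate than 500. In a full system the scores passed to `select_groups` would come from the trained router rather than a hand-written dictionary.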

Implications and Future Prospects

The research has both practical and theoretical implications. Practically, Telco-RAG shows that LLMs can be deployed in telecommunications with higher accuracy and lower resource demands. Theoretically, it expands the methodologies for hyperparameter optimization and query handling in technically complex domains. The authors propose that the advances made with Telco-RAG can be generalized to other technical fields, suggesting wider applicability of the developed techniques.

Looking forward, the paper envisages further refinement and expansion of Telco-RAG. Future prospects include improving the NN router's precision in categorizing and retrieving relevant technical documents, and potentially integrating more sophisticated natural language processing techniques to improve query understanding and response generation.

In essence, Telco-RAG offers substantial improvements over existing systems in both the accuracy and the efficiency of RAG for telecommunications. By addressing each challenge with a targeted technique, the framework also provides a scalable model that can be adapted beyond telecommunications.