CulturePark: Advancing Cross-Cultural Understanding in LLMs

Introduction

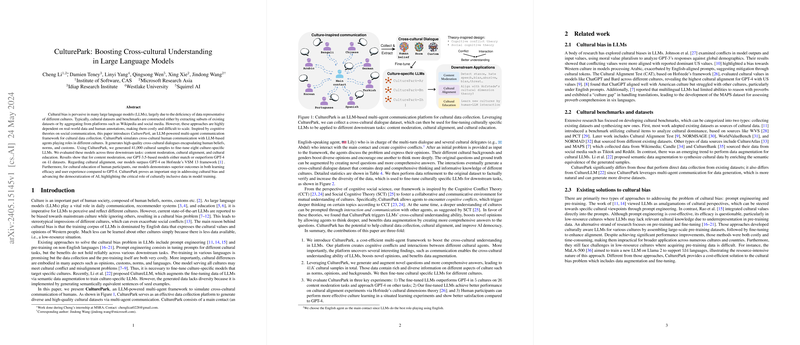

Cultural bias in LLMs is a well-documented issue, arising primarily from the predominance of English-language training data that reflects Western cultural values. Traditional approaches to building cultural datasets and benchmarks either select subsets of existing datasets or aggregate data from platforms such as Wikipedia and social media; both are resource-intensive and hard to scale. This paper introduces CulturePark, a framework inspired by cognitive theories that addresses cultural bias in LLMs through simulated cross-cultural dialogues.

CulturePark Framework

CulturePark is an LLM-powered multi-agent communication platform designed to simulate cross-cultural communication between agents representing different cultures. The system operationalizes two primary roles: the main contact, an English-speaking agent, and several cultural delegates from various cultural backgrounds. By facilitating multi-turn dialogues among these agents, CulturePark generates a diverse and detailed dataset encapsulating human beliefs, customs, and norms from different cultures.

The platform relies on two prompting techniques to keep the dialogues high in quality. Self-calibration prompts reduce cultural bias by aligning agent responses with the culturally appropriate attitudes recorded in the seed data. Self-guidance prompts and natural free chat diversify communication styles, which mitigates redundant responses and encourages novel, more comprehensive ideas.
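The two roles and the self-calibration idea described above can be sketched as a simple turn-taking loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Agent` class, the prompt wording, the speaking order, and the `echo_llm` stand-in are all hypothetical, and a real setup would replace `echo_llm` with a call to an actual model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class Agent:
    name: str     # hypothetical identifier, e.g. "delegate_ar"
    culture: str  # culture the agent represents
    role: str     # "main contact" or "cultural delegate"

def build_prompt(agent: Agent, seed_question: str, seed_attitude,
                 history: List[Tuple[str, str]]) -> str:
    """Assemble one agent's prompt. The wording is illustrative only."""
    lines = [
        f"You are a {agent.role} representing {agent.culture} culture.",
        f"Topic: {seed_question}",
    ]
    if seed_attitude is not None:
        # Self-calibration (assumed form): anchor the agent to the seed attitude.
        lines.append(f"Seed data for your culture records this attitude: "
                     f"{seed_attitude}. Keep your replies consistent with it.")
    lines.append("Conversation so far:")
    lines.extend(f"{speaker}: {text}" for speaker, text in history)
    # Assumed anti-redundancy instruction, standing in for self-guidance prompts.
    lines.append("Reply with one new point; do not repeat earlier points.")
    return "\n".join(lines)

def simulate_dialogue(main_contact: Agent, delegates: List[Agent],
                      seed_question: str, attitudes: Dict[str, str],
                      llm: Callable[[str], str],
                      rounds: int = 2) -> List[Tuple[str, str]]:
    """Run a multi-turn dialogue; each round, every agent speaks once."""
    history: List[Tuple[str, str]] = []
    for _ in range(rounds):
        for agent in [main_contact, *delegates]:
            prompt = build_prompt(agent, seed_question,
                                  attitudes.get(agent.culture), history)
            history.append((agent.name, llm(prompt)))
    return history

def echo_llm(prompt: str) -> str:
    """Offline stand-in for a real model call so the sketch runs as-is."""
    return f"(reply to a {len(prompt.splitlines())}-line prompt)"
```

Passing the model as a plain callable keeps the loop independent of any particular LLM provider; the returned list of (speaker, text) pairs is the raw dialogue from which cultural opinions would later be extracted.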

Data Generation and Refinement

CulturePark generated 41,000 cultural samples from an initial dataset of 4,100 seed inputs sourced from the World Values Survey (WVS) and Global Attitudes surveys (GAS). To prepare this data for fine-tuning culture-specific LLMs, a multi-step data refinement process is applied:

- Extracting cultural opinions: Opinions from cross-cultural dialogues are extracted via GPT-4.

- Fact verification: Extracted opinions are validated for factual accuracy and relevance.

- Removing redundancy: Semantic clustering techniques are used to enhance data diversity by removing similar data points.
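The three refinement steps above can be sketched as a small pipeline. This is a rough illustration under loud assumptions: `extract` and `verify` are hypothetical callables standing in for the GPT-4 extraction and the fact-verification step, and the redundancy filter swaps in a greedy bag-of-words cosine-similarity check in place of the semantic clustering the paper describes, purely so the example runs without an embedding model.

```python
import math
import re
from collections import Counter
from typing import Callable, List

def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a semantic embedding."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def drop_near_duplicates(opinions: List[str], threshold: float = 0.8) -> List[str]:
    """Keep an opinion only if it is not too similar to any already kept."""
    kept: List[str] = []
    kept_vecs: List[Counter] = []
    for opinion in opinions:
        vec = bag_of_words(opinion)
        if all(cosine(vec, other) < threshold for other in kept_vecs):
            kept.append(opinion)
            kept_vecs.append(vec)
    return kept

def refine(dialogues: List[str],
           extract: Callable[[str], List[str]],
           verify: Callable[[str], bool],
           threshold: float = 0.8) -> List[str]:
    """Extract opinions, keep the verified ones, then remove near-duplicates."""
    opinions = [op for dialogue in dialogues for op in extract(dialogue)]
    verified = [op for op in opinions if verify(op)]
    return drop_near_duplicates(verified, threshold)
```

The `threshold` value of 0.8 is an arbitrary choice for illustration; with real sentence embeddings it would need to be tuned against the desired trade-off between diversity and dataset size.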

Experimental Evaluation

Content Moderation

The fine-tuned models were evaluated on content moderation tasks across eight cultures: Arabic, Bengali, Chinese, German, Korean, Portuguese, Spanish, and Turkish. The models fine-tuned on CulturePark data either matched or outperformed GPT-4 on 41 datasets, indicating that the generated data improves model performance on culture-specific tasks.

Cultural Alignment

Using Hofstede's VSM 13 framework, the culturally fine-tuned models exhibited closer cultural alignment than GPT-4. Evaluations on Hofstede's cultural dimensions showed that these models achieved significantly lower Euclidean distances, indicating closer alignment with the values and norms of the evaluated cultures.
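To make the distance metric concrete, the sketch below computes a Euclidean distance over the six VSM 2013 dimensions (power distance, individualism, masculinity, uncertainty avoidance, long-term orientation, indulgence). It assumes the model's questionnaire answers have already been converted to per-dimension scores and that reference scores for the target culture are available; the function name and the example numbers are hypothetical and are not results from the paper.

```python
import math
from typing import Dict

# The six dimensions measured by the VSM 2013 questionnaire.
DIMENSIONS = ("PDI", "IDV", "MAS", "UAI", "LTO", "IVR")

def cultural_distance(model_scores: Dict[str, float],
                      reference_scores: Dict[str, float]) -> float:
    """Euclidean distance between two score profiles; lower means closer."""
    return math.sqrt(sum((model_scores[d] - reference_scores[d]) ** 2
                         for d in DIMENSIONS))

# Illustrative numbers only: a model profile that differs from the
# reference on two dimensions (by 3 and by 4 points).
reference = {d: 50.0 for d in DIMENSIONS}
model = dict(reference, PDI=53.0, IDV=54.0)
distance = cultural_distance(model, reference)  # sqrt(3**2 + 4**2)
```

Under this reading, comparing two models for one culture amounts to comparing their distances to the same reference profile.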

Situated Learning for Cultural Education

For cultural education, a human study involving 24 participants validated the efficacy of the fine-tuned models. Participants who interacted with CulturePark fine-tuned models showed better learning outcomes and reported higher satisfaction than those who interacted with GPT-4. This indicates that CulturePark models provide more relevant and detailed cultural insights, supporting both academic and practical understanding of different cultures.

Implications and Future Directions

CulturePark has both practical and theoretical implications:

- Practical Implications: CulturePark promotes more inclusive and equitable AI applications by providing culturally sensitive and representative outputs, thereby reducing stereotypes and social biases. This can enhance user trust and adoption of AI systems in diverse cultural contexts.

- Theoretical Implications: The framework contributes to the body of research on cultural representation in AI and natural language processing, offering a scalable, cost-effective method for generating culturally diverse data. It highlights the importance of multi-agent communication in uncovering and understanding deep-seated cultural norms and beliefs.

Future research could cover more cultures and apply the fine-tuning to other open-source models such as Llama2, broadening the scope and impact of CulturePark. Additionally, investigating the interplay between general model capabilities and cultural fine-tuning could yield insights into improving LLMs' general reasoning abilities while maintaining cultural specificity.

Conclusion

CulturePark stands as a promising approach to mitigating cultural bias in LLMs. By leveraging a multi-agent, cognitive conflict-inspired framework, it successfully generates high-quality, diverse cultural data and fine-tunes models that outperform state-of-the-art LLMs in multiple cultural contexts. The potential for enhanced cross-cultural understanding and AI democratization marks CulturePark as a valuable contribution to the field of natural language processing and AI ethics.