Efficient Deployment of Long-Context Transformers

Introduction

Long-context transformers can process entire books or large code repositories in a single pass, which makes them invaluable for applications that depend on very large inputs. Yet they are far more expensive to deploy than their short-context counterparts, often prohibitively so. This article breaks down a paper that tackles this issue and walks through both its insights and the practical directions it suggests.

Challenges in Deploying Long-Context Transformers

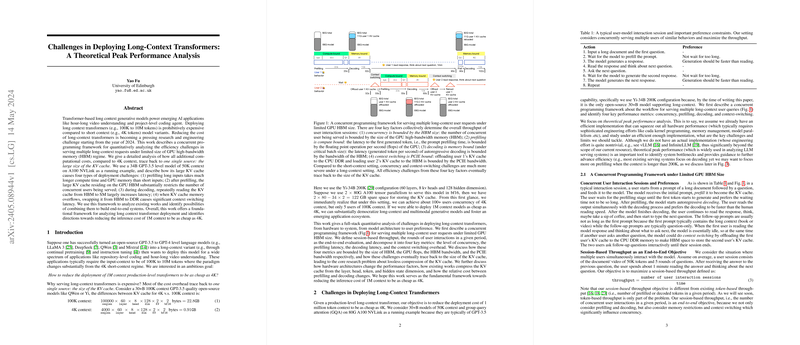

Deploying a long-context transformer raises several distinct challenges. The crux of the problem is the KV cache: the keys and values the model stores for every token it has already processed, so that attention does not need to recompute them when generating each new token. The key challenges are:

- Prefilling Latency: Processing the prompt before generation starts (prefilling) takes significantly more time and GPU memory for long inputs than for short ones.

- Concurrency: The large KV cache uses up considerable GPU high-bandwidth memory (HBM), limiting the number of concurrent user sessions.

- Decoding Latency: Repeatedly reading from the KV cache during output generation increases latency.

- Context Switching: When memory overflows, swapping KV cache data between GPU and CPU adds significant delay.

Quantitative Analysis Using a Concurrent Programming Framework

To analyze these challenges, the paper introduces a concurrent programming framework that quantifies the efficiency bottlenecks in serving multiple long-context requests with limited GPU HBM. Using a model with a 50K-token context on an A100 GPU as the running example, it ties each challenge to a specific hardware limit:

- Concurrency Bound: The level of concurrency is directly limited by the size of the GPU HBM.

- Compute Bound Prefilling: The time taken to prefill the input is bounded by the GPU's floating point operations per second (FLOPS).

- Memory Bound Decoding: The delay in decoding sequences is constrained by the HBM bandwidth.

- PCIe Bound Context Switching: The latency of switching contexts is bounded by the PCIe bandwidth between GPU HBM and CPU DDR memory.
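
Each of the four bounds above can be read as a simple ratio of work to hardware capacity. The Python sketch below is a minimal illustration under stated assumptions, not the paper's own implementation: the `Hardware` values are approximate public figures for an A100 80GB, and the weight size, KV cache size, and FLOP count are hypothetical inputs picked for a 34B-parameter model stored in 16-bit precision.

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    hbm_bytes: float       # GPU HBM capacity in bytes
    peak_flops: float      # peak dense throughput in FLOP/s
    hbm_bandwidth: float   # HBM read bandwidth in bytes/s
    pcie_bandwidth: float  # GPU <-> CPU transfer bandwidth in bytes/s


def max_concurrency(hw, weight_bytes, kv_bytes_per_request):
    """Concurrency bound: how many KV caches fit in HBM next to the weights."""
    free_bytes = hw.hbm_bytes - weight_bytes
    return max(0, int(free_bytes // kv_bytes_per_request))


def prefill_seconds(hw, prefill_flops):
    """Compute bound: prefilling time is limited by peak FLOP/s."""
    return prefill_flops / hw.peak_flops


def decode_step_seconds(hw, weight_bytes, kv_bytes_per_request):
    """Memory bound: each decoded token reads the weights and the KV cache."""
    return (weight_bytes + kv_bytes_per_request) / hw.hbm_bandwidth


def context_switch_seconds(hw, kv_bytes_per_request):
    """PCIe bound: moving one request's KV cache between GPU and CPU."""
    return kv_bytes_per_request / hw.pcie_bandwidth


# Assumed, approximate A100 80GB figures (not taken from the article).
A100 = Hardware(
    hbm_bytes=80 * 1024**3,
    peak_flops=312e12,
    hbm_bandwidth=2.0e12,
    pcie_bandwidth=32e9,
)

# Hypothetical workload: 34B parameters at 2 bytes each, one 50K-token request.
weight_bytes = 34e9 * 2
kv_bytes_50k = 12.3e9                  # assumed KV cache size for 50K tokens
prefill_flops_50k = 2 * 34e9 * 50_000  # matrix-multiply work only, no attention

print("max concurrent requests:", max_concurrency(A100, weight_bytes, kv_bytes_50k))
print("prefill seconds:", prefill_seconds(A100, prefill_flops_50k))
print("seconds per decoded token:", decode_step_seconds(A100, weight_bytes, kv_bytes_50k))
print("context switch seconds:", context_switch_seconds(A100, kv_bytes_50k))
```

The prefill estimate here counts only the 2 × parameters × tokens matrix-multiply work and leaves out the attention term, which grows quadratically with context length, so it understates prefilling time for long contexts. Real systems also fall short of peak FLOP/s and peak bandwidth, so all four numbers are best read as optimistic limits.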

Numerical Results and Performance Metrics

The analysis shows stark differences between short and long contexts. For a 34B model, the KV cache for a 4K context requires only 0.91 GB, while the KV cache for a 100K context requires 22.8 GB, about 25 times more. Because the cache grows linearly with context length, memory cost rises in step with the input, and the compute and data-movement costs that depend on the cache rise with it.
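
The KV cache figures can be approximated with a few lines of arithmetic. The sketch below assumes a 34B-class model with 60 layers, 8 key-value heads (grouped-query attention), a head dimension of 128, and 16-bit values. These architecture numbers are assumptions chosen for illustration and are not stated in this article.

```python
def kv_cache_bytes(num_tokens, num_layers=60, num_kv_heads=8,
                   head_dim=128, bytes_per_value=2):
    """Size of the KV cache for one request, in bytes.

    The leading factor of 2 accounts for storing both keys and values.
    All default architecture values are assumptions for illustration.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * num_tokens


GIB = 1024**3  # report sizes in units of 2**30 bytes

for tokens in (4_000, 100_000):
    size = kv_cache_bytes(tokens) / GIB
    print(f"{tokens:>7} tokens: {size:.2f} GiB")
```

Under these assumptions the results come out at roughly 0.92 and 22.9 when expressed in units of 2^30 bytes, in line with the figures quoted above. The per-token cost is fixed by the architecture, so the only lever at a given context length is to shrink one of the factors: fewer layers or key-value heads, a smaller head dimension, or fewer bytes per value.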

Key Findings:

- Prefilling for 50K tokens can take around 14.1 seconds, compared to just 0.89 seconds for 4K tokens.

- Concurrent user support drops from about 20 users (4K context) to only 1 user (50K context) per GPU.

- Decoding latency rises only slightly with longer contexts, whereas context switching overhead rises significantly.

Implications and Future Directions

This paper provides a foundational framework that identifies the principal source of inefficiency: the size of the KV cache. Practical implications include:

- Optimizing Memory Use: Methods to compress the KV cache without losing information could democratize access to long-context models.

- Prefilling and Decoding: Innovations that reduce prefilling and decoding latency are crucial. For instance, shrinking the KV cache for a 100K context to around 1 GB would greatly improve cost efficiency.

Moving forward, integrating multiple existing approaches to build an end-to-end optimized system is promising. Collaborative efforts could yield substantial advancements, making the deployment of long-context transformers as cost-effective as their short-context counterparts.

Conclusion

In summary, deploying long-context transformers efficiently poses several significant challenges rooted in the size of the KV cache. The concurrent programming framework provided in the paper offers a detailed analysis of these issues and identifies key areas for optimization. As the AI community continues to innovate, working towards compressing the KV cache and improving both prefilling and decoding processes will be crucial steps in enabling widespread use of these powerful models.