Exploring Multimodal Graph Theory Problems Through VisionGraph

Introduction: The Challenge of Multimodal Graphs in AI

Graph theory, a fundamental area of mathematics, poses distinctive challenges for AI systems when its problems are presented visually rather than as text. A model must first perceive the graph's structure from an image (which nodes exist and which edges connect them) and then reason over that structure to solve the problem. The paper introduces VisionGraph, a benchmark designed to evaluate how well Large Multimodal Models (LMMs) solve graph theory problems posed in a visual context.

VisionGraph Benchmark: An Overview

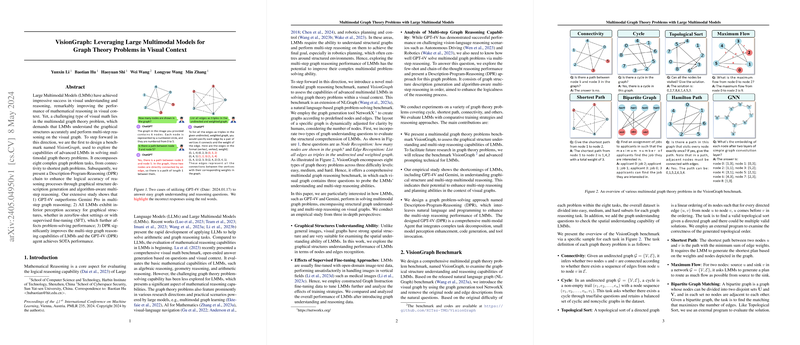

The VisionGraph benchmark tests the structural comprehension and reasoning abilities of LMMs such as GPT-4V and Gemini Pro on multimodal graph theory problems. Each graph is presented as an image whose layout is adjusted for clarity, and the benchmark includes two kinds of questions:

- Graph Understanding Questions: These assess basic recognition skills, such as identifying nodes and listing edges.

- Graph Theory Problems: Spread over three difficulty levels (easy, medium, hard), these problems challenge models with tasks that are central to graph theory, like finding the shortest path, detecting cycles, or performing topological sorts.
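To make two of these problem types concrete, here is a minimal Python sketch of topological sorting and cycle detection. Kahn's algorithm handles both at once: if it cannot order every node, the graph contains a cycle. The sketch works on an edge list rather than an image, and the function name and input format (nodes labeled `0..n-1`, edges as `(u, v)` pairs meaning `u -> v`) are illustrative assumptions, not part of VisionGraph.

```python
from collections import deque


def topological_sort(num_nodes, edges):
    """Return a topological order of a directed graph, or None if it has a cycle.

    Assumes nodes are labeled 0..num_nodes-1 and each edge (u, v) means u -> v.
    """
    adjacency = [[] for _ in range(num_nodes)]
    in_degree = [0] * num_nodes
    for u, v in edges:
        adjacency[u].append(v)
        in_degree[v] += 1

    # Start from every node that has no incoming edges.
    queue = deque(n for n in range(num_nodes) if in_degree[n] == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        # "Remove" this node's outgoing edges; newly freed nodes join the queue.
        for neighbor in adjacency[node]:
            in_degree[neighbor] -= 1
            if in_degree[neighbor] == 0:
                queue.append(neighbor)

    # Nodes never emitted lie on a cycle or are reachable only through one.
    return order if len(order) == num_nodes else None
```

For example, `topological_sort(4, [(0, 1), (1, 2), (0, 3), (3, 2)])` returns `[0, 1, 3, 2]`, while the three-node cycle `[(0, 1), (1, 2), (2, 0)]` yields `None`.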

Experimental Findings: LMMs Under the Lens

The paper evaluates a range of LMMs on VisionGraph, examining both how accurately they perceive graph structures and how well they solve the problems. Its main observations:

- Graphical Structure Comprehension: All LMMs, including the high-profile GPT-4V, showed limitations in accurately perceiving detailed graphical structures, such as edge recognition, highlighting a need for more focused training.

- Problem-solving Accuracy: LMMs' success on multimodal graph theory problems varied. For instance, GPT-4V handled node recognition well and outperformed Gemini Pro on multi-step reasoning tasks such as identifying paths within graphs.

- Impact of Supervised Fine-Tuning: Additional fine-tuning using graph-specific data consistently improved model performance, suggesting that tailored training regimens are beneficial.
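One way to quantify the edge-recognition weakness described above is to compare the edge list a model reads off an image with the true edge list. The sketch below computes precision, recall and F1 over undirected edges. It is a hypothetical scoring scheme for illustration only; VisionGraph's own metric may differ (for example, a strict exact-match criterion).

```python
def edge_recognition_scores(predicted, reference):
    """Precision, recall and F1 of a predicted edge list against the true one.

    Hypothetical metric: edges are treated as undirected, so (a, b) and (b, a)
    count as the same edge, and duplicates are ignored.
    """
    def normalize(edges):
        return {tuple(sorted(edge)) for edge in edges}

    pred = normalize(predicted)
    ref = normalize(reference)
    true_positives = len(pred & ref)

    precision = true_positives / len(pred) if pred else 0.0
    recall = true_positives / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

If a model reports edges `[(1, 0), (1, 2), (0, 3)]` for a graph whose true edges are `[(0, 1), (1, 2), (2, 3)]`, it gets two of three edges right, so precision, recall and F1 are all 2/3. A single misread edge is penalized twice: once as a spurious edge and once as a missed one.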

Advanced Solutions: The DPR Approach

To address some of these deficiencies, the paper proposes Description-Program-Reasoning (DPR), which combines natural-language description of the graph with algorithmic reasoning so that a base model can better comprehend and solve graph-related tasks. DPR strengthens LMMs' multimodal reasoning through three components:

- Generating Detailed Descriptions: Describing the graph's structure in natural language to set a context.

- Algorithmic Reasoning: Generating code that implements the algorithm a given graph theory problem calls for.

- Enhanced Execution: Using external tools to perform and verify multi-step reasoning based on the generated descriptions and algorithms.
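The following is a rough sketch of how the Description and Program stages fit together, using shortest path as the example task. It assumes the model's description lists edges in one fixed sentence template ("Node X is connected to node Y with weight W"). That template, the regular expression and both function names are hypothetical, and the paper's actual prompts and generated programs will differ. In DPR the program would be written by the model and run by an external tool; here it is hand-written to show what such a program computes.

```python
import heapq
import re

# Hypothetical sentence template a model might use in the Description step.
EDGE_PATTERN = re.compile(
    r"node (\d+) is connected to node (\d+) with weight (\d+)", re.IGNORECASE
)


def parse_description(text):
    """Turn a natural-language graph description into an undirected weighted adjacency map."""
    graph = {}
    for u, v, w in EDGE_PATTERN.findall(text):
        u, v, w = int(u), int(v), int(w)
        graph.setdefault(u, []).append((v, w))
        graph.setdefault(v, []).append((u, w))
    return graph


def shortest_path_length(graph, source, target):
    """Dijkstra's algorithm: minimum total weight from source to target, or None if unreachable."""
    distances = {source: 0}
    heap = [(0, source)]
    while heap:
        dist, node = heapq.heappop(heap)
        if node == target:
            return dist
        if dist > distances.get(node, float("inf")):
            continue  # stale entry: a shorter route to this node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = dist + weight
            if candidate < distances.get(neighbor, float("inf")):
                distances[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return None
```

Given the description "Node 0 is connected to node 1 with weight 4. Node 0 is connected to node 2 with weight 1. Node 2 is connected to node 1 with weight 2. Node 1 is connected to node 3 with weight 5.", the program finds the route 0 → 2 → 1 → 3 with total weight 8. The model's final reasoning step would then state that answer. The value of separating the stages is that arithmetic and search are delegated to executed code, so errors come mainly from the description, not from multi-step reasoning in free text.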

Practical Implications and Future Prospects

This research matters for fields where complex visual data interacts with structural and logical challenges, such as autonomous driving, robotics, and interactive educational technologies. Looking forward, more robust multimodal models could benefit from:

- Balanced Training Data: Creating datasets with balanced complexity across different types of graph theory problems.

- Contextual and Algorithmic Training: Broadening training to include more context-sensitive tasks and algorithmic reasoning could improve LMMs' efficiency and adaptability.
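A simple way to act on the balanced-data suggestion is stratified sampling: group training examples by problem type and difficulty, then draw the same number from each group so that no single task or level dominates. The sketch below assumes each example is a dict with hypothetical `"task"` and `"difficulty"` keys. It illustrates the idea and is not a procedure described in the paper.

```python
import random
from collections import defaultdict


def balanced_sample(examples, per_bucket, seed=0):
    """Draw up to `per_bucket` examples from each (task, difficulty) bucket.

    Assumes each example is a dict with hypothetical "task" and "difficulty" keys.
    Buckets smaller than `per_bucket` contribute all of their examples.
    """
    buckets = defaultdict(list)
    for example in examples:
        buckets[(example["task"], example["difficulty"])].append(example)

    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    sample = []
    for key in sorted(buckets):
        items = buckets[key]
        sample.extend(rng.sample(items, min(per_bucket, len(items))))
    return sample
```

Capping each bucket, rather than oversampling small ones, avoids duplicating scarce hard examples. The trade-off is that the overall dataset shrinks to the size of the caps.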

Ethical and Technical Considerations

The authors address potential biases by acknowledging the limitations inherent in the training data of models like GPT-4V and Gemini Pro. They emphasize transparency and reproducibility in their research by making their methods and datasets publicly available, advocating for a responsible approach to AI development.

Concluding Thoughts

The VisionGraph benchmark highlights both the progress and the gaps in current AI systems on multimodal graph theory problems. By offering a structured way to assess and improve model capabilities, this research contributes to the gradual development of AI problem-solving skills, which could lead to more capable, versatile, and reliable AI systems.