Understanding ChuXin: A Fully Open-Source LLM with Extended Context Length

Introduction to ChuXin

ChuXin is a notably open-source LLM that not only provides model weights but also shares its entire training framework, including data, processes, and evaluation techniques. This transparency is intended to facilitate better research and innovation within the field of AI, specifically in NLP. ChuXin comes in two versions, the base model with a 1.6 billion parameter count trained on a diverse dataset of 2.3 trillion tokens, and an extended model capable of handling up to 1 million tokens in context length.

Comprehensive Open-Source Approach

Unlike many models that offer limited access to their inner workings, ChuXin presents a full suite of resources:

- Training Data: The datasets contributing to ChuXin’s knowledge span a myriad of sources from web pages to encyclopedias, emphasizing diversity.

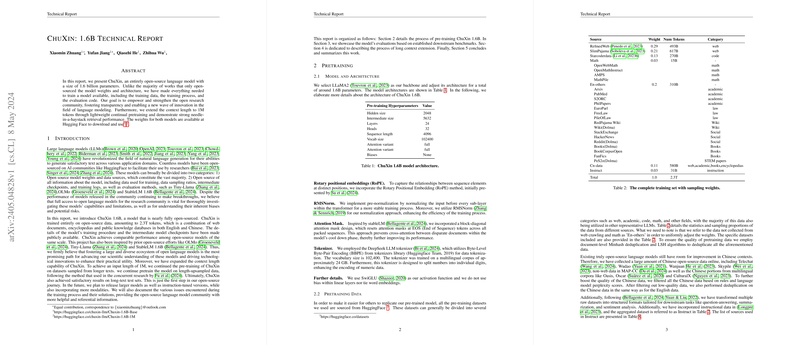

- Model Architecture: ChuXin uses a modified version of the LLaMA2 architecture enhanced by recent breakthroughs in AI research, such as RoPE for embedding positional relations and RMSNorm for normalization.

- Evaluation: Comprehensive evaluation methods and benchmarks are provided, ensuring that researchers can accurately measure the model’s performance and compare it against other models.

Breakdown of Training Data and Process

The training data for ChuXin is meticulously chosen to encompass a broad spectrum of knowledge:

- Source and Composition: Data sources include a mix from web documents, academic databases, code repositories, and more, with heavy emphasis on quality and diversity.

- Tokenization and Model Setup: Utilization of Byte-Level Byte-Pair Encoding and a multilingual corpus ensure that ChuXin can handle textual input effectively across different languages and formats.

ChuXin's training leverages cutting-edge techniques such as FlashAttention-2 for improved processing efficiency and BFloat16 precision to balance computational cost and performance. The strategic implementation of these advanced methods allows ChuXin to manage its extensive parameter settings without compromising on training speed.

Numerical Outcomes and Model Evaluation

The evaluation of ChuXin spans multiple benchmarks:

- Performance Metrics: ChuXin demonstrates strong performance across a variety of English and Chinese benchmarks, showing competitive results compared to other large models in tasks involving commonsense reasoning and reading comprehension.

- Extended Context Handling: Tests on the "Needle In a Haystack" benchmark indicate that ChuXin maintains robust retrieval abilities even with significantly expanded context windows, a relatively rare capability among current models.

Extending Context Length

A highlight of ChuXin is its ability to process remarkably long text sequences:

- Context Extension Strategy: ChuXin utilizes a curriculum learning strategy to gradually extend the context window it can handle, culminating in the ability to process up to 1 million tokens.

- Effect on Model Performance: Despite the extended context, ChuXin retains strong performance on tasks designed for shorter contexts, showcasing its versatility.

Implications and Future Directions

ChuXin’s open-source nature and extended context capabilities could greatly influence the future landscape of AI research:

- Open Science and Collaboration: By providing complete access to all aspects of ChuXin’s training and architecture, the model sets a new standard in transparency, potentially accelerating advancements in LLMing.

- Innovation in Context Handling: The techniques developed to extend ChuXin’s context window could inspire new research into handling long-form texts, which are common in many real-world applications such as document analysis and summarization.

In conclusion, ChuXin represents not just a step forward in model transparency and performance but also serves as a beacon for future explorations in AI, urging the community towards more open, collaborative, and innovative research environments.