The Qwen2.5-1M technical report details the design, training, and deployment strategies of a series of LLMs engineered to handle up to one million tokens of context. The report systematically covers modifications to the training regime, architectural adaptations, and inference optimizations necessary for scaling context length while preserving performance on both long and short sequences.

The technical approach is divided into two main parts:

1. Long-Context Training and Post-Training

- Pre-Training Strategy: The models are trained on a combination of natural and synthetic long-text data. Synthetic tasks such as Fill-in-the-Middle, keyword-based retrieval, and paragraph reordering are mixed into the corpus to force the model to learn long-range dependencies. Context length is expanded progressively: training starts with 4096-token sequences and the window is then increased to 32K, 65K, 131K, and finally 262K tokens. At each phase the base frequency of the Rotary Positional Embedding (RoPE) is adjusted so that attention can still resolve positions across the longer window. RULER scores improve across these successive stages, indicating that each expansion step strengthens the models' handling of long contexts.

- Post-Training Enhancements: To improve long-context performance without degrading capabilities on shorter inputs, the report describes a two-stage supervised fine-tuning (SFT) process. The model is first fine-tuned exclusively on shorter sequences; a mixed dataset containing sequences of up to 262K tokens is then introduced. An offline reinforcement learning (RL) phase, akin to Direct Preference Optimization, follows. This RL stage trains only on preference pairs from short-context samples yet generalizes to long-context tasks, as shown by improvements on the LongBench-Chat benchmark. Because human-annotated long instructions are scarce, the long instruction data is synthesized with an agent-based framework that uses retrieval-augmented generation and multi-hop reasoning.
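
The link between RoPE base frequency and usable context length in the progressive schedule above can be made concrete with a small sketch. It uses the standard RoPE inverse-frequency formula; the context lengths are the stages named in the text, while the base values and the head dimension are hypothetical placeholders chosen for illustration, not the report's settings.

```python
import math

def rope_inv_freq(head_dim: int, base: float) -> list:
    """Standard RoPE inverse frequencies: base^(-2i/d) for i in [0, d/2)."""
    return [base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]

def longest_wavelength(head_dim: int, base: float) -> float:
    """Wavelength, in tokens, of the slowest-rotating dimension pair."""
    return 2.0 * math.pi / rope_inv_freq(head_dim, base)[-1]

# Context lengths follow the stages named in the text. The base values are
# HYPOTHETICAL placeholders, not values taken from the report.
SCHEDULE = [
    (4_096, 1e4),
    (32_768, 1e6),
    (65_536, 1e6),
    (131_072, 5e6),
    (262_144, 1e7),
]

HEAD_DIM = 128  # assumed head dimension, for illustration only

def describe_schedule(schedule=SCHEDULE, head_dim=HEAD_DIM):
    """Return (seq_len, base, longest wavelength, wavelength >= seq_len) rows."""
    rows = []
    for seq_len, base in schedule:
        wl = longest_wavelength(head_dim, base)
        rows.append((seq_len, base, wl, wl >= seq_len))
    return rows

if __name__ == "__main__":
    for seq_len, base, wl, covers in describe_schedule():
        print(f"{seq_len:>7} tokens  base={base:>12,.0f}  "
              f"longest wavelength={wl:>14,.0f}  covers context: {covers}")
```

Raising the base stretches the slowest rotations, so distant positions remain distinguishable instead of wrapping around within the window. That is the intuition behind adjusting the base at each stage; the check `wl >= seq_len` is only a rough heuristic, not a criterion stated in the report.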

2. Efficient Inference and Deployment

- Length Extrapolation via Dual Chunk Attention (DCA): The report uses a training-free length extrapolation method, Dual Chunk Attention, which splits the input into chunks and remaps relative positions. Because no remapped query-key distance exceeds the maximum length seen during training, rotary position embeddings remain effective even for contexts of up to one million tokens. The attention scaling technique from YaRN is applied alongside DCA to keep attention stable at these lengths.

- Sparse Attention and Memory Optimizations: Because full attention is prohibitively expensive for ultra-long contexts, the report uses a sparse attention mechanism inspired by MInference. It dynamically selects critical tokens following a "Vertical-Slash" pattern, which reduces computation while keeping accuracy close to that of full attention. Combining it with chunked prefill, which processes the input in smaller chunks, sharply reduces VRAM usage (up to 96.7% savings for activation storage in MLP layers). In addition, a sparsity refinement method based on an attention recall metric calibrates the sparse configuration for 1M-token sequences.

- Inference Engine Optimizations: The deployment framework (open-sourced as part of BladeLLM and integrated with vLLM) incorporates kernel-level optimizations, including tuned sparse attention kernels and MoE kernel enhancements, which exploit recent GPU architectures (e.g., NVIDIA Ampere/Hopper and AMD MI300) to reach up to 90% of peak FLOPs utilization. Further efficiency comes from dynamic chunked pipeline parallelism and the Totally Asynchronous Generator (TAG) scheduling system. Together, these optimizations reduce time-to-first-token by 3× to 7× in ultra-long-context scenarios across model sizes and hardware platforms.
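
The position remapping behind Dual Chunk Attention can be sketched as follows. This is a simplified illustration written from the general description of DCA (intra-chunk, successive-chunk, and inter-chunk cases), not the report's implementation; the function name and the parameters `chunk_size` and `train_len` are assumptions introduced here.

```python
def dca_relative_position(i: int, j: int, chunk_size: int, train_len: int) -> int:
    """Remapped relative position between query i and key j (causal, j <= i).

    Keys always use their offset inside their chunk. The query position
    depends on how far apart the two chunks are:
      - same chunk:       offset inside the chunk (true distance is kept)
      - adjacent chunk:   offset shifted by chunk_size, capped at train_len - 1
                          (keeps nearby tokens at their true distance)
      - farther chunks:   constant train_len - 1
    """
    if j > i:
        raise ValueError("causal attention requires j <= i")
    if not 0 < chunk_size < train_len:
        raise ValueError("expected 0 < chunk_size < train_len")

    q_chunk, k_chunk = i // chunk_size, j // chunk_size
    k_pos = j % chunk_size

    if q_chunk == k_chunk:            # intra-chunk
        q_pos = i % chunk_size
    elif q_chunk == k_chunk + 1:      # successive chunk
        q_pos = min(i % chunk_size + chunk_size, train_len - 1)
    else:                             # inter-chunk
        q_pos = train_len - 1
    return q_pos - k_pos


if __name__ == "__main__":
    chunk_size, train_len = 4, 6      # tiny values, for illustration only
    seq_len = 20
    worst = max(
        dca_relative_position(i, j, chunk_size, train_len)
        for i in range(seq_len)
        for j in range(i + 1)
    )
    print("largest remapped distance:", worst, "(limit:", train_len - 1, ")")
```

However long the sequence grows, the remapped distance stays within `[0, train_len - 1]`, which is the property the text relies on: the rotary embedding never sees a relative offset larger than those encountered during training.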

Evaluation and Performance

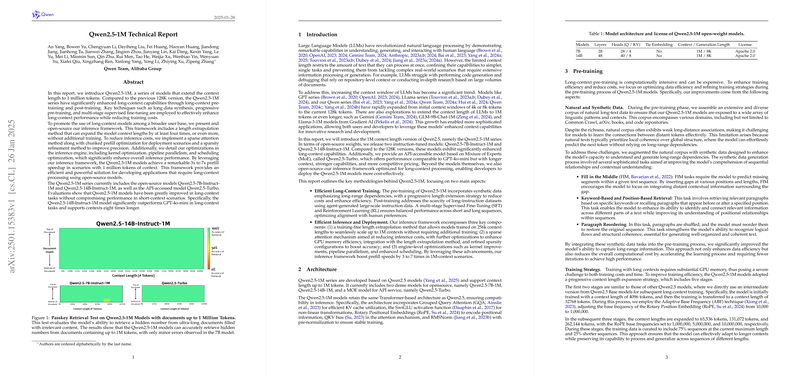

- Long-Context Benchmarks: The Qwen2.5-1M models are evaluated on tasks such as Passkey Retrieval (with 1M-token documents) and extended versions of RULER and LV-Eval. Notably, the Qwen2.5-14B-Instruct-1M variant exceeds 90% accuracy on 128K-token samples, and retrieval accuracy remains competitive in the 1M-token regime, which supports the effectiveness of the long-context training and inference strategies.

- Short-Context Performance: Despite the focus on extended context lengths, the models remain competitive on standard benchmarks for natural language understanding, coding, mathematics, and reasoning. This shows that the long-context enhancements do not come at the cost of baseline capabilities.

- Inference Speed Gains: Speed comparisons on GPUs (including NVIDIA H20 and A100) show large reductions in inference latency. For example, the Qwen2.5-14B-Instruct-1M model reduces its prefill time from approximately 12 minutes (with full attention) to around 109 seconds when using sparse attention and the optimized kernels.
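
As a quick sanity check on the figures above, the implied speedup can be computed directly. Both inputs are the approximate values quoted in the text ("approximately 12 minutes" and "around 109 seconds"), so the result is itself an estimate.

```python
# Approximate prefill times quoted in the text for Qwen2.5-14B-Instruct-1M.
full_attention_s = 12 * 60   # "approximately 12 minutes" with full attention
sparse_attention_s = 109     # "around 109 seconds" with sparse attention

speedup = full_attention_s / sparse_attention_s
print(f"estimated prefill speedup: {speedup:.1f}x")
```

The estimate of roughly 6.6× sits within the 3× to 7× range cited for time-to-first-token.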

In summary, the report presents a comprehensive framework for extending the context length of LLMs to one million tokens by innovating across the data synthesis, training, and inference pipelines. The work demonstrates that with carefully designed progressive training, specialized synthetic data, and advanced inference optimizations, it is feasible to significantly expand the operational context window without compromising accuracy or short-sequence performance.