Advancing LLMs for Turkish: Strategies and Evaluations

The paper "Bridging the Bosphorus: Advancing Turkish LLMs through Strategies for Low-Resource Language Adaptation and Benchmarking" by Emre Can Acikgoz et al. addresses the challenges of advancing LLMs for the Turkish language and the strategies available for overcoming them. It examines two main methodologies for developing Turkish LLMs: (i) adapting existing LLMs pretrained on English to Turkish and (ii) training models from scratch on Turkish pretraining data. Both approaches are supplemented with supervised instruction-tuning on a novel Turkish dataset to enhance reasoning capabilities. The paper also introduces new benchmarks and a leaderboard specifically for Turkish LLMs to facilitate fair and reproducible evaluation.

Challenges in Developing LLMs for Turkish

Although Turkish is not a low-resource language per se, it suffers from a lack of research focus, solid base models, and standardized benchmarks. The primary obstacles identified include data scarcity, model selection, evaluation challenges, and computational limitations. Addressing these gaps is essential for making advanced NLP technologies accessible for underrepresented languages like Turkish.

Methodological Approaches

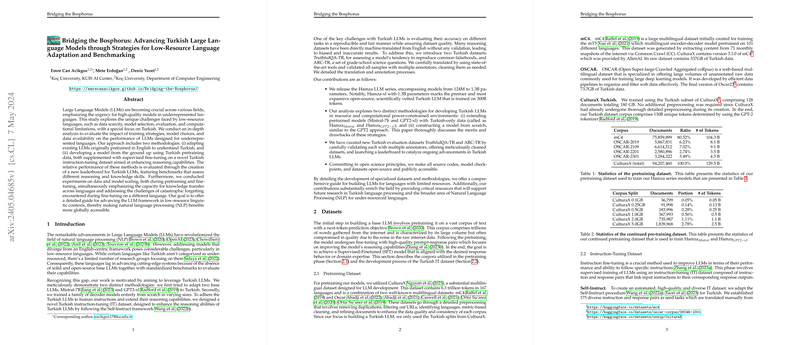

The authors pursued two distinct approaches: adapting existing LLMs (Mistral-7B and GPT2-xl) to Turkish, and training a series of decoder-only models, named Hamza, from scratch. The aim was to compare the feasibility and performance of the two approaches.

- Adaptation of Existing LLMs: The selected LLMs, Mistral-7B and GPT2-xl, were further pretrained on Turkish data. The training corpus was enlarged progressively to ensure efficient adaptation. LoRA (Low-Rank Adaptation) was employed to keep training cost-efficient and to mitigate catastrophic forgetting.

- Training from Scratch: A family of decoder-only models named Hamza, ranging from 124M to 1.3B parameters, was trained on the Turkish split of CulturaX, a multilingual dataset. Training followed architectural and training principles similar to those of GPT2, with adaptations for the specifics of the Turkish language.
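To make the LoRA idea above concrete, the following is a minimal sketch and not the authors' training code. It assumes a single weight matrix `W` with hypothetical dimensions, a hypothetical rank `r`, and a hypothetical scaling factor `alpha`; none of these values come from the paper. LoRA keeps the pretrained weight frozen and learns a low-rank update, so the effective weight is `W + (alpha / r) * B @ A`.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the LoRA rank."""
    r = len(a)  # A has shape (r, d_in); B has shape (d_out, r)
    scale = alpha / r
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def trainable_params(d_out, d_in, r):
    """Return (parameters updated by full fine-tuning, parameters in the LoRA adapter)."""
    return d_out * d_in, r * (d_out + d_in)

# Hypothetical sizes, chosen only for illustration (not taken from the paper).
d_out, d_in, r, alpha = 6, 4, 2, 4.0
rng = random.Random(0)
w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]   # frozen pretrained weight
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]    # trainable, small random init
b = [[0.0] * r for _ in range(d_out)]                                # trainable, zero init

w_eff = lora_effective_weight(w, a, b, alpha)
full, lora = trainable_params(4096, 4096, 8)
```

Because `B` starts at zero, the effective weight equals the frozen weight before any training step. For a 4096 × 4096 matrix with rank 8, the adapter trains 65,536 parameters instead of roughly 16.8 million, which is where the cost savings come from.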

Instruction-Tuning and Evaluation Datasets

A novel Turkish instruction-tuning (IT) dataset was designed following the Self-Instruct framework to improve the models' reasoning abilities. The dataset was meticulously validated and augmented with diverse and challenging tasks.

The paper also released two new evaluation datasets, TruthfulQA-TR and ARC-TR, which were carefully translated and validated by annotators to ensure quality. These datasets were designed to evaluate models on their ability to avoid common falsehoods and answer grade-school science questions, respectively.
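ARC is a multiple-choice benchmark, and a common way to score such tasks is to pick the answer option to which the model assigns the highest log-likelihood. The sketch below illustrates that scoring scheme under stated assumptions: this summary does not specify the paper's exact evaluation protocol, the function names are hypothetical, and the log-likelihood values are made up for illustration rather than taken from any model.

```python
def pick_answer(option_logprobs, option_lengths=None):
    """Return the index of the highest-scoring option.

    If option_lengths is given, each log-likelihood is divided by the
    option's length, a common normalization that avoids favoring short answers.
    """
    if option_lengths is None:
        scores = list(option_logprobs)
    else:
        scores = [lp / n for lp, n in zip(option_logprobs, option_lengths)]
    return max(range(len(scores)), key=scores.__getitem__)

def accuracy(predictions, gold):
    """Fraction of questions where the predicted index matches the gold index."""
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)

# Hypothetical per-option log-likelihoods for three questions (illustrative only).
questions = [
    {"logprobs": [-12.0, -7.5, -9.1, -15.2], "gold": 1},
    {"logprobs": [-4.2, -6.8, -5.0, -3.9], "gold": 3},
    {"logprobs": [-8.0, -8.5, -7.9, -9.3], "gold": 0},
]
predictions = [pick_answer(q["logprobs"]) for q in questions]
gold = [q["gold"] for q in questions]
score = accuracy(predictions, gold)
```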

Experimental Results

The paper's experimental results highlighted the following key findings:

- Performance on Benchmark Datasets:

The adapted models showed superior performance in Turkish question-answering tasks compared to the Hamza models trained from scratch.

- Bits-Per-Character (BPC) Metrics:

Lower BPC values indicated that the adapted models were better at compressing Turkish text, suggesting stronger language modeling capabilities.

- Implications of Fine-Tuning:

The supervised fine-tuning with the novel Turkish IT dataset yielded improvements in model performance across downstream benchmarks.

- Catastrophic Forgetting:

The paper found that models pretrained on English experienced a decline in English task performance when further trained on Turkish data, indicating catastrophic forgetting. This finding suggests the need for balanced training data to maintain multilingual capabilities.
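The bits-per-character metric in the findings above can be computed from the log-probabilities a model assigns to the tokens of a text: sum the negative log-likelihood, convert it from nats to bits, and divide by the number of characters. The sketch below is illustrative only. The function name and the token probabilities are hypothetical, and it assumes the log-probabilities are natural logarithms.

```python
import math

def bits_per_character(token_logprobs, text):
    """Compute BPC from natural-log token probabilities for `text`."""
    total_nats = -sum(token_logprobs)        # negative log-likelihood of the text
    total_bits = total_nats / math.log(2)    # convert nats to bits
    return total_bits / len(text)            # normalize by character count

text = "Merhaba dünya"
# Hypothetical case: the text is split into 4 tokens, each predicted with probability 1/8.
logprobs = [math.log(1 / 8)] * 4
bpc = bits_per_character(logprobs, text)
```

Here the model spends 12 bits on 13 characters, so BPC is 12/13, or about 0.92. Because the metric normalizes by characters rather than tokens, it allows comparison between models that use different tokenizers.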

Practical and Theoretical Implications

- Practical Implications: The release of new datasets and the open-source nature of Hamza models contribute significantly to the NLP community. They provide essential resources for future research in Turkish language processing and facilitate the development of better models.

- Theoretical Implications: The paper explores the challenges of adapting high-resource LLMs for underrepresented languages and provides insights into effective strategies for overcoming these challenges. It emphasizes the importance of dataset quality and balanced training to avoid catastrophic forgetting.

Future Developments in AI

The future of AI and NLP, as inferred from this paper, involves creating more efficient, adaptable, and inclusive models. There is a need for high-quality, diverse, and representative datasets. Additionally, innovative training techniques like LoRA and better fine-tuning strategies are crucial in advancing LLMs for underrepresented languages. Future efforts could also focus on improving hardware capabilities to support large-scale model training in resource-constrained settings.

Conclusion

This paper's comprehensive approach sheds light on the development of LLMs for Turkish and offers a roadmap for other underrepresented languages. The methodologies, benchmarks, and models it introduces make NLP technologies more accessible and effective for diverse linguistic contexts. The research underscores the need to weigh extending existing models against developing new ones from scratch, and highlights the importance of dataset quality, computational efficiency, and multilingual adaptability.